Compliance Automated Standard Solution (COMPASS), Part 6: Compliance to Policy for Multiple Kubernetes Clusters

In this post, we present an example implementation of the Compliance Policy Administration Center for the declarative policy in Kubernetes.

(Note: A list of links for all articles in this series can be found at the conclusion of this article.)

In Part 4 of this multi-part series on continuous compliance, we presented designs for Compliance Policy Administration Centers (CPAC) that facilitate the management of the various compliance artifacts connecting Regulatory Policies, expressed as Compliance-as-Code, with technical policies, implemented as Policy-as-Code. The separation of Compliance-as-Code and Policy-as-Code is purposeful, as different personas (see Part 1) need to manage their respective responsibilities independently according to their expertise, be it control and parameter selection, crosswalk mapping across regulations, or policy check implementation. The CPAC enables users to deploy and run technical policy checks according to different Regulatory Policies on different Policy Validation Points (PVPs) and, depending on how general or specialized the inspected systems are, performs specific normalization and aggregation transformations. We presented three different designs for CPAC: two for handling specialized PVPs with declarative or imperative policies, and one for orchestrating diverse PVP formats across heterogeneous IT stack levels and cloud services.

In this blog, we present an example implementation of CPAC that supports declarative policies in Kubernetes, whose design was introduced in section 2 of COMPASS Part 4. There are various policy engines in Kubernetes, such as Gatekeeper/OPA, Kyverno, and kube-bench. Here, we explore a CPAC that uses Open Cluster Management (OCM) to administer the different policy engines. This design is just one example of how a CPAC can be integrated with a PVP, and a CPAC is not limited to this design. Our CPAC can be extended through plugins to support any specific PVP, as we will see in upcoming blog posts in this series. We also describe how our CPAC connects the Compliance-as-Code artifacts produced using our OSCAL-based Agile Authoring methodology to Policy-as-Code artifacts. This bridging is the key enabler of end-to-end continuous compliance: from authoring controls and profiles, to mapping them to technical policies and rules, to collecting assessment results from PVPs, to aggregating those results against regulatory compliance into an encompassing posture for the whole environment.

We assume the compliance artifacts have been authored and approved for production runtime using our open-source Trestle-based Agile Authoring tool. Now the challenge is how to deal with the runtime policy execution and integrate the policy with compliance artifacts represented in NIST OSCAL. In this blog, we focus on the Kubernetes policies and related PVPs and show end-to-end compliance management with NIST OSCAL and the technical policies for Kubernetes.

Using Open Cluster Management for Managing Policies in Kubernetes

In Kubernetes, cluster configuration policies are written as YAML manifests whose format depends on the particular policy engine in use. To accommodate the differences among policy engines, we use Open Cluster Management (OCM) in our CPAC.

OCM provides various functionalities for managing Kubernetes clusters: the Governance Policy Framework, which distributes manifests to managed clusters (through a unified object called OCM Policy) and collects status from them; PolicyGenerator, which composes an OCM Policy from raw Kubernetes manifests; the Template function, which embeds parameters in an OCM Policy; PolicySets, which group policies; and Placement (or PlacementRule) with PlacementBinding, which select clusters. Once an OCM Policy is composed from a Kubernetes manifest specific to a policy engine, it can be deployed, and its compliance posture status can be collected, using the unified OCM approach.

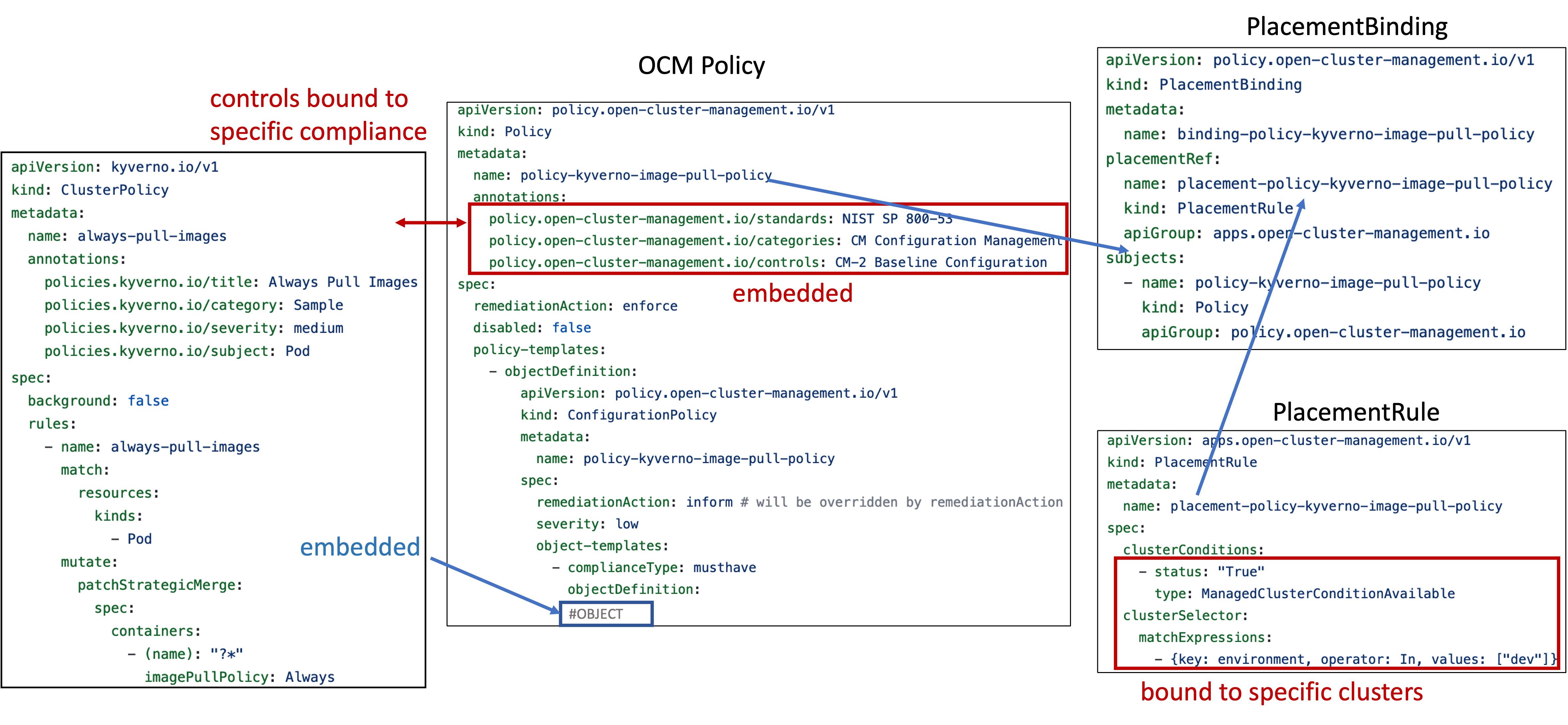

The OCM community maintains OCM Policies in the Policy Collection repository. However, these policies are published with compliance metadata and PlacementRule/PlacementBinding embedded, making it difficult to maintain and reuse policies across regulation programs without constant editing of the policies themselves, while considering them regulation agnostic. Figure 1 is a schematic diagram of policy-kyverno-image-pull-policy.yaml. It illustrates the OCM Policy containing not only the Kubernetes manifests, but also additional compliance metadata, PlacementRule, and PlacementBinding.

Figure 1: Example of Today's OCM Policy. Compliance metadata, PlacementRule, and PlacementBinding are embedded in OCM Policy
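To make this coupling concrete, here is a minimal Python sketch of the general shape of such a policy, modeled as a plain dictionary. The annotation keys and the ConfigurationPolicy wrapper follow OCM's policy conventions as we understand them. The helper name `build_ocm_policy`, the namespace, the manifest name, and the annotation values are illustrative assumptions rather than content copied from the Policy Collection file, and the PlacementRule and PlacementBinding are omitted for brevity.

```python
def build_ocm_policy(name, namespace, manifests, standards, categories, controls):
    """Wrap raw Kubernetes manifests in an OCM Policy with compliance metadata embedded."""
    # Raw manifests are carried inside a ConfigurationPolicy as object-templates.
    configuration_policy = {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "ConfigurationPolicy",
        "metadata": {"name": name},
        "spec": {
            "remediationAction": "inform",
            "severity": "low",
            "object-templates": [
                {"complianceType": "musthave", "objectDefinition": manifest}
                for manifest in manifests
            ],
        },
    }
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "Policy",
        "metadata": {
            "name": name,
            "namespace": namespace,
            # Compliance metadata lives in annotations on the policy itself,
            # which is what ties the policy to one regulation program.
            "annotations": {
                "policy.open-cluster-management.io/standards": standards,
                "policy.open-cluster-management.io/categories": categories,
                "policy.open-cluster-management.io/controls": controls,
            },
        },
        "spec": {
            "disabled": False,
            "remediationAction": "inform",
            "policy-templates": [{"objectDefinition": configuration_policy}],
        },
    }


# Hypothetical Kyverno manifest stub; a real one would also carry spec.rules.
example_manifest = {
    "apiVersion": "kyverno.io/v1",
    "kind": "ClusterPolicy",
    "metadata": {"name": "image-pull-policy"},
}

policy = build_ocm_policy(
    name="policy-kyverno-image-pull-policy",
    namespace="policies",  # assumed namespace
    manifests=[example_manifest],
    standards="NIST SP 800-53",
    categories="CM Configuration Management",
    controls="CM-6",
)
```

Because the annotations sit on the same object as the manifests, reusing the manifest under a different regulation means editing the policy itself, which is the maintenance problem described above.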

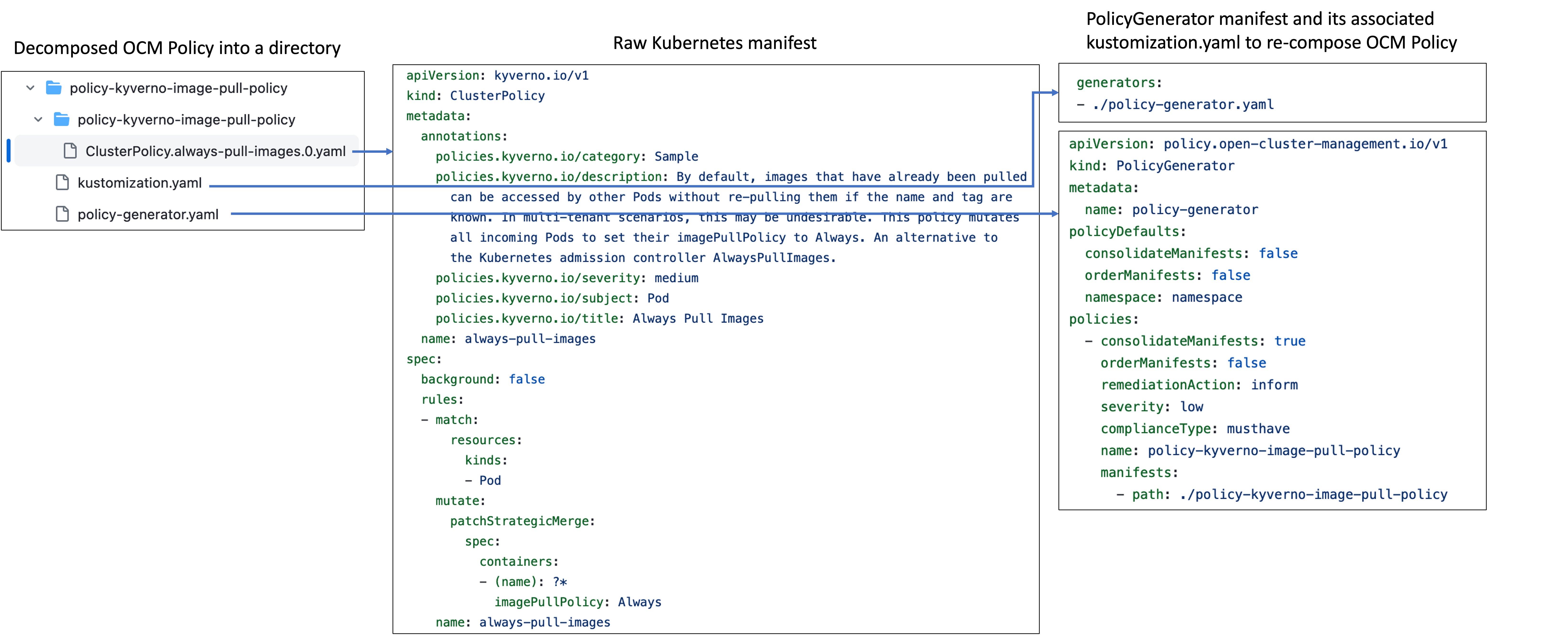

In order to make the policies reusable and composable by the OCM PolicyGenerator, we decompose from each policy its set of Kubernetes manifests. We call this manifest set “Policy Resource." Figure 2 is an example of a decomposed policy that contains three raw Kubernetes manifests (in the middle), along with a PolicyGenerator manifest and its associated kustomization.yaml (on the right). The original OCM Policy can be re-composed by running PolicyGenerator in the directory displayed on the left.

Figure 2: Decomposed OCM Policy
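As a rough illustration of the re-composition input, the Python sketch below builds the two small documents that sit next to the raw manifests: a PolicyGenerator configuration and a kustomization that registers it as a generator. The field names (`policyDefaults`, `policies`, `manifests`, `generators`) follow the PolicyGenerator and Kustomize formats as we understand them. The helper names, file names, namespace, and metadata values are assumptions made for the example.

```python
def build_policy_generator(policy_name, namespace, manifest_paths,
                           standards, categories, controls):
    """Describe how PolicyGenerator should wrap raw manifests into one OCM Policy."""
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "PolicyGenerator",
        "metadata": {"name": f"{policy_name}-generator"},
        "policyDefaults": {
            "namespace": namespace,
            # Compliance metadata is supplied here at generation time,
            # so the raw manifests stay regulation agnostic.
            "standards": standards,
            "categories": categories,
            "controls": controls,
        },
        "policies": [
            {
                "name": policy_name,
                "manifests": [{"path": path} for path in manifest_paths],
            }
        ],
    }


def build_kustomization(generator_file):
    """Kustomization that registers the PolicyGenerator file as a generator."""
    return {"generators": [generator_file]}


generator = build_policy_generator(
    policy_name="policy-kyverno-image-pull-policy",
    namespace="c2p",  # assumed namespace
    # Hypothetical file names for the three raw manifests.
    manifest_paths=["manifest-1.yaml", "manifest-2.yaml", "manifest-3.yaml"],
    standards=["NIST SP 800-53"],
    categories=["CM Configuration Management"],
    controls=["CM-6"],
)
kustomization = build_kustomization("policy-generator.yaml")
```

Supplying the metadata as arguments, rather than storing it in the manifests, is the design point: the same Policy Resource can be regenerated under a different standard by changing only the generator input.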

C2P for End-To-End Compliance Automation Enablement

Now that we have completely decoupled compliance and policy into OSCAL artifacts and Policy Resources, we need a bridge from compliance to policy: a process that takes compliance artifacts in OSCAL format and applies policies (including installing policy engines) on managed Kubernetes clusters. We call this bridging process "Compliance-to-Policy" (C2P). The Component Definition is an OSCAL entity that maps controls to specific rules for a service and to their implementation (check) by a PVP.

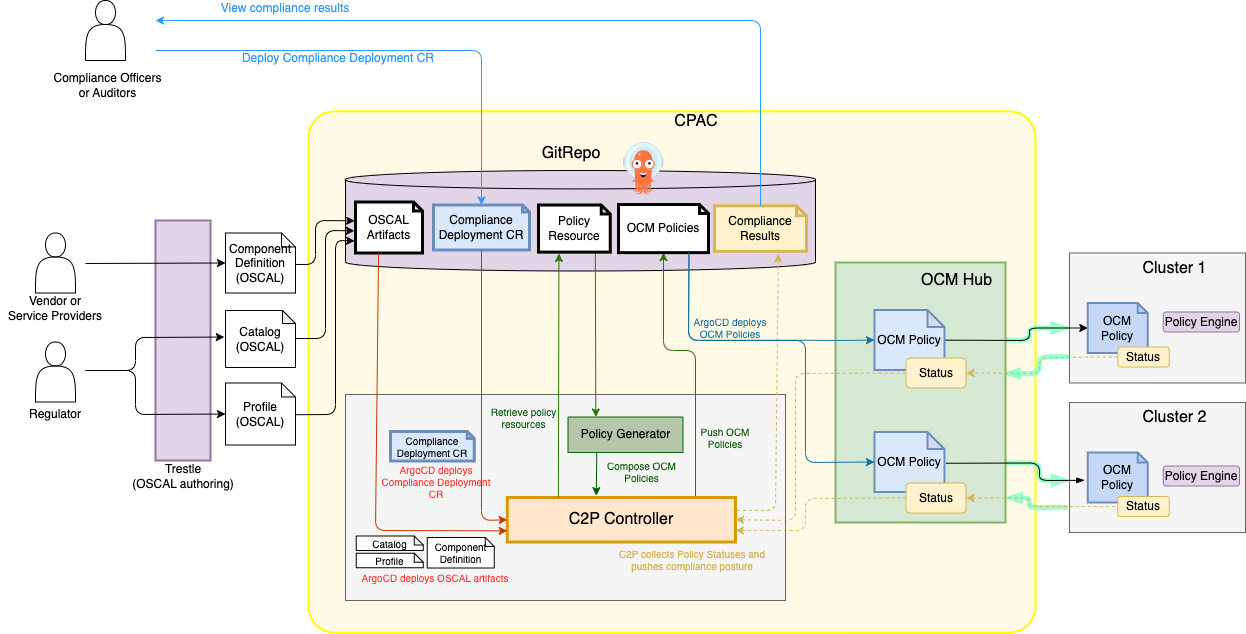

For example, we can define a Component Definition for Kubernetes specifying that cm-6 in NIST SP 800-53 maps to a rule checked by policy-kyverno-image-pull-policy in Kubernetes. C2P then interprets this Component Definition by fetching the policy-kyverno-image-pull-policy directory and running PolicyGenerator with the given compliance metadata to generate an OCM Policy. The generated OCM Policy is pushed to GitHub along with its Placement and PlacementBinding. OCM automatically distributes the OCM Policy to the managed clusters specified by the Placement and PlacementBinding. Each managed cluster periodically updates the status field of the OCM Policy in the OCM Hub. C2P collects and summarizes the OCM Policy statuses from the OCM Hub and pushes the summary as the compliance posture. Figure 3 illustrates the resulting end-to-end flow of compliance management and policy administration.
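The control-to-policy lookup at the start of this flow can be sketched in a few lines of Python. The nesting used below (`components`, `control-implementations`, `implemented-requirements`, `control-id`, `props`) follows the OSCAL Component Definition model, but the document is heavily trimmed, and the property name `policy-id` and the function name are hypothetical choices for this sketch, not necessarily what C2P reads.

```python
def map_controls_to_policies(component_definition, prop_name="policy-id"):
    """Return {control-id: [policy names]} from a simplified Component Definition."""
    mapping = {}
    root = component_definition["component-definition"]
    for component in root.get("components", []):
        for implementation in component.get("control-implementations", []):
            for requirement in implementation.get("implemented-requirements", []):
                # Collect the policy names attached to this control as props.
                policies = [
                    prop["value"]
                    for prop in requirement.get("props", [])
                    if prop.get("name") == prop_name
                ]
                if policies:
                    mapping.setdefault(requirement["control-id"], []).extend(policies)
    return mapping


# Trimmed, illustrative Component Definition (required OSCAL fields such as
# uuid and metadata are left out to keep the example short).
component_definition = {
    "component-definition": {
        "components": [
            {
                "title": "Kubernetes",
                "control-implementations": [
                    {
                        "implemented-requirements": [
                            {
                                "control-id": "cm-6",
                                "props": [
                                    {
                                        "name": "policy-id",  # hypothetical prop name
                                        "value": "policy-kyverno-image-pull-policy",
                                    }
                                ],
                            }
                        ]
                    }
                ],
            }
        ]
    }
}

control_to_policies = map_controls_to_policies(component_definition)
```

Each policy name in the result identifies a Policy Resource directory to fetch, and the control identifier is the compliance metadata passed on to PolicyGenerator.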

Figure 3 depicts the end-to-end flow steps as follows:

- Regulators provide OSCAL Catalog and Profile by using Trestle-based agile authoring methodology (see also COMPASS Part 3).

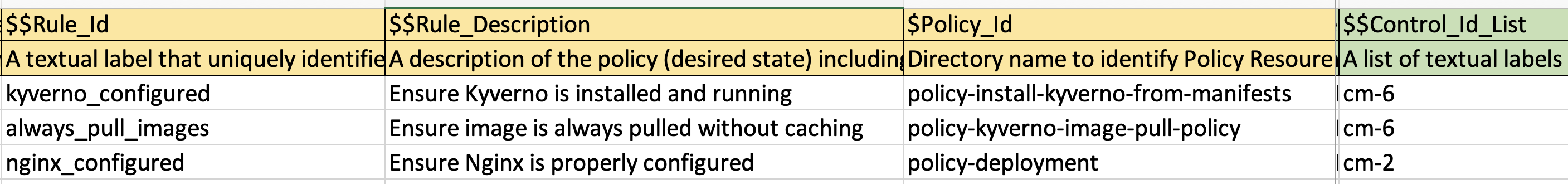

- Vendors or service providers create Component Definition referring to Catalog, Profile, and Policy Resource by Trestle (Component Definition representation in the spreadsheet below).

![Component Definition representation]()

- Compliance officers or auditors create Compliance Deployment CR that defines:

- Compliance information

- OSCAL artifact URLs

- Policy Resources URL

- Inventory information

clusterGroupsfor grouping clusters by label selectors- Binding of cluster group and compliance

- OCM connection information

- The example Compliance Deployment CR is as follows:

    apiVersion: compliance-to-policy.io/v1alpha1
    kind: ComplianceDeployment
    metadata:
      name: nist-high
    spec:
      compliance:
        name: NIST_SP-800-53-HIGH # name of compliance
        catalog:
          url: https://raw.githubusercontent.com/usnistgov/oscal-content/main/nist.gov/SP800-53/rev5/json/NIST_SP-800-53_rev5_catalog.json
        profile:
          url: https://raw.githubusercontent.com/usnistgov/oscal-content/main/nist.gov/SP800-53/rev5/json/NIST_SP-800-53_rev5_HIGH-baseline_profile.json
        componentDefinition:
          url: https://raw.githubusercontent.com/IBM/compliance-to-policy/template/oscal/component-definition.json
      policyResources:
        url: https://github.com/IBM/compliance-to-policy/tree/template/policy-resources
      clusterGroups:
        - name: cluster-nist-high # name of clusterGroup
          matchLabels:
            level: nist-high # label's key value pair of clusterlabel
      binding:
        compliance: NIST_SP-800-53-HIGH # compliance name
        clusterGroups:
          - cluster-nist-high # clusterGroup name
      ocm:
        url: http://localhost:8080 # OCM Hub URL
        token:
          secretName: secret-ocm-hub-token # name of secret volume that stores access to hub
        namespace: c2p # namespace to which C2P deploys generated resources

4. C2P takes the OSCAL artifacts and the CR, retrieves the required policies from Policy Resources, generates OCM Policies using PolicyGenerator, and pushes the generated policies with Placement/PlacementBinding to GitHub.

- GitOps engine (for example, ArgoCD) pulls the OCM Policies and

Placement/PlacementBindinginto OCM Hub. - OCM Hub distributes them to managed clusters.

- OCM Hub updates the statuses of OCM Policies of each managed cluster.

5. C2P periodically fetches the statuses of OCM Policy from OCM Hub and pushes compliance posture summary to GitHub.

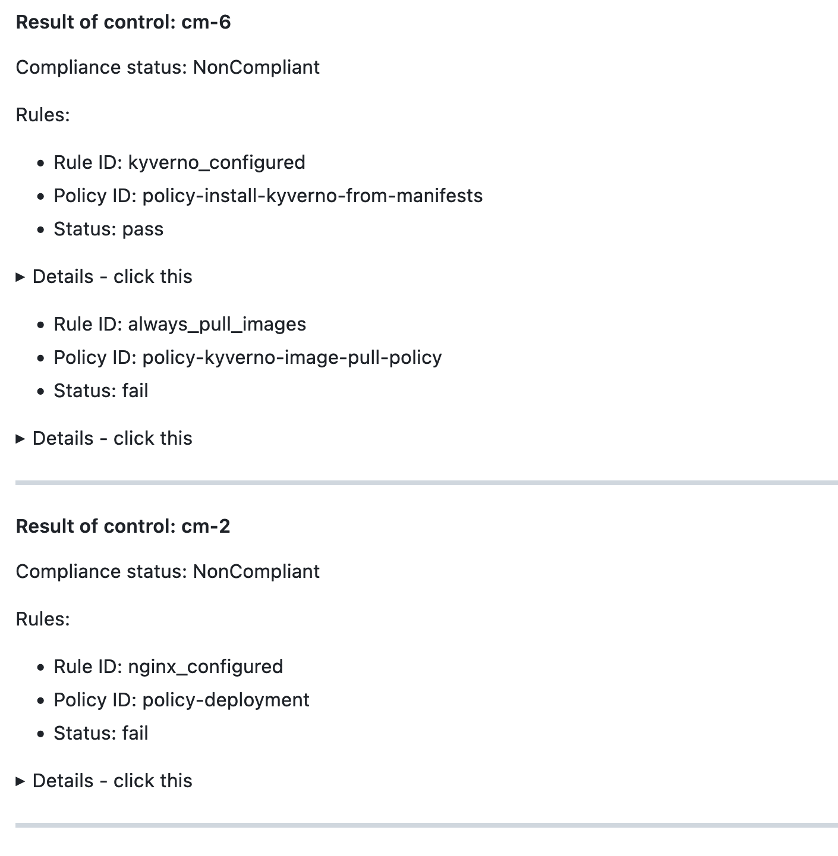

An example compliance posture summary:

6. Compliance officers or auditors check the compliance posture and take appropriate actions.

As a result of the decoupling of Compliance and Policy and bridging them by C2P, each persona can effectively play their role without needing to be aware of the specifics of different Kubernetes Policy Engines.
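Two of the steps above lend themselves to a short sketch: selecting clusters by label (the clusterGroups inventory) and folding per-cluster policy statuses into a posture keyed by control. The Python below is a simplified illustration under stated assumptions. The status layout (`status.status` entries with `clustername` and `compliant`) mirrors how OCM reports policy status on the hub as we understand it. The function names, the sample data, and the summary format are hypothetical and do not reproduce C2P's actual output.

```python
CONTROLS_ANNOTATION = "policy.open-cluster-management.io/controls"


def select_clusters(clusters, match_labels):
    """Return names of clusters whose labels include every pair in match_labels."""
    return [
        name
        for name, labels in clusters.items()
        if all(labels.get(key) == value for key, value in match_labels.items())
    ]


def summarize_posture(policies):
    """Group per-cluster compliance results by the controls each policy declares."""
    summary = {}
    for policy in policies:
        annotations = policy["metadata"].get("annotations", {})
        raw_controls = annotations.get(CONTROLS_ANNOTATION, "")
        controls = [c.strip() for c in raw_controls.split(",") if c.strip()]
        for control in controls:
            entry = summary.setdefault(control, {"compliant": [], "noncompliant": []})
            for cluster_status in policy.get("status", {}).get("status", []):
                # Anything other than "Compliant" is treated as noncompliant here.
                is_ok = cluster_status.get("compliant") == "Compliant"
                entry["compliant" if is_ok else "noncompliant"].append(
                    cluster_status["clustername"]
                )
    return summary


# Hypothetical inventory and hub-side policy status.
clusters = {
    "cluster1": {"level": "nist-high"},
    "cluster2": {"level": "dev"},
    "cluster3": {"level": "nist-high"},
}
selected = select_clusters(clusters, {"level": "nist-high"})

policies = [
    {
        "metadata": {
            "name": "policy-kyverno-image-pull-policy",
            "annotations": {CONTROLS_ANNOTATION: "cm-6"},
        },
        "status": {
            "status": [
                {"clustername": "cluster1", "compliant": "Compliant"},
                {"clustername": "cluster3", "compliant": "NonCompliant"},
            ]
        },
    }
]
posture = summarize_posture(policies)
```

Keying the summary by control rather than by policy keeps the result in the vocabulary of the compliance officer, who does not need to know which policy engine produced each finding.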

Conclusion

In this blog, we detailed the implementation of a Compliance Policy Administration Center that integrates Regulatory Programs with the supporting Kubernetes declarative policies, and showed how this design can be applied to compliance management of a multi-cluster Kubernetes environment.

Coming Next

Besides configuration policies, regulatory programs also require complex processes and procedures whose validation entails batch processing, such as that provided by Policy Validation Points that support imperative languages for policies. In our next blog, we will introduce another CPAC design for integrating PVPs that support imperative policies, such as Auditree.

Learn More

If you would like to use our C2P tool, see the compliance-to-policy GitHub project. For our open-source Trestle SDK, see compliance-trestle to learn about the various Trestle CLIs and their usage. For more details on the markdown formats and commands for authoring various compliance artifacts, see this tutorial from Trestle.

Below are the links to other articles in this series:

- Compliance Automated Standard Solution (COMPASS), Part 1: Personas and Roles

- Compliance Automated Standard Solution (COMPASS), Part 2: Trestle SDK

- Compliance Automated Standard Solution (COMPASS), Part 3: Artifacts and Personas

- Compliance Automated Standard Solution (COMPASS), Part 4: Topologies of Compliance Policy Administration Centers

- Compliance Automated Standard Solution (COMPASS), Part 5: A Lack of Network Boundaries Invites a Lack of Compliance

- Compliance Automated Standard Solution (COMPASS), Part 7: Compliance-to-Policy for IT Operation Policies Using Auditree
