Common Mistakes In Performance Testing

A compilation of common performance testing mistakes.


Performance Test Execution and Monitoring

In this article, we highlight a few mistakes to avoid when executing and monitoring a load test. In this phase, virtual user scripts are run according to the number of concurrent users and the workload specified in the non-functional test plan. Monitoring should be enabled at the various system tiers so that issues can be isolated to a particular tier. Performance testing and monitoring is an iterative process, driven by the goal of meeting the performance requirements and SLAs.

The following are common mistakes observed during performance testing that should be avoided:

1. Missing NFRs and SLAs

Non-functional requirements (NFRs) are one of the biggest issues we face in business analysis today. Customers and organizations miss, misunderstand, or misrepresent them more often than any other type of requirement. Everyone on the project/product team must understand what non-functional requirements are, how best to express them, and how much value they add to user stories and to the application/product. Performance engineers need proper input from stakeholders to understand the application architecture, its user behavior, and the application/product performance goals. With that input they can determine the required load, identify performance degradations and bottlenecks, and get them fixed. Every performance engineer, irrespective of years of experience, needs to understand the impact and relevance of each NFR on the specific functional requirement being implemented. The habit of thinking about NFRs needs to be developed from day one of an engineer's career.

The following items are important to understand before starting performance testing:

- A clear overall picture of the goals or vision for building the product or software.

- The cost of not handling NFRs in due course during the development process.

- How to implement and deliver NFRs incrementally through an agile/sprint-based process.

- How the NFRs break down into stories or acceptance criteria, so that sprints can be executed accordingly.

- The end-user perspective on NFRs, which matters most. Performance engineers should be able to uncover implicit requirements by talking to end users.
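
One way to make an NFR testable is to express it as a measurable acceptance criterion that a load test run either passes or fails. The sketch below assumes a hypothetical `NfrCriterion` structure and illustrative thresholds for p95 response time, error rate, and throughput. None of these names or numbers come from a specific tool or project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NfrCriterion:
    """One measurable NFR acceptance criterion (hypothetical structure)."""
    name: str
    metric: str          # key into the measured-results dict
    threshold: float
    higher_is_better: bool = False

    def passes(self, measured: dict[str, float]) -> bool:
        value = measured[self.metric]
        return value >= self.threshold if self.higher_is_better else value <= self.threshold


def evaluate(criteria: list[NfrCriterion], measured: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per criterion, so each result maps back to a story's acceptance criteria."""
    return {c.name: c.passes(measured) for c in criteria}


# Hypothetical NFRs for a checkout story; the thresholds are illustrative only.
checkout_nfrs = [
    NfrCriterion("p95 response time <= 2 s", "p95_seconds", 2.0),
    NfrCriterion("error rate <= 1%", "error_rate", 0.01),
    NfrCriterion("throughput >= 15,000 orders/hour", "orders_per_hour", 15000,
                 higher_is_better=True),
]

if __name__ == "__main__":
    results = {"p95_seconds": 1.8, "error_rate": 0.02, "orders_per_hour": 15200}
    print(evaluate(checkout_nfrs, results))
```

Keeping each criterion as data rather than prose lets the same definitions drive both the sprint's acceptance criteria and the automated check after each run.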

2. Performance Test Results Are Not Validated

Test success and results need to be validated to ensure that the test scripts are actually working and committing transactions to the system/application. Depending on the environment, the performance engineer, developer, or system administrator can verify this by querying the database or by inspecting the flat files/logs that were created. For example, if a load test is designed to create 15,000 orders per hour and runs for one hour, then 15,000 new orders should be visible in the database at the end of the test.
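
The order-count check above can be automated as a post-test assertion. This is a minimal sketch. An in-memory SQLite table stands in for the application database. The `orders` table, the `created_by = 'loadtest'` marker used to tag test data, and the optional `tolerance` parameter are all hypothetical.

```python
import sqlite3


def expected_transactions(rate_per_hour: float, duration_hours: float) -> int:
    """Number of business transactions the workload should have committed."""
    return round(rate_per_hour * duration_hours)


def validate_commits(actual: int, expected: int, tolerance: float = 0.0) -> bool:
    """True if the committed count is within `tolerance` (a fraction) of the target."""
    return abs(actual - expected) <= tolerance * expected


if __name__ == "__main__":
    # Stand-in for the application database: an in-memory table of test orders.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, created_by TEXT)")
    conn.executemany("INSERT INTO orders (created_by) VALUES (?)", [("loadtest",)] * 15_000)
    (actual,) = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE created_by = 'loadtest'"
    ).fetchone()
    expected = expected_transactions(15_000, 1.0)
    print(actual, expected, validate_commits(actual, expected))
```

The default tolerance of zero matches the exact-count expectation. A small non-zero tolerance may be reasonable when ramp-up and ramp-down mean the run does not sustain the full rate for the whole hour.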

3. Workload Characteristics Unknown or Not Finalized

Without workload characterization, performance testing has no clear focus or targets. The workload expected in production may then be very different from what is tested, resulting in incorrect analysis and recommendations. Performance engineers must also ensure that all performance testing is done in a test environment that matches production, to avoid incorrect results and recommendations.

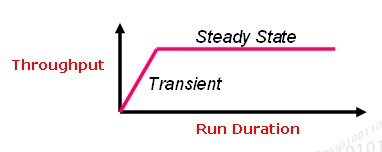
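
A workload model turns a characterized production workload into a test configuration. A common way to size virtual user groups is Little's Law, N = X * (R + Z), where X is throughput, R is response time, and Z is think time. The sketch below assumes a hypothetical transaction mix, response times, and think time. Real values should come from production logs or analytics.

```python
import math


def virtual_users(tx_per_hour: float, response_s: float, think_s: float) -> int:
    """Little's Law: N = X * (R + Z), with X converted to transactions per second."""
    x_per_s = tx_per_hour / 3600.0
    # Round up so the user count is never below what the target rate requires.
    return math.ceil(x_per_s * (response_s + think_s))


def workload_model(total_tx_per_hour: float, mix: dict[str, float],
                   response_s: dict[str, float], think_s: float) -> dict[str, int]:
    """Split a peak-hour target across transaction types and size each user group."""
    if not math.isclose(sum(mix.values()), 1.0):
        raise ValueError("transaction mix must sum to 100%")
    return {
        name: virtual_users(total_tx_per_hour * share, response_s[name], think_s)
        for name, share in mix.items()
    }


if __name__ == "__main__":
    # Hypothetical peak-hour profile: 36,000 transactions/hour split 60/30/10.
    mix = {"browse": 0.6, "search": 0.3, "checkout": 0.1}
    response = {"browse": 1.0, "search": 2.0, "checkout": 3.0}
    print(workload_model(36_000, mix, response, think_s=9.0))
    # {'browse': 60, 'search': 33, 'checkout': 12}
```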

4. Load Test Duration Kept Too Short

All components within a computer system/application go through an initial transient state for a certain period, followed by a steady state in which they do effective work. This inherent behavior needs to be considered when planning any performance test. To ensure correct results, the test must run long enough that results can be captured during the steady state alone, not during the transient state.
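
You can capture results only during the steady state by discarding samples from the ramp-up and ramp-down windows before computing statistics. The sketch below assumes that samples are `(timestamp_s, response_s)` pairs. It uses a deliberately simple, hypothetical steady-state heuristic: each fixed-size window's mean must stay within 10% of the overall mean.

```python
from statistics import mean


def steady_state_samples(samples, ramp_up_s, ramp_down_s, test_end_s):
    """Keep (timestamp_s, response_s) pairs recorded after ramp-up and before ramp-down."""
    return [(t, r) for t, r in samples if ramp_up_s <= t <= test_end_s - ramp_down_s]


def is_steady(samples, window_s, tolerance=0.10):
    """Heuristic: every consecutive window's mean response time is within
    `tolerance` (as a fraction) of the overall mean."""
    overall = mean(r for _, r in samples)
    start = samples[0][0]
    windows = {}
    for t, r in samples:
        windows.setdefault(int((t - start) // window_s), []).append(r)
    return all(abs(mean(w) - overall) <= tolerance * overall for w in windows.values())


if __name__ == "__main__":
    # Synthetic 10-minute run: slow responses during a 60 s warm-up, then 1.0 s.
    samples = [(t, 3.0 if t < 60 else 1.0) for t in range(600)]
    steady = steady_state_samples(samples, ramp_up_s=60, ramp_down_s=30, test_end_s=600)
    print(is_steady(samples, window_s=60), is_steady(steady, window_s=60))  # False True
```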

5. Test Data Required For Load Test Is Not Sufficiently Populated

It is critical that enough data (the volume expected in production) is loaded into the database to ensure the correctness of results. For example, a query that takes less than 1 second on a 10 GB database may take more than 10 seconds on a 100 GB database. Both the existing amount of data and its growth rate must be considered when loading the database for load test purposes.
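
Accounting for the growth rate is a compound-growth calculation: volume after n periods = current volume * (1 + g)^n. The sketch below assumes a constant per-period growth rate, which is a simplification. The figures in the usage line are illustrative and do not come from the article.

```python
import math


def projected_volume(current: float, growth_rate: float, periods: int) -> float:
    """Compound growth: current * (1 + growth_rate) ** periods."""
    return current * (1.0 + growth_rate) ** periods


def rows_to_generate(current_rows: int, growth_rate: float, periods: int) -> int:
    """Extra synthetic rows needed so the test database matches the projection."""
    target = math.ceil(projected_volume(current_rows, growth_rate, periods))
    return max(0, target - current_rows)


if __name__ == "__main__":
    # Illustrative: a 10 GB database growing 5% per month, sized for 24 months ahead.
    print(f"{projected_volume(10.0, 0.05, 24):.1f} GB")  # about 32.3 GB
```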

6. Gap Between Test And Production Infrastructure

There is no direct mathematical formula for extrapolating results observed on a lower configuration to a higher one. For example, a web page that takes four seconds to load on a one-CPU server will not necessarily load in one second on a four-CPU server. It is therefore recommended that performance testing be carried out in an environment comparable to production.
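
One reason a four-CPU server need not be four times faster is that part of the work is serial. Amdahl's law, S(n) = 1 / ((1 - p) + p / n), captures this for a workload whose parallelizable fraction is p. It is not a reliable extrapolation formula either, because p is rarely known and the law ignores contention, I/O, and memory effects. The sketch below only illustrates why linear scaling should not be assumed, using an assumed p = 0.8.

```python
def amdahl_speedup(parallel_fraction: float, cpus: int) -> float:
    """Amdahl's law: S(n) = 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cpus)


def projected_time(baseline_s: float, parallel_fraction: float, cpus: int) -> float:
    """Idealized time on `cpus` CPUs given the single-CPU baseline."""
    return baseline_s / amdahl_speedup(parallel_fraction, cpus)


if __name__ == "__main__":
    # Assumed: 80% of the 4-second page load parallelizes perfectly.
    print(round(projected_time(4.0, 0.8, 4), 2))  # 1.6, not 1.0
```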

7. Network Bandwidth Not Simulated

The location of end users needs to be considered during performance testing. An end user may be accessing the application through a dial-up modem (at most 56 Kbps) or over a WAN (e.g., 10 Mbps). Results obtained by testing the application over a LAN (10/100 Mbps) will then be very different from what users observe in production. Performance engineers should use the network emulation capabilities available in their tools to ensure that all such use cases are covered during load testing.
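
A back-of-the-envelope estimate shows why LAN results can understate user-perceived response time. Transfer time is roughly the payload in bits divided by bandwidth, plus one round-trip time per sequential request. The model below deliberately ignores TCP slow start, packet loss, and protocol overhead. The page size, round-trip times, and request count are assumed values.

```python
# Hypothetical network profiles: (bandwidth in bits/s, round-trip time in seconds).
PROFILES = {
    "LAN 100 Mbps": (100e6, 0.001),
    "WAN 10 Mbps": (10e6, 0.050),
    "Dial-up 56 Kbps": (56e3, 0.150),
}


def transfer_time_s(payload_bytes: int, bandwidth_bps: float, rtt_s: float,
                    sequential_requests: int) -> float:
    """Serialization time plus one RTT per sequential request (lower-bound estimate)."""
    return payload_bytes * 8 / bandwidth_bps + rtt_s * sequential_requests


if __name__ == "__main__":
    # Assumed page: 500 KB delivered over 10 sequential requests.
    for name, (bw, rtt) in PROFILES.items():
        print(f"{name}: {transfer_time_s(500_000, bw, rtt, 10):.2f} s")
```

Under these assumptions the same page takes about 0.05 s on the LAN, 0.9 s over the WAN, and more than a minute on dial-up.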

8. Testing Schedules Are Underestimated

It is generally assumed that scripting, testing, monitoring, and analysis do not take much time. This assumption is incorrect. Test environment setup, data population, creating robust scripts, establishing monitoring, and in-depth analysis are all time-consuming activities. Enough time and effort must be allocated in the project plan, estimated in consultation with the performance SME and performance engineers.

The following matrix summarizes various aspects related to performance testing:

| | GOOD | BETTER | BEST |
|---|---|---|---|
| INFRASTRUCTURE | 50% of PROD | DR Site | 100% of PROD |
| NETWORK | Shared LAN | Separate LAN | WAN Simulator |
| TEST TOOL | Homegrown | Open Source | Industry Standard |
| TEST TYPES | Load/Volume | Stress | Availability and Reliability |
| LOAD INJECTION | Synthetic | Replay | Hybrid |
| TEST UTILITY | Profiling | Optimization | Capacity Planning |
| MONITORING | Manual | Shell Scripts | Industry Standard |
| TEST FREQUENCY | Periodic | Based On Growth | Every Release |
| TEST DURATION | 2 weeks | 4 weeks | > 4 weeks |

9. Performance Analysis And Tuning

The most critical aspect of the performance testing exercise is determining the performance bottlenecks within a system/application. All performance test results and monitoring output need to be thoroughly correlated and analyzed to determine the root cause of performance issues. Performance leads, performance engineers, and experts in specific technologies (e.g., Oracle DBA, Linux administrator, network specialist) should be involved in generating recommendations to improve performance. Any recommendation applied to a system/application should be rigorously tested, both to measure the benefit achieved and to ensure that the change has no side effects. Testing, analysis, and tuning form an iterative process that should ideally continue until all performance targets or SLAs are met.

10. Publishing Performance Test Reports

All testing, analysis, and tuning activities should be documented and published as test result documents to facilitate discussion and knowledge sharing between teams and management. At the end of the performance testing exercise, a final report should be published summarizing the following:

- Original Non-Functional Test plan

- Deviations from test plan

- Results and Analysis

- Recommendations suggested and applied

- Percentage of improvement achieved (e.g. in response times, workload, user concurrency, etc.)

- Capacity Validation

- Gap Analysis

- Future Steps
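
The "percentage of improvement achieved" in the report is a relative change against the baseline run. The only subtlety is direction: lower is better for response time, while higher is better for throughput or user concurrency. A minimal sketch, with illustrative before/after values:

```python
def pct_improvement(before: float, after: float, lower_is_better: bool) -> float:
    """Relative improvement over the baseline, in percent (negative means a regression)."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    change = (before - after) if lower_is_better else (after - before)
    return 100.0 * change / before


if __name__ == "__main__":
    # Illustrative numbers only, not results from a real test.
    print(pct_improvement(4.0, 2.5, lower_is_better=True))         # 37.5 (response time, s)
    print(pct_improvement(12_000, 15_000, lower_is_better=False))  # 25.0 (orders/hour)
```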

Concluding Performance Testing

There will always be some bottleneck in the system. The performance engineer must keep identifying and removing bottlenecks until performance is acceptable. It is rarely feasible to fix every performance-related issue by the planned release date, so the defects that must be fixed need to be prioritized. Some organizations spend not months but years fine-tuning a system. Deciding which performance defects need to be fixed is extremely tricky. Performance test teams need to consider every aspect of the system and gather opinions from everyone from business analysts to developers. Together, they can identify potential issues with business workflows and the approximate time needed to resolve them.

Finally, it is sometimes difficult to consider all these points when planning performance testing, but having this information always helps in planning effective performance testing and tuning.

Opinions expressed by DZone contributors are their own.
