CI/CD Pipeline Spanning Multiple OpenShift Clusters

This blog will cover how to create a CI/CD pipeline that spans multiple OpenShift clusters and how an example of a Jenkins-based pipeline.

Join the DZone community and get the full member experience.

Join For Freethis blog will cover how to create a ci/cd pipeline that spans multiple openshift clusters. it will show an example of a jenkins-based pipeline, and design a pipeline that uses tekton.

traditionally, ci/cd pipelines were implemented on top of bare metal servers and virtual machines. container platforms like kubernetes and openshift appeared on the scene only later on. as more and more workloads are migrating to openshift, ci/cd pipelines are headed in the same direction. pipeline jobs are executed in containers in the cluster.

in the real world, companies don't deploy a single openshift cluster but run multiple clusters. why is that? they want to run their workloads in different public clouds as well as on-premise. or, if they leverage a single platform provider, they want to run in multiple regions. sometimes there is a need for multiple clusters in a single region, too. for example, when each cluster is deployed into a different security zone.

as there are plenty of reasons to use multiple openshift clusters, there is a need to create ci/cd pipelines that work across those clusters. the next sections are going to design such pipelines.

ci/cd pipeline using jenkins

jenkins is a legend among ci/cd tools. i remember meeting jenkins back in the day when it was called hudson but that's old history. how can we build a jenkins pipeline that spans multiple openshift clusters? an important design goal for the pipeline is to achieve a single dashboard that can display the output of all jobs involved in the pipeline.

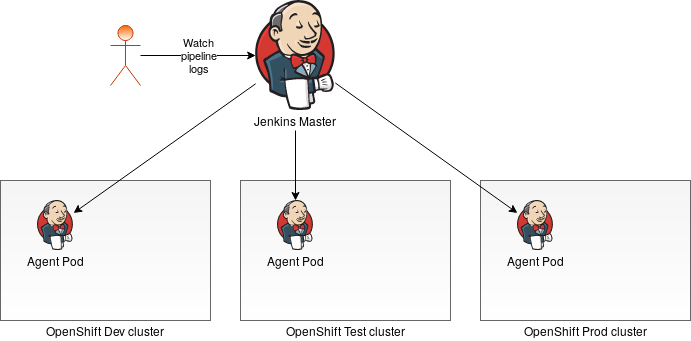

i gave it some thought and realized that achieving a single dashboard pretty much implies using a single jenkins master. this jenkins master is connected with each of the openshift clusters. during the pipeline execution, jenkins master can run individual tasks on any of the clusters. the job output logs are collected and sent to the master as usual. if we consider having three openshift clusters dev, test, and prod, the following diagram depicts the approach:

the jenkins kubernetes plugin is a perfect plugin for connecting jenkins to openshift. it allows the jenkins master to create ephemeral workers on the cluster. each cluster can be assigned a different node label. you can run each stage of your pipeline on a different cluster by specifying the label. a simple pipeline definition for our example would look like this:

stage ('build') {

node ("dev") {

// running on dev cluster

}

}

stage ('test') {

node ("test") {

// running on test cluster

}

}

stage ('prod') {

node ("prod") {

// running on prod cluster

}

}

openshift comes with a jenkins template which can be found in the

openshift

project. this template allows you to create a jenkins master that is pre-configured to spin up worker pods on the same cluster. further effort will be needed to connect this master to additional openshift clusters. a tricky part of this set up is networking. jenkins worker pod, after it starts up, connects back to the jenkins master. this requires the master to be reachable from the worker running on any of the openshift clusters.

one last point i wanted to discuss is security. as long as the jenkins master can spin up worker pods on openshift, it can execute arbitrary code on those workers. the openshift cluster has no means to control what code the jenkins worker will run. the job definition is managed by jenkins and it is solely up to the access controls in jenkins to enforce which job is allowed to execute on which cluster.

kubernetes-native tekton pipeline

in this section, we are going to use tekton to implement the ci/cd pipeline. in contrast to jenkins, tekton is a kubernetes-native solution. it is implemented using kubernetes building blocks and it is tightly integrated with kubernetes. a single kubernetes cluster is a natural boundary for tekton. so, how can we build a tekton pipeline that spans multiple openshift clusters?

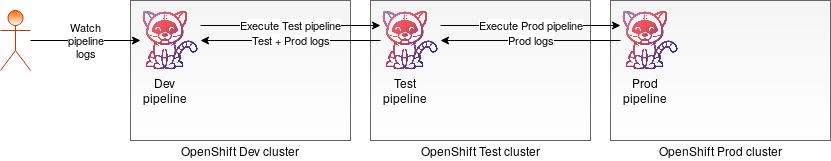

i came up with an idea of composing the tekton pipelines. to compose multiple pipelines into a single pipeline, i implemented the execute-remote-pipeline task that can execute a tekton pipeline located on a remote openshift cluster. the task will tail the output of the remote pipeline while the remote pipeline is executing. with the help of this task, i can now combine tekton pipelines across openshift clusters and run them as a single pipeline. for example, the diagram below shows a composition of three pipelines. each of the pipelines is located on a different openshift cluster dev, test, and prod:

the execution of this pipeline is started on the dev cluster. the dev pipeline will trigger the test pipeline which will in turn trigger the prod pipeline. the combined logs can be followed on the terminal:

xxxxxxxxxx

$ tkn pipeline start dev --showlog

pipelinerun started: dev-run-bd5fs

waiting for logs to be available...

[execute-remote-pipeline : execute-remote-pipeline-step] logged into "https://api.cluster-affc.sandbox1480.opentlc.com:6443" as "system:serviceaccount:test-pipeline:pipeline-starter" using the token provided.

[execute-remote-pipeline : execute-remote-pipeline-step]

[execute-remote-pipeline : execute-remote-pipeline-step] you have one project on this server: "test-pipeline"

[execute-remote-pipeline : execute-remote-pipeline-step]

[execute-remote-pipeline : execute-remote-pipeline-step] using project "test-pipeline".

[execute-remote-pipeline : execute-remote-pipeline-step] welcome! see 'oc help' to get started.

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step] logged into "https://api.cluster-affc.sandbox1480.opentlc.com:6443" as "system:serviceaccount:prod-pipeline:pipeline-starter" using the token provided.

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step]

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step] you have one project on this server: "prod-pipeline"

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step]

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step] using project "prod-pipeline".

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step] welcome! see 'oc help' to get started.

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step] [prod : prod-step] running on prod cluster

[execute-remote-pipeline : execute-remote-pipeline-step] [execute-remote-pipeline : execute-remote-pipeline-step]

[execute-remote-pipeline : execute-remote-pipeline-step]

note that this example is showing a cascading execution of tekton pipelines. another way of composing pipelines would be executing multiple remote pipelines in sequence.

before moving on to the final section of this blog, let's briefly discuss the pipeline composition in terms of security. as a kubernetes-native solution, tekton's access control is managed by rbac. before the task running on a local cluster can trigger a pipeline on a remote cluster, it has to be granted appropriate permissions. these permissions are defined by the remote cluster.

this way a remote cluster running in a higher environment (prod) can impose access restrictions on the tasks running in the lower environment (test). for example, a prod cluster will allow the test cluster to only trigger pre-defined production pipelines. the test cluster won't have permissions to create new pipelines in the prod cluster.

conclusion

this blog showed how to create ci/cd pipelines that span multiple openshift clusters using jenkins and tekton. it designed the pipelines and discussed some of the security aspects. the execute-remote-pipeline tekton task was used to compose pipelines located on different openshift clusters into a single pipeline.

needless to say, containerized pipelines work the same way on any openshift cluster regardless of whether the cluster itself is running on top of a public cloud or on-premise. the vision of the hybrid cloud is well showcased here.

do you create pipelines that span multiple clusters? would you like to share some of your design ideas? i would be happy to hear about your thoughts. please, feel free to leave your comments in the comment section below.

Published at DZone with permission of Ales Nosek. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments