Building Mancala Game in Microservices Using Spring Boot (Part 2: Mancala API Implementation)

The next step!


In the previous article, "Building Mancala Game in Microservices Using Spring Boot (Part 1: Solution Architecture)", I explained the overall architecture of the solution for implementing the Mancala game using a microservices approach.

You may also like: Building Mancala Game in Microservices Using Spring Boot (Part 3: Developing Web Client Microservice With Vaadin)

In this article, I am going to discuss the detailed implementation of the "mancala-api" project, the Spring Boot backend API for this game. The design of the 'mancala-api' microservice follows the SOLID principles; therefore, you can expect to see many classes and interfaces, each designed to perform a single operation within the application.

The complete source code for this article is available in my GitHub repository.

To build and run this application, please follow the instructions I have provided here.

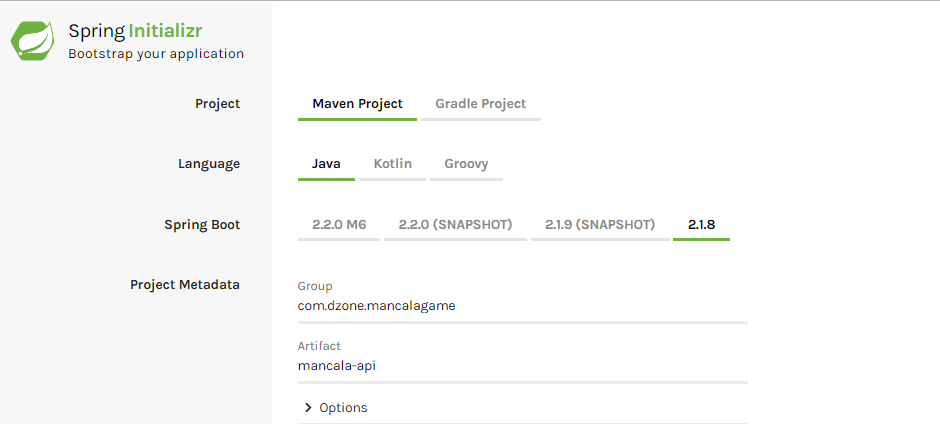

1 — Spring Initializr

As always, the first step for many developers when building a Spring Boot application is to generate the project with Spring Initializr at start.spring.io:

- Group: com.dzone.mancalagame

- Artifact: mancala-api

- Select the following dependencies:

Now, let's dive into the game API implementation details:

2 — Data Models

Here are the domain classes identified for this project:

2.1 — KalahaConstants

This class contains all the constants I have defined for the 'mancala-api' implementation:

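public class KalahaConstants {

// Pit ids run from 1 to 14: pits 1..6 belong to player A and pit 7 is player A's house;

// pits 8..13 belong to player B and pit 14 is player B's house

public static final int leftPitHouseId = 14;

public static final int totalPits = 14;

public static final int rightPitHouseId = 7;

public static final int emptyStone = 0;

// number of stones each regular pit starts with

public static final int defaultPitStones = 6;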

public static final int firstPitPlayerA = 1;

public static final int secondPitPlayerA = 2;

public static final int thirdPitPlayerA = 3;

public static final int forthPitPlayerA = 4;

public static final int fifthPitPlayerA = 5;

public static final int sixthPitPlayerA = 6;

public static final int firstPitPlayerB = 8;

public static final int secondPitPlayerB = 9;

public static final int thirdPitPlayerB = 10;

public static final int forthPitPlayerB = 11;

public static final int fifthPitPlayerB = 12;

public static final int sixthPitPlayerB = 13;

}

2.2 — KalahaGame

This class contains all information about the Mancala game, including the number of stones, the player turn, and the collection of KalahaPit objects corresponding to the pits of the real game.

@Document(collection = "games")

@Setter

@Getter

public class KalahaGame implements Serializable{

@Id

private String id;

private List<KalahaPit> pits;

private PlayerTurns playerTurn;

@JsonIgnore

private int currentPitIndex;

public KalahaGame() {

this (defaultPitStones);

}

public KalahaGame(int pitStones) {

this.pits = Arrays.asList(

new KalahaPit(firstPitPlayerA, pitStones),

new KalahaPit(secondPitPlayerA, pitStones),

new KalahaPit(thirdPitPlayerA, pitStones),

new KalahaPit(forthPitPlayerA, pitStones),

new KalahaPit(fifthPitPlayerA, pitStones),

new KalahaPit(sixthPitPlayerA, pitStones),

new KalahaHouse(rightPitHouseId),

new KalahaPit(firstPitPlayerB, pitStones),

new KalahaPit(secondPitPlayerB, pitStones),

new KalahaPit(thirdPitPlayerB, pitStones),

new KalahaPit(forthPitPlayerB, pitStones),

new KalahaPit(fifthPitPlayerB, pitStones),

new KalahaPit(sixthPitPlayerB, pitStones),

new KalahaHouse(leftPitHouseId));

}

public KalahaGame(String id, Integer pitStones) {

this (pitStones);

this.id = id;

}

// returns the corresponding pit of particular index

public KalahaPit getPit(Integer pitIndex) throws MancalaApiException {

try {

return this.pits.get(pitIndex-1);

}catch (Exception e){

throw new MancalaApiException("Invalid pitIndex:"+ pitIndex +" has given!");

}

}

@Override

public String toString() {

return "KalahaGame{" +

"id=" + id +

", pits=" + pits +

", playerTurn=" + playerTurn +

'}';

}

}

2.3 — KalahaPit

This class holds information about each pit, including the pit index and the number of stones in that pit.

@AllArgsConstructor

@NoArgsConstructor

@Data

public class KalahaPit implements Serializable {

private Integer id;

private Integer stones;

@JsonIgnore

public Boolean isEmpty (){

return this.stones == 0;

}

public void clear (){

this.stones = 0;

}

public void sow () {

this.stones++;

}

public void addStones (Integer stones){

this.stones+= stones;

}

@Override

public String toString() {

return id.toString() +

":" +

stones.toString() ;

}

}

2.4 — KalahaHouse

It's a subclass of KalahaPit that marks a pit as a house; every house starts with zero stones.

public class KalahaHouse extends KalahaPit {

public KalahaHouse(Integer id) {

super(id , 0);

}

}

3 — API Design

Below are the two interfaces designed for this game, which together cover all operations of this microservice:

1 — KalahaGameApi is responsible for creating Mancala game instances based on the number of stones in each pit.

public interface KalahaGameApi {

KalahaGame createGame(int stones);

}

2 — KalahaGameSowApi is responsible for providing the sowing functionality of the game for a specific pit index.

public interface KalahaGameSowApi {

KalahaGame sow (KalahaGame game, int pitIndex);

}
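To make the contract behind KalahaGameSowApi concrete, here is a minimal, dependency-free sketch of the same rules applied to a plain int[] board. It is not the article's implementation: the class KalahaBoardSketch and its helpers are hypothetical, it assumes the pit numbering from KalahaConstants (pits 1..6 and house 7 for player A, pits 8..13 and house 14 for player B), and it assumes the standard Kalah rule that a capture only happens in an empty pit on the mover's own side.

```java
import java.util.Arrays;

public class KalahaBoardSketch {
    static final int TOTAL_PITS = 14;
    static final int HOUSE_A = 7;   // rightPitHouseId in KalahaConstants
    static final int HOUSE_B = 14;  // leftPitHouseId in KalahaConstants

    // index 0 is unused so that array indexes match the 1-based pit ids
    final int[] pits = new int[TOTAL_PITS + 1];
    Boolean playerATurn = null;     // null until the first move, as in MancalaSowingService

    KalahaBoardSketch(int stonesPerPit) {
        for (int id = 1; id <= TOTAL_PITS; id++) {
            pits[id] = isHouse(id) ? 0 : stonesPerPit;
        }
    }

    static boolean isHouse(int id) { return id == HOUSE_A || id == HOUSE_B; }

    // same range check as the controller: 1..6 or 8..13
    static boolean isValidPit(int id) { return id >= 1 && id < HOUSE_B && id != HOUSE_A; }

    static boolean ownedByA(int id) { return id < HOUSE_A; }

    // facing pits always add up to 14: 1 <-> 13, 6 <-> 8
    static int opposite(int id) { return TOTAL_PITS - id; }

    // next slot in sowing order, wrapping from 14 back to 1
    static int next(int id) { return id % TOTAL_PITS + 1; }

    /** Sows the given pit; returns false and leaves the pits unchanged for an illegal move. */
    boolean sow(int pitId) {
        if (!isValidPit(pitId)) return false;
        if (playerATurn == null) playerATurn = ownedByA(pitId); // the first move picks the player
        if (ownedByA(pitId) != playerATurn || pits[pitId] == 0) return false;

        int stones = pits[pitId];
        pits[pitId] = 0;
        int ownHouse = playerATurn ? HOUSE_A : HOUSE_B;
        int opponentHouse = playerATurn ? HOUSE_B : HOUSE_A;
        int current = pitId;
        while (stones > 0) {
            current = next(current);
            if (current == opponentHouse) continue; // never sow into the opponent's house
            pits[current]++;
            stones--;
        }
        // capture: the last stone landed in one of the mover's own pits that was empty
        if (!isHouse(current) && ownedByA(current) == playerATurn
                && pits[current] == 1 && pits[opposite(current)] > 0) {
            pits[ownHouse] += pits[opposite(current)] + 1;
            pits[opposite(current)] = 0;
            pits[current] = 0;
        }
        // the mover keeps the turn only when the last stone lands in their own house
        if (current != ownHouse) playerATurn = !playerATurn;
        return true;
    }

    public static void main(String[] args) {
        KalahaBoardSketch board = new KalahaBoardSketch(6);
        board.sow(1); // 6 stones go to pits 2..6 and house 7, so player A moves again
        System.out.println(Arrays.toString(board.pits) + " playerATurn=" + board.playerATurn);
    }
}
```

Keeping the rules in a class with no Spring or MongoDB dependencies makes them easy to unit-test in isolation, which is the same motivation behind separating game creation from sowing.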

4 — Data Repositories With MongoDB

To persist game data, we use MongoDB, a document-based data store. To enable Spring Data MongoDB, we need to add the following dependency to the pom.xml file:

<!-- MongoDB -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-mongodb</artifactId>

</dependency>

We also need to add @EnableMongoRepositories to our Spring Boot application startup class in order to use Spring Data repositories for MongoDB:

@EnableMongoRepositories

@EnableCaching

@EnableDiscoveryClient

@SpringBootApplication

public class MancalaGameApiApplication {

public static void main(String[] args) {

SpringApplication.run(MancalaGameApiApplication.class, args);

}

}

KalahaGameRepository

To use Spring Data's MongoDB repository support, we define our repository interface by extending the MongoRepository interface, as shown below:

public interface KalahaGameRepository extends MongoRepository<KalahaGame, String> {

}

5 — Game Persistence Service Implementation

The MancalaGameService class has been designed to perform persistence operations against a MongoDB database and to use Redis in-memory data storage as our caching mechanism:

@Service

public class MancalaGameService implements KalahaGameApi {

@Autowired

private KalahaGameRepository kalahaGameRepository;

@Override

public KalahaGame createGame(int pitStones) {

KalahaGame kalahaGame = new KalahaGame(pitStones);

kalahaGameRepository.save(kalahaGame);

return kalahaGame;

}

// loads the game instance from the Cache if game instance was found

@Cacheable (value = "kalahGames", key = "#id" , unless = "#result == null")

public KalahaGame loadGame (String id) throws ResourceNotFoundException {

Optional<KalahaGame> gameOptional = kalahaGameRepository.findById(id);

if (!gameOptional.isPresent())

throw new ResourceNotFoundException("Game id " + id + " not found!");

return gameOptional.get();

}

// put the updated game instance into cache as well as data store

@CachePut(value = "kalahGames", key = "#kalahaGame.id")

public KalahaGame updateGame (KalahaGame kalahaGame){

kalahaGame = kalahaGameRepository.save(kalahaGame);

return kalahaGame;

}

}

Because loadGame is annotated with @Cacheable, Spring first tries to find the game instance in the cache and returns it if it is found; otherwise, it executes the method, retrieves the game instance from the MongoDB database, and stores the result in the cache before returning it.

The updateGame method, annotated with @CachePut, always executes and updates the game data in both MongoDB and the Redis cache. It is invoked on every request to the sow endpoint of the 'mancala-api' microservice, so the cache always holds the latest game state for fast retrieval.
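The behavior of the two annotations can be approximated in plain Java. The sketch below is illustrative only: two HashMaps stand in for Redis and MongoDB, the game state is reduced to a String, and the class name CacheAsideSketch is hypothetical. It shows why repeated loadGame calls read the database only once, and why updateGame keeps the cached copy current.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class CacheAsideSketch {
    final Map<String, String> database = new HashMap<>(); // stands in for MongoDB
    final Map<String, String> cache = new HashMap<>();    // stands in for Redis
    int databaseReads = 0;

    // Roughly what @Cacheable(key = "#id") adds around loadGame
    String loadGame(String id) {
        String cached = cache.get(id);
        if (cached != null) {
            return cached;                 // cache hit: the database is not touched
        }
        databaseReads++;
        String game = Optional.ofNullable(database.get(id))
                .orElseThrow(() -> new IllegalArgumentException("Game id " + id + " not found!"));
        cache.put(id, game);               // only successful results are cached
        return game;
    }

    // Roughly what @CachePut(key = "#kalahaGame.id") adds around updateGame: always write both
    String updateGame(String id, String game) {
        database.put(id, game);
        cache.put(id, game);
        return game;
    }

    public static void main(String[] args) {
        CacheAsideSketch service = new CacheAsideSketch();
        service.database.put("g1", "board-v1");
        service.loadGame("g1");
        service.loadGame("g1");
        System.out.println("database reads: " + service.databaseReads);
        service.updateGame("g1", "board-v2");
        System.out.println(service.loadGame("g1") + ", database reads: " + service.databaseReads);
    }
}
```

In the real service, Spring's cache proxy performs the lookup and the put around the method call, so the method bodies only contain the database logic.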

6 — Mancala Game Logic Implementation

The MancalaSowingService class has been designed to implement the sowing functionality of the Mancala game. It implements a simple interface designed for this purpose, called KalahaGameSowApi:

public interface KalahaGameSowApi {

KalahaGame sow (KalahaGame game, int pitIndex);

}

The implementation of the above interface is provided below:

@Service

public class MancalaSowingService implements KalahaGameSowApi {

// This method performs sowing on a specific pit index

@Override

public KalahaGame sow(KalahaGame game, int requestedPitId) {

// No movement on House pits

if (requestedPitId == KalahaConstants.rightPitHouseId || requestedPitId == KalahaConstants.leftPitHouseId)

return game;

// we set the player turn for the first move of the game based on the pit id

if (game.getPlayerTurn() == null) {

if (requestedPitId < KalahaConstants.rightPitHouseId)

game.setPlayerTurn(PlayerTurns.PlayerA);

else

game.setPlayerTurn(PlayerTurns.PlayerB);

}

// we need to check if request comes from the right player otherwise we do not sow the game. In other words,

// we keep the turn for the correct player

if (game.getPlayerTurn() == PlayerTurns.PlayerA && requestedPitId > KalahaConstants.rightPitHouseId ||

game.getPlayerTurn() == PlayerTurns.PlayerB && requestedPitId < KalahaConstants.rightPitHouseId)

return game;

KalahaPit selectedPit = game.getPit(requestedPitId);

int stones = selectedPit.getStones();

// No movement for empty Pits

if (stones == KalahaConstants.emptyStone)

return game;

selectedPit.setStones(KalahaConstants.emptyStone);

// keep the pit index, used for sowing the stones in right pits

game.setCurrentPitIndex(requestedPitId);

// simply sow all stones except the last one

for (int i = 0; i < stones - 1; i++) {

sowRight(game,false);

}

// sow the last stone, which may capture the opposite pit's stones

sowRight(game,true);

int currentPitIndex = game.getCurrentPitIndex();

// we switch the turn if the last sow was not on any of pit houses (left or right)

if (currentPitIndex != KalahaConstants.rightPitHouseId && currentPitIndex != KalahaConstants.leftPitHouseId)

game.setPlayerTurn(nextTurn(game.getPlayerTurn()));

return game;

}

// sow the game one pit to the right

private void sowRight(KalahaGame game, Boolean lastStone) {

int currentPitIndex = game.getCurrentPitIndex() % KalahaConstants.totalPits + 1;

PlayerTurns playerTurn = game.getPlayerTurn();

if ((currentPitIndex == KalahaConstants.rightPitHouseId && playerTurn == PlayerTurns.PlayerB) ||

(currentPitIndex == KalahaConstants.leftPitHouseId && playerTurn == PlayerTurns.PlayerA))

currentPitIndex = currentPitIndex % KalahaConstants.totalPits + 1;

game.setCurrentPitIndex(currentPitIndex);

KalahaPit targetPit = game.getPit(currentPitIndex);

if (!lastStone || currentPitIndex == KalahaConstants.rightPitHouseId || currentPitIndex == KalahaConstants.leftPitHouseId) {

targetPit.sow();

return;

}

// It's the last stone and we need to check the opposite player's pit status

KalahaPit oppositePit = game.getPit(KalahaConstants.totalPits - currentPitIndex);

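// we are sowing the last stone into an empty pit that belongs to the current player and the opposite pit is not empty,

// therefore, we collect the opposite pit's stones plus the last stone, add them to the current player's House pit and

// make the opposite pit empty (landing in an empty pit on the opponent's side captures nothing)

boolean ownPit = (playerTurn == PlayerTurns.PlayerA && currentPitIndex < KalahaConstants.rightPitHouseId)

|| (playerTurn == PlayerTurns.PlayerB && currentPitIndex > KalahaConstants.rightPitHouseId);

if (ownPit && targetPit.isEmpty() && !oppositePit.isEmpty()) {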

Integer oppositeStones = oppositePit.getStones();

oppositePit.clear();

Integer pitHouseIndex = currentPitIndex < KalahaConstants.rightPitHouseId ? KalahaConstants.rightPitHouseId : KalahaConstants.leftPitHouseId;

KalahaPit pitHouse = game.getPit(pitHouseIndex);

pitHouse.addStones(oppositeStones + 1);

return;

}

targetPit.sow();

}

public PlayerTurns nextTurn(PlayerTurns currentTurn) {

if (currentTurn == PlayerTurns.PlayerA)

return PlayerTurns.PlayerB;

return PlayerTurns.PlayerA;

}

}

7 — Redis In-Memory Cache

Loading Mancala game instances from MongoDB on every request can be very resource-intensive, especially in a large production deployment with millions of online users. Therefore, we apply the Data Locality pattern in our implementation, keeping our data as close as possible to the processing thread. There are many options available, such as Facebook's Mcrouter, Yahoo's Pistachio, and Redis. To use Spring Data Redis in our project, we need to add the following dependency to the pom.xml file:

<!-- Redis -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

We also need the following Redis configuration in the application.properties file:

# Redis pool configurations

spring.redis.jedis.pool.max-active=7

spring.redis.jedis.pool.max-idle=7

spring.redis.jedis.pool.min-idle=2

#Redis Cache specific configurations

spring.cache.redis.cache-null-values=false

spring.cache.redis.time-to-live=6000000

spring.cache.redis.use-key-prefix=true

# Redis data source configuration

spring.redis.port=6379

spring.redis.host=localhost

# Spring cache system

spring.cache.type=redis

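As you can see, we have configured Spring's caching abstraction to use Redis as its underlying cache store. Note that the spring.redis.jedis.pool.* settings only take effect when the Jedis client is on the classpath; spring-boot-starter-data-redis uses the Lettuce client by default. For more information, you can read the Spring Boot documentation here.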
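Two of the settings above are easy to misread: spring.cache.redis.time-to-live is a plain number, which Spring Boot reads as milliseconds (6,000,000 ms is 100 minutes), and use-key-prefix makes every Redis key carry the cache name. The following sketch emulates both with an in-memory map and a manually advanced clock; both are assumptions made for demonstration, since Redis expires keys on its own. It also assumes the default Spring Data Redis prefix of the cache name followed by "::", so a game would be stored under a key such as kalahGames::<gameId>. The class name TtlCacheSketch is hypothetical.

```java
import java.time.Duration;
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

public class TtlCacheSketch {
    // 6000000 with no unit is read as milliseconds
    static final Duration TTL = Duration.ofMillis(6_000_000);

    // cached value plus the clock time at which it stops being served
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> store = new HashMap<>();
    private final LongSupplier clockMillis;

    TtlCacheSketch(LongSupplier clockMillis) { this.clockMillis = clockMillis; }

    // use-key-prefix=true: the cache name plus "::" is prepended to every key
    static String redisKey(String cacheName, String key) {
        return cacheName + "::" + key;
    }

    void put(String cacheName, String key, String value) {
        store.put(redisKey(cacheName, key), new Entry(value, clockMillis.getAsLong() + TTL.toMillis()));
    }

    // returns null on a miss or when the entry has expired
    String get(String cacheName, String key) {
        String fullKey = redisKey(cacheName, key);
        Entry entry = store.get(fullKey);
        if (entry == null) {
            return null;
        }
        if (clockMillis.getAsLong() >= entry.expiresAtMillis) {
            store.remove(fullKey); // Redis would have expired the key by itself
            return null;
        }
        return entry.value;
    }

    public static void main(String[] args) {
        long[] now = {0};
        TtlCacheSketch cache = new TtlCacheSketch(() -> now[0]);
        cache.put("kalahGames", "5d34968590fcbd35b086bc21", "{...game json...}");
        System.out.println(redisKey("kalahGames", "5d34968590fcbd35b086bc21"));
        System.out.println(TTL.toMinutes() + " minutes");
        now[0] += TTL.toMillis(); // jump to the moment the entry expires
        System.out.println(cache.get("kalahGames", "5d34968590fcbd35b086bc21"));
    }
}
```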

8 — Mancala Game Controller

There are three endpoints defined for the 'mancala-api' microservice:

@Slf4j

@RestController

@RequestMapping("/games")

@Api(value = "Mancala game API. Set of endpoints for Creating and Sowing the Game")

public class MancalaController {

@Autowired

private MancalaGameService mancalaGameService;

@Autowired

private MancalaSowingService mancalaSowingService;

@Value("${mancala.pit.stones}")

private Integer pitStones;

@PostMapping

@ApiOperation(value = "Endpoint for creating new Mancala game instance. It returns a KalahaGame object with unique GameId used for sowing the game",

produces = "Application/JSON", response = KalahaGame.class, httpMethod = "POST")

public ResponseEntity<KalahaGame> createGame() throws Exception {

log.info("Invoking create() endpoint... ");

KalahaGame game = mancalaGameService.createGame(pitStones);

log.info("Game instance created. Id=" + game.getId());

log.info(new ObjectMapper().writerWithDefaultPrettyPrinter().writeValueAsString(game));

mancalaGameService.updateGame(game);

return ResponseEntity.ok(game);

}

@PutMapping(value = "{gameId}/pits/{pitId}")

@ApiOperation(value = "Endpoint for sowing the game. It keeps the history of the Game instance for consecutive requests. ",

produces = "Application/JSON", response = KalahaGame.class, httpMethod = "PUT")

public ResponseEntity<KalahaGame> sowGame(

@ApiParam(value = "The id of game created by calling createGame() method. It can't be empty or null", required = true)

@PathVariable(value = "gameId") String gameId,

@PathVariable(value = "pitId") Integer pitId) throws Exception {

log.info("Invoking sow() endpoint. GameId: " + gameId + " , pit Index: " + pitId);

if (pitId == null || pitId < 1 || pitId >= KalahaConstants.leftPitHouseId || pitId == KalahaConstants.rightPitHouseId)

throw new MancalaApiException("Invalid pit index! It should be between 1..6 or 8..13");

KalahaGame kalahaGame = mancalaGameService.loadGame(gameId);

kalahaGame = mancalaSowingService.sow(kalahaGame, pitId);

mancalaGameService.updateGame(kalahaGame);

log.info("sow is called for Game id:" + gameId + " , pitIndex:" + pitId);

log.info(new ObjectMapper().writerWithDefaultPrettyPrinter().writeValueAsString(kalahaGame));

return ResponseEntity.ok(kalahaGame);

}

@GetMapping("{id}")

@ApiOperation(value = "Endpoint for returning the latest status of the Game",

produces = "Application/JSON", response = KalahaGame.class, httpMethod = "GET")

public ResponseEntity<KalahaGame> gameStatus(

@ApiParam(value = "The id of game created by calling createGame() method. It's an String e.g. 5d34968590fcbd35b086bc21. It can't be empty or null",

required = true)

@PathVariable(value = "id") String gameId) throws Exception {

return ResponseEntity.ok(mancalaGameService.loadGame(gameId));

}

}

9 — Service Discovery Using Consul

Consul is an open-source service registry and discovery tool used to connect and secure services across any runtime platform. To use Consul in your Spring Boot application, add the following dependency to your pom.xml file:

<!-- Consul -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-consul-discovery</artifactId>

</dependency>

Then add the @EnableDiscoveryClient annotation to your Spring Boot startup class:

@EnableMongoRepositories

@EnableCaching

@EnableDiscoveryClient

@SpringBootApplication

public class MancalaGameApiApplication {

public static void main(String[] args) {

SpringApplication.run(MancalaGameApiApplication.class, args);

}

}

Next, set the necessary Consul configuration in your application.properties file:

#consul configurations

spring.cloud.consul.host=localhost

spring.cloud.consul.port=8500

spring.cloud.consul.discovery.preferIpAddress=true

spring.cloud.consul.discovery.instanceId=${spring.application.name}:${spring.application.instance_id:${random.value}}

Finally, add the following configuration to your bootstrap.properties file:

spring.application.name=mancala-api

We have already launched our Consul server on port 8500 using the Docker Compose file provided in the project repository:

version: '3'
services:
  consul-server:
    image: consul:1.2.0
    command: consul agent -dev -client 0.0.0.0
    ports:
      - "8500:8500"
      - "8600:8600/udp"
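Spring Cloud Consul queries the Consul agent on your behalf, but it can help to see the underlying call. The sketch below builds a request to Consul's HTTP API endpoint /v1/health/service/<name> with the passing filter, which returns only the instances whose health checks currently pass. It assumes Java 11+ (java.net.http) and the agent listening on localhost:8500 as in the compose file above; the class name ConsulLookupSketch is hypothetical, and running main requires a live Consul agent.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

public class ConsulLookupSketch {
    // Builds GET <consul>/v1/health/service/<name>?passing (only instances passing their checks)
    static HttpRequest healthyInstancesRequest(String consulBaseUrl, String serviceName) {
        String encodedName = URLEncoder.encode(serviceName, StandardCharsets.UTF_8);
        URI uri = URI.create(consulBaseUrl + "/v1/health/service/" + encodedName + "?passing");
        return HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = healthyInstancesRequest("http://localhost:8500", "mancala-api");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The body is a JSON array; each element describes one instance (Node, Service, Checks)
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```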

10 — Swagger Documentation

Swagger is a widely used open-source project for documenting REST APIs. It allows you to describe the structure of your API in a machine-readable way. To use Swagger with your Spring Boot application, add the following dependencies to your pom.xml file:

<!-- Swagger -->

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger2</artifactId>

<version>2.9.2</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger-ui</artifactId>

<version>2.9.2</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-bean-validators</artifactId>

<version>2.9.2</version>

</dependency>

Then, you need to provide your custom Swagger configuration class within your Spring Boot application:

@EnableSwagger2

@Configuration

public class SwaggerConfiguration {

@Bean

public Docket apiDocket() {

return new Docket(DocumentationType.SWAGGER_2)

.select()

.apis(RequestHandlerSelectors.basePackage("com.dzone.mancala.game.controller"))

.paths(PathSelectors.any())

.build()

.apiInfo(getApiInfo());

}

private ApiInfo getApiInfo() {

return new ApiInfo(

"Mancala Game API Service",

"This application provides an API for building the Mancala game application.",

"1.0.0",

"TERMS OF SERVICE URL",

new Contact("Esfandiyar", "http://linkedin.com/in/esfandiyar", "esfand55@gmail.com"),

"MIT License",

"LICENSE URL",

Collections.emptyList()

);

}

}

The operations in the MancalaController class have been documented using the @ApiOperation and @ApiParam annotations from Swagger:

@PutMapping(value = "{gameId}/pits/{pitId}")

@ApiOperation(value = "Endpoint for sowing the game. It keeps the history of the Game instance for consecutive requests. ",

produces = "Application/JSON", response = KalahaGame.class, httpMethod = "PUT")

public ResponseEntity<KalahaGame> sowGame(

@ApiParam(value = "The id of game created by calling createGame() method. It can't be empty or null", required = true)

@PathVariable(value = "gameId") String gameId,

@PathVariable(value = "pitId") Integer pitId) throws Exception { ... }

@PostMapping

@ApiOperation(value = "Endpoint for creating new Mancala game instance. It returns a KalahaGame object with unique GameId used for sowing the game",

produces = "Application/JSON", response = KalahaGame.class, httpMethod = "POST")

public ResponseEntity<KalahaGame> createGame() throws Exception { ... }

To see the online documentation for your REST services, you need the springfox-swagger-ui dependency in your pom.xml file, as shown above. After launching your service, navigate to the following address: http://localhost/mancala-api/swagger-ui.html

Note that this URL reflects how we access our microservices through the Consul registry, with Apache httpd proxying requests and hiding the underlying ports from our end users. To achieve this, we use HashiCorp's Consul Template tool: https://releases.hashicorp.com/consul-template/0.18.0/consul-template_0.18.0_linux_amd64.zip.

For more details about the configuration, see the apache folder in my GitHub repository for this project: https://github.com/esfand55/mancala-game/tree/master/docker/apache

However, if you run your Spring Boot application standalone, for instance on port 8080, the URL is: http://localhost:8080/swagger-ui.html

You can even test your API using the web interface provided by Swagger.
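Besides Swagger UI, the three endpoints can be exercised from any HTTP client. Here is a minimal sketch using the java.net.http client from Java 11+; it assumes the standalone setup on port 8080 (behind the Apache proxy the base URL would be http://localhost/mancala-api), and the class and method names are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class MancalaApiClientSketch {
    private final String baseUrl;
    private final HttpClient http = HttpClient.newHttpClient();

    MancalaApiClientSketch(String baseUrl) { this.baseUrl = baseUrl; }

    // POST /games creates a new game
    HttpRequest createGame() {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/games"))
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    // PUT /games/{gameId}/pits/{pitId} sows one pit (the controller accepts 1..6 and 8..13)
    HttpRequest sow(String gameId, int pitId) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/games/" + gameId + "/pits/" + pitId))
                .PUT(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    // GET /games/{id} returns the latest state of the game
    HttpRequest status(String gameId) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/games/" + gameId)).GET().build();
    }

    // Sends a request and returns the JSON body
    String send(HttpRequest request) throws Exception {
        return http.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws Exception {
        MancalaApiClientSketch client = new MancalaApiClientSketch("http://localhost:8080");
        System.out.println(client.send(client.createGame())); // JSON including the new game's id
    }
}
```

For example, after creating a game, calling client.send(client.sow(gameId, 3)) returns the updated board as JSON; pit ids outside 1..6 and 8..13 are rejected by the controller.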

11 — Spring Boot Actuator

In the world of microservices, it's essential to have enough runtime information about how well each microservice operates in production, through a comprehensive set of metrics and health checks collected from those microservices. Spring Boot Actuator lets you monitor and interact with your application at runtime through well-defined APIs. To enable Spring Boot Actuator, add the following dependency to your pom.xml file:

<!-- Spring boot actuator to expose metrics endpoint -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

You can observe these metrics by navigating to the following address if you are running your Spring Boot application on, for instance, port 8080: http://localhost:8080/actuator.

In our implementation, however, since we are using Consul and the httpd proxy, the URL is:

http://localhost/mancala-api/actuator

{"_links":{"self":{"href":"http://localhost/actuator","templated":false},
"archaius":{"href":"http://localhost/actuator/archaius","templated":false},
"auditevents":{"href":"http://localhost/actuator/auditevents","templated":false},
"beans":{"href":"http://localhost/actuator/beans","templated":false},
"caches":{"href":"http://localhost/actuator/caches","templated":false},
"caches-cache":{"href":"http://localhost/actuator/caches/{cache}","templated":true},
"health":{"href":"http://localhost/actuator/health","templated":false},
"health-component":{"href":"http://localhost/actuator/health/{component}","templated":true},
"health-component-instance":{"href":"http://localhost/actuator/health/{component}/{instance}","templated":true},
"conditions":{"href":"http://localhost/actuator/conditions","templated":false},
"configprops":{"href":"http://localhost/actuator/configprops","templated":false},
"env-toMatch":{"href":"http://localhost/actuator/env/{toMatch}","templated":true},
"env":{"href":"http://localhost/actuator/env","templated":false},
"info":{"href":"http://localhost/actuator/info","templated":false},
"logfile":{"href":"http://localhost/actuator/logfile","templated":false},
"loggers-name":{"href":"http://localhost/actuator/loggers/{name}","templated":true},
"loggers":{"href":"http://localhost/actuator/loggers","templated":false},
"heapdump":{"href":"http://localhost/actuator/heapdump","templated":false},
"threaddump":{"href":"http://localhost/actuator/threaddump","templated":false},
"prometheus":{"href":"http://localhost/actuator/prometheus","templated":false},
"metrics-requiredMetricName":{"href":"http://localhost/actuator/metrics/{requiredMetricName}","templated":true},
"metrics":{"href":"http://localhost/actuator/metrics","templated":false},
"scheduledtasks":{"href":"http://localhost/actuator/scheduledtasks","templated":false},
"httptrace":{"href":"http://localhost/actuator/httptrace","templated":false},
"mappings":{"href":"http://localhost/actuator/mappings","templated":false},
"refresh":{"href":"http://localhost/actuator/refresh","templated":false},
"features":{"href":"http://localhost/actuator/features","templated":false},
"service-registry":{"href":"http://localhost/actuator/service-registry","templated":false},
"consul":{"href":"http://localhost/actuator/consul","templated":false}}}

You can define which endpoints are exposed over HTTP in your application.properties file:

management.endpoints.web.exposure.include=*

management.endpoints.web.exposure.exclude=

The equivalent management.endpoints.jmx.exposure.* properties control which endpoints are exposed over JMX. To see detailed information about any of the above endpoints, append its name to the actuator URL. For instance, to see the metrics information, check this URL:

http://localhost/mancala-api/actuator/metrics

{"names":["jvm.memory.max","jvm.threads.states","process.files.max","jvm.gc.memory.promoted","system.load.average.1m","jvm.memory.used","jvm.gc.max.data.size","jvm.gc.pause","jvm.memory.committed","system.cpu.count","logback.events","http.server.requests","tomcat.global.sent","jvm.buffer.memory.used","tomcat.sessions.created","jvm.threads.daemon","system.cpu.usage","jvm.gc.memory.allocated","tomcat.global.request.max","tomcat.global.request","tomcat.sessions.expired","jvm.threads.live","jvm.threads.peak","tomcat.global.received","process.uptime","tomcat.sessions.rejected","process.cpu.usage","tomcat.threads.config.max","jvm.classes.loaded","jvm.classes.unloaded","tomcat.global.error","tomcat.sessions.active.current","tomcat.sessions.alive.max","jvm.gc.live.data.size","tomcat.threads.current","process.files.open","jvm.buffer.count","jvm.buffer.total.capacity","tomcat.sessions.active.max","tomcat.threads.busy","process.start.time"]}

To see the health of the microservice, check this URL: http://localhost/mancala-api/actuator/health

{"status":"UP"}

This is Spring Boot's default health check implementation for a microservice. You can always customize it and provide your own definition of health for your microservices. To do so, implement the HealthIndicator interface and provide your own implementation of the health() method:

@Component

public class ServiceAHealthIndicator implements HealthIndicator {

private final String message_key = "mancala-api Service is Available";

@Override

public Health health() {

if (!isServiceRunning()) {

return Health.down().withDetail(message_key, "Not Available").build();

}

return Health.up().withDetail(message_key, "Available").build();

}

private Boolean isServiceRunning() {

Boolean isRunning = true;

// Logic Skipped. Provide your own logic

return isRunning;

}

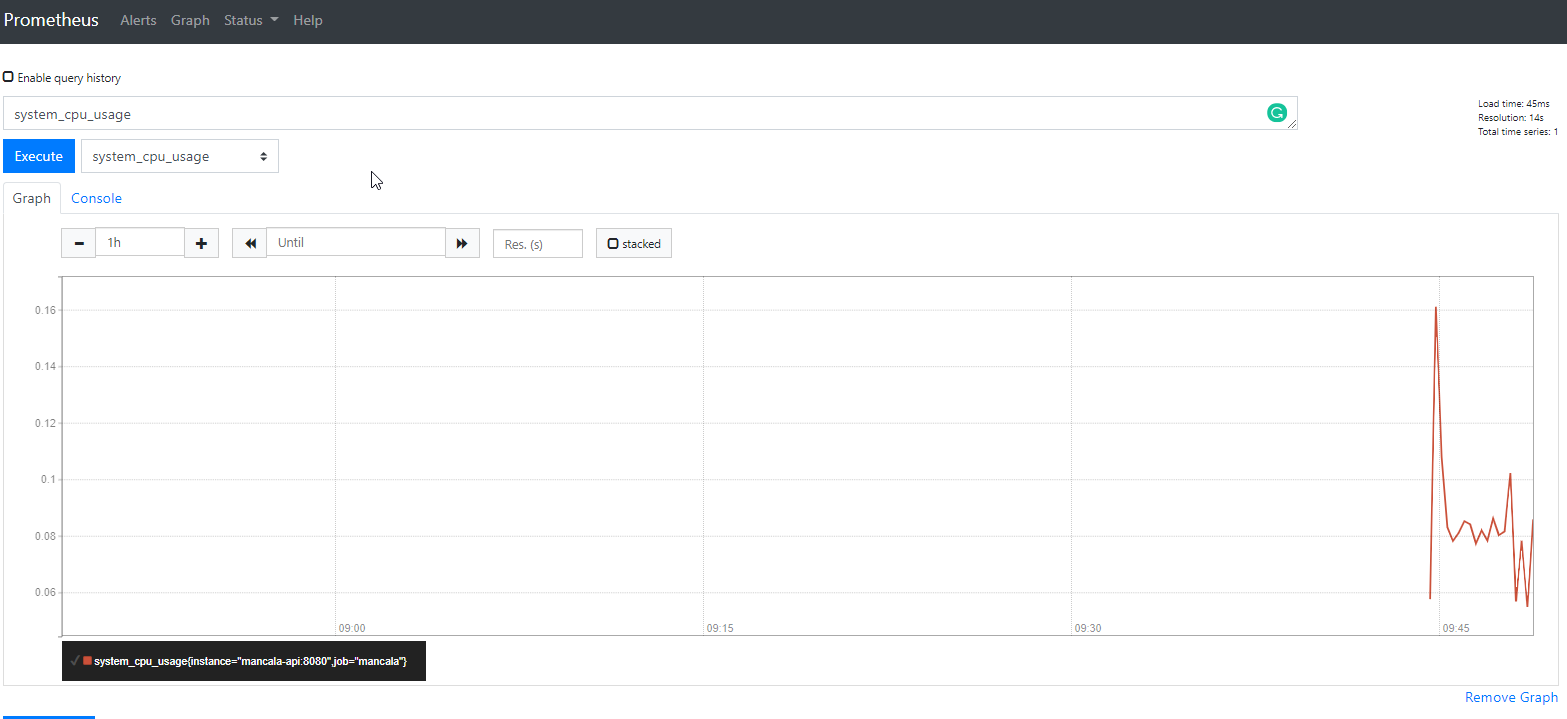

}12 — Micrometer and Prometheus Server

Micrometer is a vendor-neutral metrics instrumentation facade for JVM applications, widely used by developers. It lets you instrument your code once and ship the collected metrics to different monitoring backends, such as Prometheus, through pluggable registry libraries.

To enable Micrometer to send your metrics to a Prometheus server from your Spring Boot application, add the following dependencies to your pom.xml file:

<!-- Micrometer core -->

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-core</artifactId>

</dependency>

<!-- Micrometer Prometheus registry -->

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

</dependency>

You also need to provide the following configuration in the application.properties file of your Spring Boot application:

# Prometheus configuration

management.metrics.export.prometheus.enabled=true

management.endpoint.prometheus.enabled=true

In this implementation, we have used Docker Compose to build and run our containers. You can find the docker-compose.yml file designed for this purpose in the GitHub repository for this project here.

You can access the Prometheus server and explore the various metrics collected at: http://localhost:9090/graph

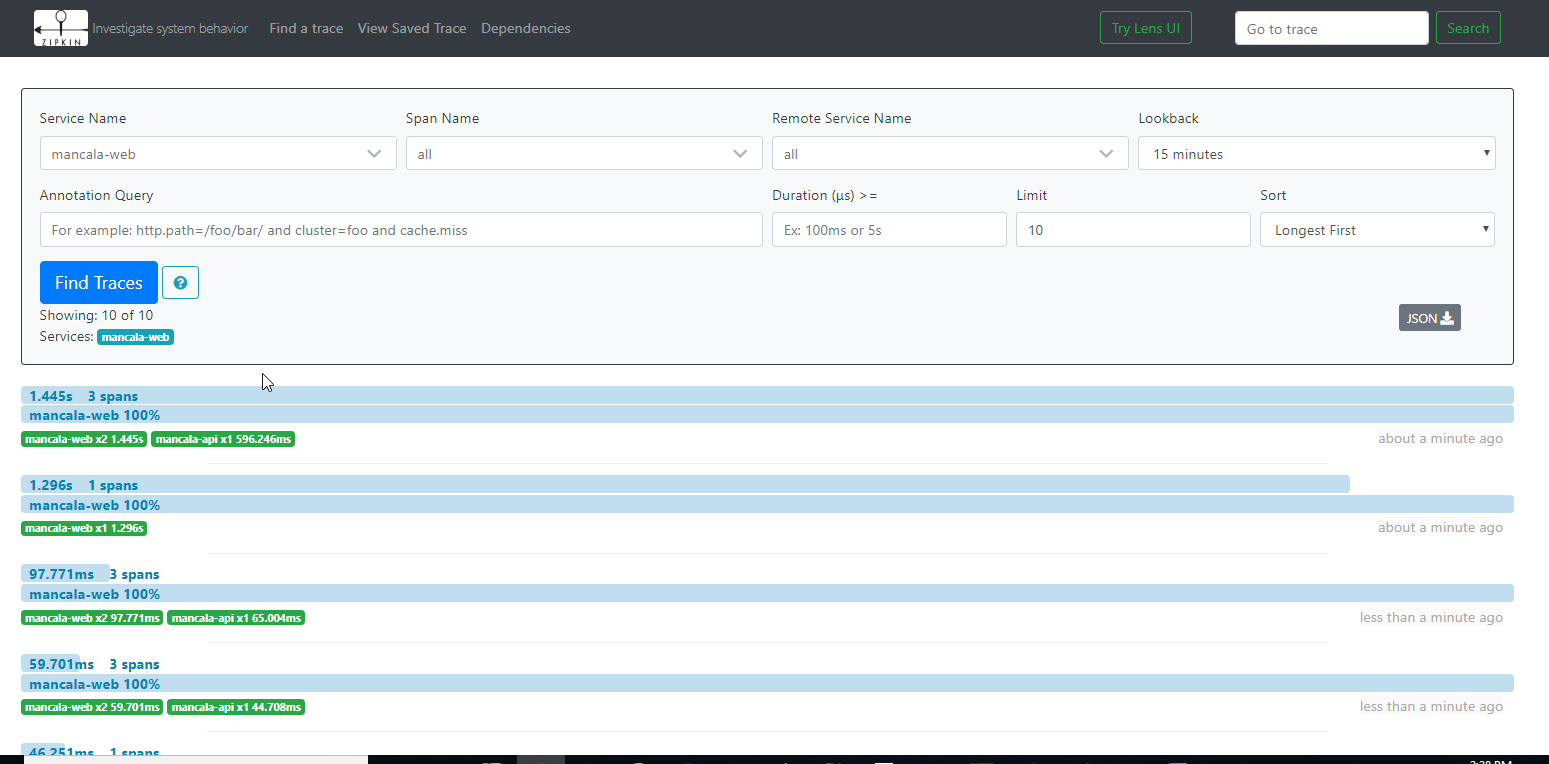
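What Prometheus actually scrapes from /actuator/prometheus is plain text in the Prometheus exposition format. The sketch below renders a single counter in that format to show the shape of the data. Micrometer produces this output for you; the class PrometheusFormatSketch, the metric name mancala_sow_requests_total, and its labels are hypothetical examples (Micrometer's own HTTP timer, for instance, appears as http_server_requests_seconds_count).

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

public class PrometheusFormatSketch {
    // Label values must escape backslash, double quote, and newline
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Renders HELP and TYPE lines followed by one sample; labels are sorted for stable output
    static String renderCounter(String name, String help, Map<String, String> labels, double value) {
        String labelText = labels.isEmpty() ? "" : new TreeMap<>(labels).entrySet().stream()
                .map(e -> e.getKey() + "=\"" + escape(e.getValue()) + "\"")
                .collect(Collectors.joining(",", "{", "}"));
        return "# HELP " + name + " " + help + "\n"
                + "# TYPE " + name + " counter\n"
                + name + labelText + " " + value + "\n";
    }

    public static void main(String[] args) {
        System.out.print(renderCounter("mancala_sow_requests_total", "Number of sow requests",
                Map.of("status", "200", "uri", "/games/{gameId}/pits/{pitId}"), 42));
    }
}
```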

13 — Distributed Tracing Using Spring Cloud Sleuth and Zipkin

In a microservices ecosystem, where a typical request might span multiple services, we need efficient tools to diagnose any performance degradation or failure along that chain and to provide the runtime information required to debug and fix those issues. Spring Cloud Sleuth, part of the Spring Cloud project, is designed to address this problem.

To enable this feature in your Spring Boot application, add the following dependency to your pom.xml file:

<!-- Sleuth-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-sleuth</artifactId>

</dependency>

and provide the following configuration in your application.properties file:

#Sleuth configurations

spring.sleuth.sampler.probability=1
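The probability setting decides what fraction of new traces is recorded and reported to Zipkin: 1 means every request, 0.1 roughly one in ten. The decision is made once, when a trace starts, and travels with the request (by default in B3 headers such as X-B3-TraceId and X-B3-Sampled) so that downstream services honor it. The sketch below illustrates that idea; it is a simplification that does not reproduce Sleuth's or Brave's actual sampler, and the class name TraceSamplingSketch is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

public class TraceSamplingSketch {
    private final double probability;
    private final Random random;

    TraceSamplingSketch(double probability, Random random) {
        if (probability < 0.0 || probability > 1.0) {
            throw new IllegalArgumentException("probability must be in [0, 1]");
        }
        this.probability = probability;
        this.random = random;
    }

    // nextDouble() is in [0, 1), so probability 1.0 always samples and 0.0 never does
    boolean isSampled() {
        return random.nextDouble() < probability;
    }

    // Headers a caller would attach when starting a new trace and calling a downstream service
    Map<String, String> newTraceHeaders() {
        String traceId = String.format("%016x", random.nextLong()); // 64-bit id as 16 hex chars
        Map<String, String> headers = new LinkedHashMap<>();
        headers.put("X-B3-TraceId", traceId);
        headers.put("X-B3-SpanId", traceId); // this sketch reuses the trace id for the root span
        headers.put("X-B3-Sampled", isSampled() ? "1" : "0");
        return headers;
    }

    public static void main(String[] args) {
        TraceSamplingSketch sampler = new TraceSamplingSketch(1.0, new Random());
        System.out.println(sampler.newTraceHeaders());
    }
}
```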

Zipkin is a distributed tracing system. It helps gather the timing data needed to troubleshoot latency problems in service architectures, handling both the collection and the lookup of this data. To enable Zipkin in your Spring Boot application, add the following dependency to your pom.xml file:

<!-- Zipkin -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-zipkin</artifactId>

</dependency>

and provide the following configuration in your application.properties file:

spring.zipkin.base-url=http://localhost:9411/

We have used Docker Compose to build and run our containers. Here is the docker-compose.yml file designed for this purpose:

version: '3'
services:
  consul-server:
    image: consul:1.2.0
    command: consul agent -dev -client 0.0.0.0
    ports:
      - "8500:8500"
      - "8600:8600/udp"
  mongodb:
    image: mongo:latest
    container_name: "dzone_mancala_mongodb"
    ports:
      - "27017:27017"
    # --smallfiles was removed in MongoDB 4.2, so it is omitted for mongo:latest
    command: mongod --logpath=/dev/null # --quiet
  redisdb:
    restart: always
    container_name: "dzone_mancala_redis"
    image: redis
    ports:
      - "6379:6379"
  mancala-api:
    build: ../mancala-microservice/mancala-api
    links:
      - consul-server
      - zipkin-server
    depends_on:
      - redisdb
      - mongodb
    environment:
      - MANCALA_PIT_STONES=6
      - SPRING_CLOUD_CONSUL_HOST=consul-server
      - SPRING_APPLICATION_NAME=mancala-api
      - SPRING_DATA_MONGODB_HOST=mongodb
      - SPRING_DATA_MONGODB_PORT=27017
      - SPRING_REDIS_HOST=redisdb
      - SPRING_REDIS_PORT=6379
      - MANCALA_API_SERVICE_ID=mancala-api
      - SPRING_ZIPKIN_BASE_URL=http://zipkin-server:9411/
  mancala-web:
    build: ../mancala-microservice/mancala-web
    links:
      - consul-server
      - zipkin-server
    environment:
      - SPRING_CLOUD_CONSUL_HOST=consul-server
      - SPRING_APPLICATION_NAME=mancala-web
      - MANCALA_API_SERVICE_ID=mancala-api
      - SPRING_ZIPKIN_BASE_URL=http://zipkin-server:9411/
  apache:
    build: apache
    links:
      - consul-server
    depends_on:
      - consul-server
    ports:
      - "80:80"
  zipkin-storage:
    image: openzipkin/zipkin-cassandra
    container_name: cassandra
    ports:
      - "9042:9042"
  zipkin-server:
    image: openzipkin/zipkin
    ports:
      - "9411:9411"
    environment:
      - STORAGE_TYPE=cassandra3
      - CASSANDRA_ENSURE_SCHEMA=false
      - CASSANDRA_CONTACT_POINTS=cassandra
    depends_on:
      - zipkin-storage
  grafana:
    image: grafana/grafana:6.2.5
    container_name: grafana
    ports:
      - "3000:3000"
    depends_on:
      - prometheus
    environment:
      - GF_AUTH_ANONYMOUS_ENABLED=true
      - GF_AUTH_ANONYMOUS_ORG_ROLE=Admin
  prometheus:
    build: prometheus
    links:
      - mancala-api
    ports:
      - "9090:9090"
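The environment entries in this file override values from application.properties because Spring Boot binds environment variables to configuration properties. For example, MANCALA_PIT_STONES supplies the value for @Value("${mancala.pit.stones}") in MancalaController. The sketch below applies the canonical naming rule from the Spring Boot documentation (replace dots with underscores, remove dashes, convert to uppercase); Boot also accepts some legacy variants, which this hypothetical helper ignores.

```java
import java.util.List;
import java.util.Locale;

public class EnvVarNameSketch {
    // Canonical form: '.' becomes '_', '-' is dropped, everything is uppercased
    static String toEnvVarName(String propertyName) {
        return propertyName.replace('.', '_').replace("-", "").toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        for (String property : List.of("mancala.pit.stones", "spring.data.mongodb.host", "spring.redis.host")) {
            System.out.println(property + " <- " + toEnvVarName(property));
        }
    }
}
```

This is why the compose file can point the same image at different MongoDB, Redis, and Consul hosts without rebuilding it.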

To build the images, execute the following command:

docker-compose -f docker-compose-zipkin-cassandra.yml build

and to run them, execute:

docker-compose -f docker-compose-zipkin-cassandra.yml up -d

Then you can access the Zipkin web interface to see all the tracing information about calls to our microservices at: http://localhost:9411

14 — ELK Stack

"ELK" is the acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Elasticsearch is a search and analytics engine. Logstash is a server-side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a "stash" such as Elasticsearch. Kibana lets users visualize Elasticsearch data with charts and graphs. In this project, the lightweight Filebeat shipper takes the place of Logstash and sends the log files directly to Elasticsearch.

We use the Java logger API to log every operation in the MancalaController class, together with its result, so that these entries can be ingested into the Elasticsearch engine through the Filebeat component. The log data is written to the logs folder, as configured in our logback-spring.xml file, which is located under the resources folder of our Spring Boot application:

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml" />
    <property name="LOG_FILE"
              value="${LOG_FILE:-${LOG_PATH:-${LOG_TEMP:-${java.io.tmpdir:-/tmp}}/}spring.log}" />
    <include resource="org/springframework/boot/logging/logback/console-appender.xml" />

    <!-- Writes one JSON document per line into logs/, where Filebeat picks it up -->
    <appender name="JSON_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>logs/mancala-api-${PID}.json</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <!-- Roll daily into the same logs/ folder and keep 7 days of history -->
            <fileNamePattern>logs/mancala-api-${PID}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <arguments />
                <stackTrace />
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <pattern>
                    <pattern>
                        {
                        "severity": "%level",
                        "service": "mancala-api",
                        "pid": ${PID:-},
                        "thread": "%thread",
                        "logger": "%logger",
                        "message": "%message"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>

    <root level="INFO">
        <appender-ref ref="JSON_FILE" />
        <appender-ref ref="CONSOLE" />
    </root>
</configuration>

We also need to configure the Filebeat module to read these log files from the right location. This configuration is set in the filebeat.yml file used by the Filebeat Dockerfile:

filebeat.prospectors:
  - input_type: log
    paths:
      - /logs/*.json
    json:
      keys_under_root: true

output.elasticsearch:
  hosts:
    - elasticsearch:9200

In this application, we use docker-compose to build and run our containers. The docker-compose.yml file for the configuration above is provided here:

version: '3'

services:
  consul-server:
    image: consul:1.2.0
    command: consul agent -dev -client 0.0.0.0
    ports:
      - "8500:8500"
      - "8600:8600/udp"
  mongodb:
    image: mongo:latest
    container_name: "dzone_mancala_mongodb"
    ports:
      - "27017:27017"
    command: mongod --logpath=/dev/null # --quiet
  redisdb:
    restart: always
    container_name: "dzone_mancala_redis"
    image: redis
    ports:
      - "6379:6379"
  mancala-api:
    build: ../mancala-microservice/mancala-api
    volumes:
      - logs:/logs
    links:
      - consul-server
      - zipkin-server
    depends_on:
      - redisdb
      - mongodb
    environment:
      - MANCALA_PIT_STONES=6
      - SPRING_CLOUD_CONSUL_HOST=consul-server
      - SPRING_APPLICATION_NAME=mancala-api
      - SPRING_DATA_MONGODB_HOST=mongodb
      - SPRING_DATA_MONGODB_PORT=27017
      - SPRING_REDIS_HOST=redisdb
      - SPRING_REDIS_PORT=6379
      - MANCALA_API_SERVICE_ID=mancala-api
      - SPRING_ZIPKIN_BASE_URL=http://zipkin-server:9411/
  mancala-web:
    build: ../mancala-microservice/mancala-web
    volumes:
      - logs:/logs
    links:
      - consul-server
      - zipkin-server
    environment:
      - SPRING_CLOUD_CONSUL_HOST=consul-server
      - SPRING_APPLICATION_NAME=mancala-web
      - MANCALA_API_SERVICE_ID=mancala-api
      - SPRING_ZIPKIN_BASE_URL=http://zipkin-server:9411/
  apache:
    build: apache
    links:
      - consul-server
    depends_on:
      - consul-server
    ports:
      - "80:80"
  zipkin-storage:
    image: openzipkin/zipkin-cassandra
    container_name: cassandra
    ports:
      - "9042:9042"
  zipkin-server:
    image: openzipkin/zipkin
    ports:
      - "9411:9411"
    environment:
      - STORAGE_TYPE=cassandra3
      - CASSANDRA_ENSURE_SCHEMA=false
      - CASSANDRA_CONTACT_POINTS=cassandra
    depends_on:
      - zipkin-storage
  prometheus:
    build: prometheus
    links:
      - mancala-api
    ports:
      - "9090:9090"
  node-exporter:
    image: prom/node-exporter:latest
    ports:
      - '9100:9100'
  grafana:
    image: grafana/grafana:6.2.5
    container_name: grafana
    ports:
      - "3000:3000"
    depends_on:
      - prometheus
    environment:
      - GF_AUTH_ANONYMOUS_ENABLED=true
      - GF_AUTH_ANONYMOUS_ORG_ROLE=Admin
  filebeat:
    build: filebeat
    volumes:
      - logs:/logs
    links:
      - elasticsearch
  elasticsearch:
    build: elasticsearch
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - xpack.security.enabled=false
      - xpack.monitoring.enabled=false
  kibana:
    image: docker.elastic.co/kibana/kibana:6.3.1
    ports:
      - "5601:5601"
    links:
      - elasticsearch
    environment:
      - ELASTICSEARCH_URL=http://elasticsearch:9200

volumes:
  logs:

This docker-compose.yml file includes every feature of the game discussed in this article.
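Each Spring Boot service in the compose files is configured entirely through its `environment` entries. Spring Boot binds environment variables to configuration properties, and its documented rule for deriving a variable name from a property name is: replace dots with underscores, remove dashes, and convert to uppercase. The sketch below applies that rule to the standard Spring properties used in the compose files. The class and method names are hypothetical, and the assumption that custom keys such as MANCALA_PIT_STONES bind to an application-specific property (for example `mancala.pit.stones`) is not confirmed by this section.

```java
import java.util.Locale;

public class EnvVarNames {

    // Spring Boot's canonical property-to-environment-variable rule:
    // dots become underscores, dashes are removed, everything is uppercased.
    static String toEnvironmentVariable(String propertyName) {
        return propertyName.replace('.', '_').replace("-", "").toUpperCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        // Standard Spring properties that the compose files set via environment variables.
        String[] properties = {
            "spring.application.name",
            "spring.cloud.consul.host",
            "spring.data.mongodb.host",
            "spring.data.mongodb.port",
            "spring.redis.host",
            "spring.redis.port"
        };
        for (String property : properties) {
            System.out.println(property + " -> " + toEnvironmentVariable(property));
        }
    }
}
```

Configuring containers this way keeps connection details such as the MongoDB and Redis hostnames out of the packaged application, so the same image can run against any environment.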

You can then access the Kibana web interface at http://localhost:5601/
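In Kibana, each log entry appears as a document whose fields come from the JSON encoder in the logging configuration shown earlier. Because filebeat.yml sets `keys_under_root: true`, fields such as `severity` and `service` sit at the top level of each event rather than under a `json` key. To make the shape of those lines concrete, here is a minimal sketch that builds one line by hand, using the same field names as the `<pattern>` provider plus the `@timestamp` field added by the `<timestamp>` provider. It is only an illustration: the real encoder's timestamp format and field order may differ, and the class name and the thread, logger, and message values are hypothetical.

```java
import java.time.Instant;

public class JsonLogLine {

    // Escapes the characters that cannot appear verbatim inside a JSON string.
    static String escape(String value) {
        StringBuilder sb = new StringBuilder();
        for (char c : value.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        // Other control characters use the four-digit hex escape form.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Builds one JSON line with the field names used by the encoder configuration.
    static String line(Instant timestamp, String level, long pid,
                       String thread, String logger, String message) {
        return "{\"@timestamp\":\"" + timestamp + "\","
                + "\"severity\":\"" + escape(level) + "\","
                + "\"service\":\"mancala-api\","
                + "\"pid\":" + pid + ","
                + "\"thread\":\"" + escape(thread) + "\","
                + "\"logger\":\"" + escape(logger) + "\","
                + "\"message\":\"" + escape(message) + "\"}";
    }

    public static void main(String[] args) {
        // Hypothetical values; the real logger name would be the controller's fully qualified class name.
        System.out.println(line(Instant.now(), "INFO", ProcessHandle.current().pid(),
                Thread.currentThread().getName(), "MancalaController",
                "Game created with 6 stones per pit"));
    }
}
```

Escaping the message matters because a single unescaped quote in a log message would make the whole line invalid JSON, and Filebeat could not decode it into separate fields.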

15- Better API With TDD

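Developing robust, production-ready APIs is essential in a microservices architecture, and it requires careful attention and adherence to sound design principles. Test-driven development (TDD) is one way to achieve this: each behaviour is first specified by a failing test and then implemented until that test passes.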
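As a small illustration of the test-first style, the sketch below specifies one rule of the game, namely where the last sown stone lands, using three checks that would be written before the code that satisfies them. The constants mirror KalahaConstants (14 pits, player A's house at pit 7, player B's house at pit 14). However, `lastPitAfterSowing` and the `check` helper are hypothetical and are not taken from mancala-api. In the real project, such checks would normally be JUnit tests. A plain `main` method is used here only to keep the example dependency-free.

```java
public class SowingRuleTest {

    // Values mirror KalahaConstants: 14 pits in total, player A's house is pit 7
    // (rightPitHouseId) and player B's house is pit 14 (leftPitHouseId).
    static final int TOTAL_PITS = 14;
    static final int RIGHT_PIT_HOUSE_ID = 7;
    static final int LEFT_PIT_HOUSE_ID = 14;

    // Hypothetical helper (not from mancala-api): returns the pit that receives the
    // last stone when sowing in increasing pit order from startPit, skipping the
    // opponent's house.
    static int lastPitAfterSowing(int startPit, int stones, int opponentHouseId) {
        int pit = startPit;
        int remaining = stones;
        while (remaining > 0) {
            pit = pit % TOTAL_PITS + 1; // after pit 14 comes pit 1
            if (pit == opponentHouseId) {
                continue; // the opponent's house never receives a stone
            }
            remaining--;
        }
        return pit;
    }

    // Minimal stand-in for a JUnit assertion.
    static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError("FAILED: " + description);
        }
        System.out.println("PASSED: " + description);
    }

    public static void main(String[] args) {
        // In TDD these checks come first and fail until lastPitAfterSowing is implemented.
        check(lastPitAfterSowing(1, 6, LEFT_PIT_HOUSE_ID) == RIGHT_PIT_HOUSE_ID,
                "player A sowing 6 stones from pit 1 ends in player A's house");
        check(lastPitAfterSowing(6, 9, LEFT_PIT_HOUSE_ID) == 2,
                "player A skips player B's house and wraps around to pit 2");
        check(lastPitAfterSowing(13, 2, RIGHT_PIT_HOUSE_ID) == 1,
                "player B sows into its own house and then wraps to pit 1");
    }
}
```

The first check covers the case where the last stone lands in the player's own house, which in Kalah gives that player another turn. This makes it a natural first failing test to write.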

16- Source Code

The complete source code for this project is available in my GitHub repository.

17- How to Build and Run

To build and run this application, please follow the instructions I have provided here.

In my next article, "Building Mancala Game in Microservices Using Spring Boot (Web Client Microservice Implementation)", I explain how to develop a simple web application using Spring Boot and Vaadin that consumes the 'mancala-api' microservice through the Consul service registry.

Your comments and feedback are highly appreciated.


Opinions expressed by DZone contributors are their own.
