Hybrid Multi-Cloud Event Mesh Architectural Design

Building the event mesh with Camel



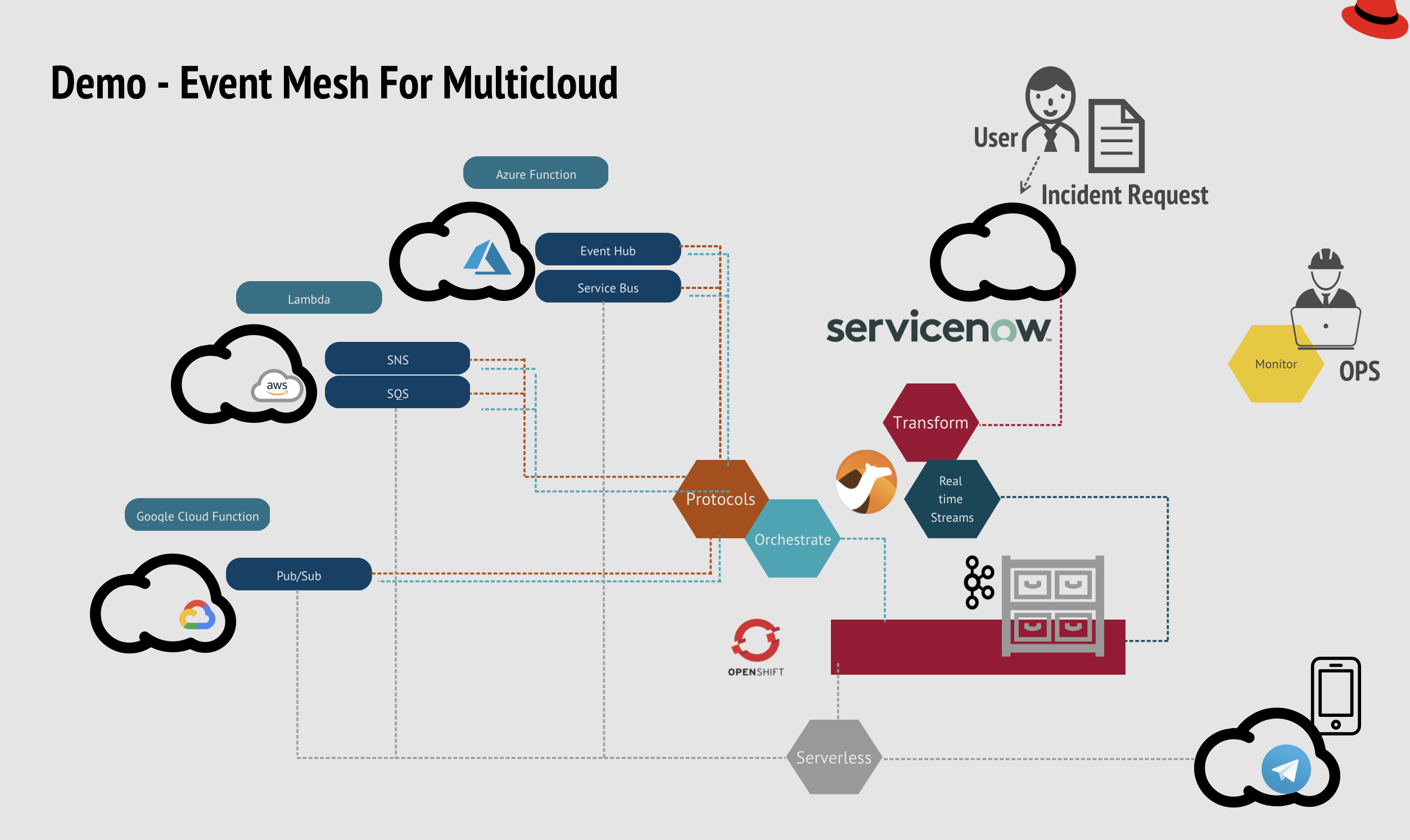

This blog is part one of my three blogs on how to build a hybrid multi-cloud event mesh with Camel. In this part, I go over what inspired me to build the demo and how everything fits together architecturally. I use Red Hat OpenShift (Kubernetes) as the platform for the mesh, Kafka as the backbone to stream and persist events, and, last but not least, Apache Camel (in Red Hat Integration) to weave the mesh across ServiceNow, Amazon AWS, Microsoft Azure, and Google Cloud Platform.

Why a hybrid multi-cloud demo, and what are the challenges?

The whole reason I'm doing this is the trend I'm seeing among our customers. More than 50% of the CTOs and architects we interview are moving at least part of their workload to a single cloud or to multiple clouds. Not surprisingly, most of them also have in-house data centers and buy SaaS services. That got me thinking: what are the possible ways to bridge and connect everything, and what challenges will we face when trying to connect the dots?

Looking specifically at the integration side: as more applications, services, and functionality are deployed across geographies, data centers, and clouds, problems start to arise:

Service Silo: Possible causes include geographic regulation, how the company structures its teams, deployment policies, or vendor-specific features. Services are NOT effectively reused, simply because they are hard to get to, or because other teams don't even know they exist.

Data Integrity: Keeping data in sync across multiple clouds can be challenging. Event-driven architecture helps by streaming real-time events to multiple clouds simultaneously; an event can represent a state change, a request, or a command. This gives you eventual consistency: the faster and more broadly events propagate, the closer to real time the system becomes.

Integration Mess: Many point-to-point connections can quickly turn into a disorganized tangle. This will definitely slow down how fast you can deliver changes to your applications, because there is no organized, holistic view of where events are going or who subscribes to them.
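To put a rough number on the mess, here is a back-of-the-envelope sketch. It assumes the worst case, where every system talks directly to every other system, and compares it with a mesh where each system attaches once through its own connector; both counting rules are simplifying assumptions, not measurements from the demo.

```python
def point_to_point_links(n: int) -> int:
    """Worst case: every pair of systems has its own direct connection."""
    return n * (n - 1) // 2


def mesh_links(n: int) -> int:
    """Assumed mesh case: each system plugs into the shared mesh exactly once."""
    return n


for n in (4, 8, 16):
    print(f"{n} systems: point-to-point={point_to_point_links(n)}, mesh={mesh_links(n)}")
```

With 4 systems that is 6 direct links versus 4 connectors; with 16 systems it is 120 versus 16, so point-to-point wiring grows quadratically while the mesh grows linearly.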

Building an Event Mesh

To keep your solution from becoming a bowl of spaghetti, I propose building an event mesh.

There are many components in the mesh. First, you need a reliable streaming platform that can handle a high throughput of events. Here I am using Red Hat's Kafka platform. In the cluster, we can create topics, each responsible for a particular type of event, for instance, an inventory removal request, the state of an order, or the transaction log of an account. To access these events, you need connectors; here I am using Camel to connect the dots and build the event mesh. As the diagram above shows, having connectors makes your mesh FLEXIBLE! You can easily plug into and unplug from the mesh as needed. (Of course, you can switch some connectors to Debezium if you need access to state events in a database or a simple application.)
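The plug-and-unplug property can be illustrated with a toy, in-memory stand-in for the streaming platform, with one topic per event type. This is not Kafka's API: real Kafka consumers poll topics (typically in consumer groups) rather than having handlers pushed to them, and the class, method, and topic names here are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class ToyEventMesh:
    """Hypothetical in-memory stand-in for a streaming backbone: one topic per event type."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, Dict[str, Handler]] = defaultdict(dict)
        self._log: Dict[str, List[dict]] = defaultdict(list)  # persisted events per topic

    def plug(self, topic: str, connector_name: str, handler: Handler) -> None:
        """Attach a connector to a topic; other connectors are unaffected."""
        self._subscribers[topic][connector_name] = handler

    def unplug(self, topic: str, connector_name: str) -> None:
        """Detach a connector without touching producers or other consumers."""
        self._subscribers[topic].pop(connector_name, None)

    def publish(self, topic: str, event: dict) -> None:
        """Persist the event, then fan it out to every connector on the topic."""
        self._log[topic].append(event)
        for handler in list(self._subscribers[topic].values()):
            handler(event)

    def history(self, topic: str) -> List[dict]:
        """Return a copy of everything ever published to the topic."""
        return list(self._log[topic])


# Usage: plug a (hypothetical) warehouse connector in, publish, unplug, publish again.
received: List[dict] = []
mesh = ToyEventMesh()
mesh.plug("inventory-removal-request", "warehouse-connector", received.append)
mesh.publish("inventory-removal-request", {"sku": "A-1", "qty": 2})
mesh.unplug("inventory-removal-request", "warehouse-connector")
mesh.publish("inventory-removal-request", {"sku": "B-7", "qty": 1})
```

The producer never changes when a connector is unplugged: the second event still lands in the topic's history, and only the fan-out to that connector stops.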

Once the foundation of the mesh is in place, the hardest part begins: defining the events your system needs. Domain-driven design (DDD) can get you started. Once you understand the context of the domain, define the entities; these are what you should treat as the basis of your events. Then ask: do you need to publish state changes of an entity? That could be an event. What about query requests for an entity? Do you need to combine entities for analytics? In my demo, the use case is simple. I have two events: the service request gathered from ServiceNow, and the result event that is displayed in Telegram.
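The two events in the demo could be modeled as small, immutable records that are serialized before they go onto a topic. This is a sketch under assumptions: the class names, the field names, and the idea of correlating a result with its request by incident number are illustrative, not taken from the demo's code.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ServiceRequestEvent:
    """Hypothetical shape of the event gathered from a ServiceNow incident task."""
    incident_number: str
    request_type: str       # later used to decide which cloud handles the request
    short_description: str


@dataclass(frozen=True)
class ResultEvent:
    """Hypothetical shape of the result event displayed in Telegram."""
    incident_number: str    # correlates the result with the original request
    cloud: str
    message: str


def to_json(event) -> str:
    """Serialize an event dataclass to compact JSON before publishing it."""
    return json.dumps(asdict(event), separators=(",", ":"))


request = ServiceRequestEvent("INC0010001", "network", "Reset VPN token")
result = ResultEvent(request.incident_number, "gcp", "Token reset completed")
print(to_json(request))
```

Keeping the events as plain, versionable records makes it easy for any connector in the mesh, in any language, to consume them.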

Some serverless workloads

Serverless is itself event driven, and not all nodes in the mesh need to be up 24/7. Scaling idle connectors down to zero running instances, on standby until events arrive, optimizes resource allocation in the cloud and lowers the cost of running the mesh. I have made some connector nodes serverless to demonstrate that the mesh is not only architecturally flexible but also resource- and budget-friendly.
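The scale-to-zero idea can be expressed as a simple rule. On OpenShift, this decision is made by the platform's autoscaler (for example Knative, which underpins OpenShift Serverless and supports scaling to zero), not by your own code; the function below is only a hypothetical illustration, and its parameters (`events_per_replica`, `max_replicas`) are made-up values.

```python
import math


def desired_replicas(pending_events: int, events_per_replica: int = 100,
                     max_replicas: int = 5) -> int:
    """Hypothetical scaling rule: zero instances when idle, else enough for the backlog."""
    if pending_events <= 0:
        return 0  # nothing to process: the connector stays on standby with no running instance
    needed = math.ceil(pending_events / events_per_replica)
    return min(needed, max_replicas)  # cap the scale-out to bound cost


for backlog in (0, 1, 250, 10_000):
    print(backlog, desired_replicas(backlog))
```

With no pending events the connector runs zero instances; a backlog of 250 events calls for 3 replicas with the default settings, and very large backlogs are capped at 5.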

The Demo

The demo starts with a connector (Camel Quarkus) that collects incident tasks from ServiceNow. While collecting, it filters and transforms the incoming events into a smaller payload to lower ingress/egress costs. The payload is then picked up by other nodes in the mesh, which push the events to AWS, Azure, or GCP depending on the request type. For Azure, I send the event to both Event Hubs (Azure's streaming service) and Service Bus, which triggers an Azure Function. On AWS, the events are sent to SNS, where they are picked up by a Lambda function. Lastly, on Google Cloud Platform, events are sent via Pub/Sub and consumed by a Cloud Function. Serverless connectors (Kamelets) subscribe to each cloud for result notifications. When a result event occurs, the corresponding serverless connector scales up and returns the result to Telegram.
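The filter, transform, and route steps can be sketched in plain Python to show what happens to each event on its way through the mesh. This is not the Camel route from the demo: the field names follow ServiceNow's incident table (`number`, `category`, `short_description`, `work_notes`), but the category-to-cloud mapping and the destination names are hypothetical. In Camel itself, these steps would typically map to its filter, message translator (transform), and content-based router (choice) patterns.

```python
import json
from typing import Dict, Iterable, List, Tuple

# Hypothetical routing table: request category -> destinations in the mesh.
# The "software" route fans out to two Azure targets, mirroring Event Hubs + Service Bus.
ROUTES: Dict[str, Tuple[str, ...]] = {
    "hardware": ("aws-sns",),                             # picked up by a Lambda function
    "software": ("azure-eventhubs", "azure-servicebus"),  # Service Bus triggers an Azure Function
    "network": ("gcp-pubsub",),                           # consumed by a Cloud Function
}

# Fields kept from the full incident record; everything else is dropped to shrink the payload.
KEPT_FIELDS = ("number", "category", "short_description")


def slim(incident: dict) -> dict:
    """Transform: project the large incident record onto a few fields."""
    return {field: incident.get(field, "") for field in KEPT_FIELDS}


def filter_transform_route(incidents: Iterable[dict]) -> List[Tuple[str, str]]:
    """Drop unroutable incidents, slim the rest, and fan each payload out to its destinations."""
    routed: List[Tuple[str, str]] = []
    for incident in incidents:
        destinations = ROUTES.get(incident.get("category", ""))
        if destinations is None:
            continue  # filter: no node in the mesh handles this request type
        payload = json.dumps(slim(incident), separators=(",", ":"))
        for destination in destinations:
            routed.append((destination, payload))
    return routed


incidents = [
    {"number": "INC0010001", "category": "software", "short_description": "App crash",
     "sys_id": "a1b2c3", "work_notes": "Long free-text notes that no consumer needs"},
    {"number": "INC0010002", "category": "inquiry", "short_description": "General question"},
]
for destination, payload in filter_transform_route(incidents):
    print(destination, payload)
```

Only the "software" incident survives the filter, and its slimmed payload is sent to both Azure destinations; the "inquiry" incident has no route and is dropped before it costs any egress.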

Watch this video for the actual demonstration:

The technology stack I used is pretty simple: OpenShift as the on-site hybrid platform, Red Hat Integration for the foundational streaming platform (Kafka), and Apache Camel to build the connectors.

In my upcoming blogs, I will dive into each connector and into monitoring the mesh.

Opinions expressed by DZone contributors are their own.
