Enterprise AI Platform With Amazon Bedrock

A walkthrough of Amazon Bedrock and its various Foundation Models, and how it can be used to build an Enterprise Gen AI platform.

What Is the Enterprise GEN-AI Platform?

The release of ChatGPT by OpenAI has shown many businesses the immense potential of large language models and the power of generative AI. As a result, companies of all sizes across industries like retail, travel, healthcare, and finance are rushing to adopt generative AI to boost productivity and foster innovation, delivering better outcomes and value to their customers.

Organizations have multiple options for leveraging these powerful AI capabilities in their ecosystem by designing and building a robust, scalable Gen AI platform. They can either set up private infrastructure to run open-source models fine-tuned with domain-specific data from their own business, or use vendor APIs to access proprietary closed models hosted externally. The possibilities are endless for harnessing generative AI to solve real business challenges and move beyond prototypes into full-scale implementations that generate tangible gains. Companies are racing to capitalize on this technology and translate the initial excitement into concrete benefits.

However, not every strategy works for all organizations at all levels. A one-size-fits-all approach may not meet the privacy or compliance needs of some businesses. Additionally, highly regulated industries like finance and healthcare often have extra compliance and regulatory requirements that not all AI platforms can address. Despite these challenges, there are key considerations that AI leaders across various industry sectors consistently seek in their Generative AI platforms:

- Streamlining the development of generative AI applications so that it is both fast and user-friendly, while prioritizing robust security and data privacy through controls on access and sharing. Strong data security and governance capabilities in the AI platform ensure sensitive data is protected, which is critical for privacy and compliance.

- Emphasizing the adoption of the most efficient and cost-effective infrastructure for generative AI, allowing organizations to train or fine-tune their models economically. This approach facilitates scalable inference to support diverse business use cases. The platform should also integrate with existing company infrastructure and data to maximize value.

- Delivering enterprise-level generative AI applications to revolutionize workflow processes and enhance overall operational efficiency.

- Leveraging data as a distinctive factor by customizing Foundation Models (FMs), making them experts tailored to the unique aspects of a business, its data, and its operations.

- Meeting regulatory requirements such as FINRA rules or HIPAA compliance so that the infrastructure or platform is protected against data leaks and addresses the relevant regulations effectively.

- Transparent AI capabilities so companies understand how the technology works. Explainability builds trust.

- Robust content filtering to avoid generating inappropriate or biased content. This is important for responsible AI.

The one-size-fits-all approach has limitations. Leading organizations seek generative AI platforms that align with their specific requirements and priorities around security, governance, transparency, and responsible AI.

Why Do We Need Foundation Models as Opposed to the Traditional Machine Learning Model?

To understand the benefits of foundation models, it helps to compare them to traditional machine learning models. Machine learning models are trained on specific data to perform narrow functions. In contrast, foundation models are large-scale models trained on diverse data, which makes them adaptable to a wide range of downstream applications and tasks through fine-tuning. The broad training of foundation models allows them to learn general capabilities like language understanding that can then be specialized for specific use cases. This makes foundation models more flexible and capable than narrow machine learning models trained only for one purpose. In summary, foundation models are a more versatile base for building many different AI applications, whereas machine learning models have limited scope for transferability to new tasks. The broad applicability of foundation models trained on large, diverse data makes them a superior launching point for organizations looking to leverage AI across multiple domains.

Generative AI powered by the Foundation Models has a wide range of use cases across different industries and domains:

- All industries: Chatbots and question-answering systems, document summarization.

- Financial sector: Risk management and fraud detection.

- Healthcare industry: Drug development, personalized medicine recommendations, and assisting researchers in completing large, repetitive tasks.

- Retail sector: Pricing and inventory optimization, product and brand classification, product recommendations based on a user's recent purchases, and generation of product metadata and descriptions.

- Energy and utilities: Predictive maintenance, renewable energy design.

In summary, key applications of generative AI powered by foundation models include conversational agents, prediction systems for risk/fraud/maintenance, optimized and personalized recommendations, creative design across products and technologies, and more. The versatility of generative AI is allowing companies across sectors to integrate its capabilities into diverse business processes and functions.

How Amazon Bedrock Can Lay the Foundation of the Enterprise Gen AI Platform

Amazon Bedrock is a new AWS service that lets businesses easily utilize and customize generative AI models through an API. Companies can now build and expand AI applications without having to manage the complex infrastructure and maintenance required to run these models themselves. Amazon Bedrock acts as a "Foundation Models as a Service" platform where customers can explore both open-source and proprietary models to find the best fit for their needs. A key benefit is the serverless experience, which simplifies customizing foundation models with a company's own data. The customized models can then be seamlessly integrated and deployed using other AWS tools within the organization's infrastructure. Overall, Bedrock aims to make leveraging generative AI more accessible by removing the barriers of model management and infrastructure complexity.

Amazon Bedrock helps organizations adopt generative AI more easily by providing convenient access to high-quality foundation models for text and images. It offers both open-source and proprietary models from multiple vendors, including Amazon's own Titan models. This alleviates the need for enterprises to do vendor assessments themselves since Amazon runs everything on its infrastructure. By handling security, compliance, and model serving, Bedrock removes key barriers to generative AI adoption for companies. They no longer have to build and maintain their own model infrastructure and capabilities. Instead, Bedrock allows them to leverage powerful generative models through a simple API without worrying about underlying complexities.

Amazon Bedrock lowers the barriers for enterprises to adopt generative AI, both open source and commercial, by:

- Enabling easy fine-tuning of existing open-source or closed foundation models using just a few labeled examples in Amazon S3, without large-scale data annotation or building a data pipeline. This simplifies customization and speeds up the development of new generative AI-based applications.

- Providing a serverless, scalable, reliable, and secure managed service where customers maintain full control and governance over their data for customization. Built-in model access systems allow administrators to control model usage, supporting strong AI governance.

- Integrating with Amazon SageMaker, AWS Lambda, Amazon EKS, Amazon ECS, and other AWS services like Amazon EC2 through APIs so developers can easily build, scale, and deploy AI apps without managing infrastructure or high-level LLM deployment processes.

In summary, Bedrock accelerates generative AI adoption by simplifying customization, seamlessly integrating with AWS, and giving enterprises full control, governance, and security over their data and models. This reduces risk and time-to-value when leveraging generative AI capabilities.
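To make the "few labeled examples in Amazon S3" point more concrete, here is a minimal Python sketch of how such examples might be prepared as a JSON Lines file before upload. The `prompt` and `completion` field names and the sample records are assumptions for illustration; the exact schema depends on the model being customized, so check its fine-tuning documentation.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSON Lines, one record per line."""
    lines = []
    for prompt, completion in examples:
        # The "prompt"/"completion" keys are an assumed schema, not a
        # guarantee for every model that supports customization.
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines) + "\n"


# Hypothetical labeled examples for a retail product-classification task.
EXAMPLES = [
    ("Classify the product: 'Stainless steel water bottle, 750 ml'", "Kitchen & Dining"),
    ("Classify the product: 'Wireless noise-cancelling headphones'", "Electronics"),
]

if __name__ == "__main__":
    print(to_jsonl(EXAMPLES), end="")
```

The resulting text would be saved as a file and uploaded to an S3 bucket that the customization job is allowed to read.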

A Walkthrough of the Amazon Bedrock Service

After logging in to the AWS console, we need to type Amazon Bedrock in the search bar to load the service.

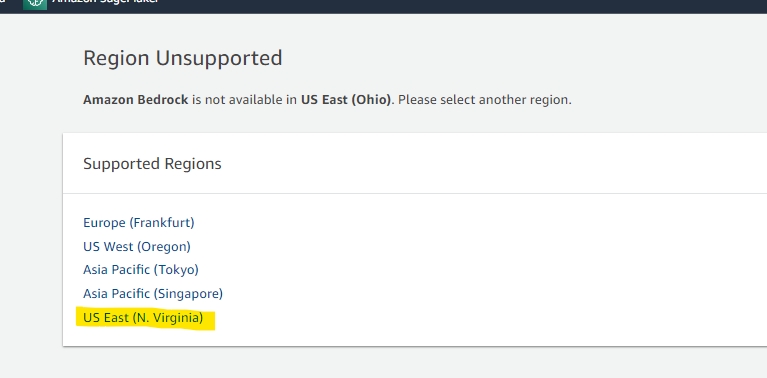

At the time of writing, Bedrock is available in only a few regions, and AWS is constantly adding new ones to the list. Here, I am going to select US East (N. Virginia).

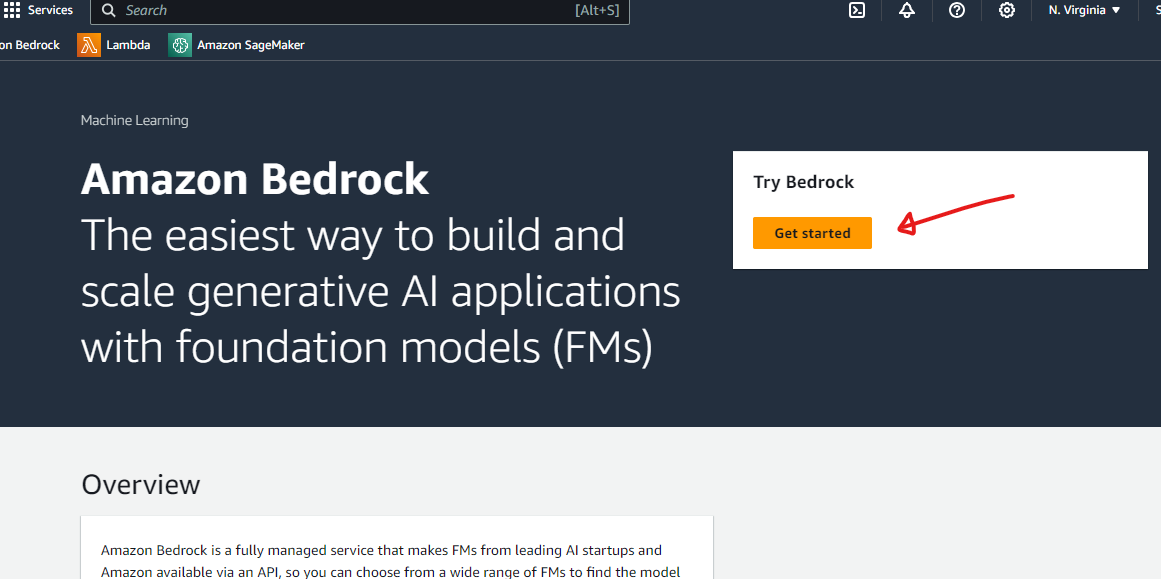

Once a supported region is selected, the Amazon Bedrock console opens.

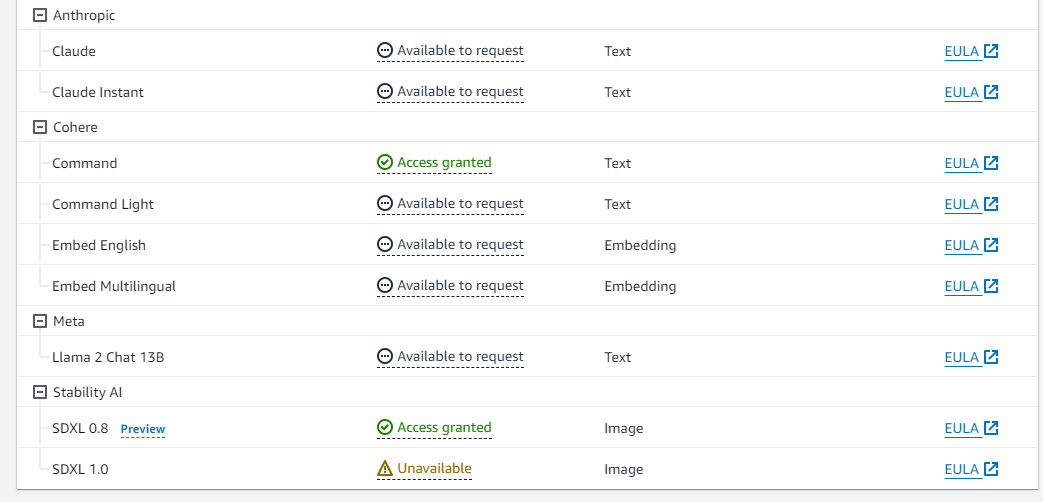

This is the dashboard of Amazon Bedrock, which allows you to play with the models or control access to them for other users. Since I logged in with a root user account, the dashboard shows me all the features and controls available to an admin. It lists many well-known LLM or FM vendors such as AI21, Amazon, and Anthropic, along with various playgrounds where one can experiment with a model and its behavior by entering prompts.

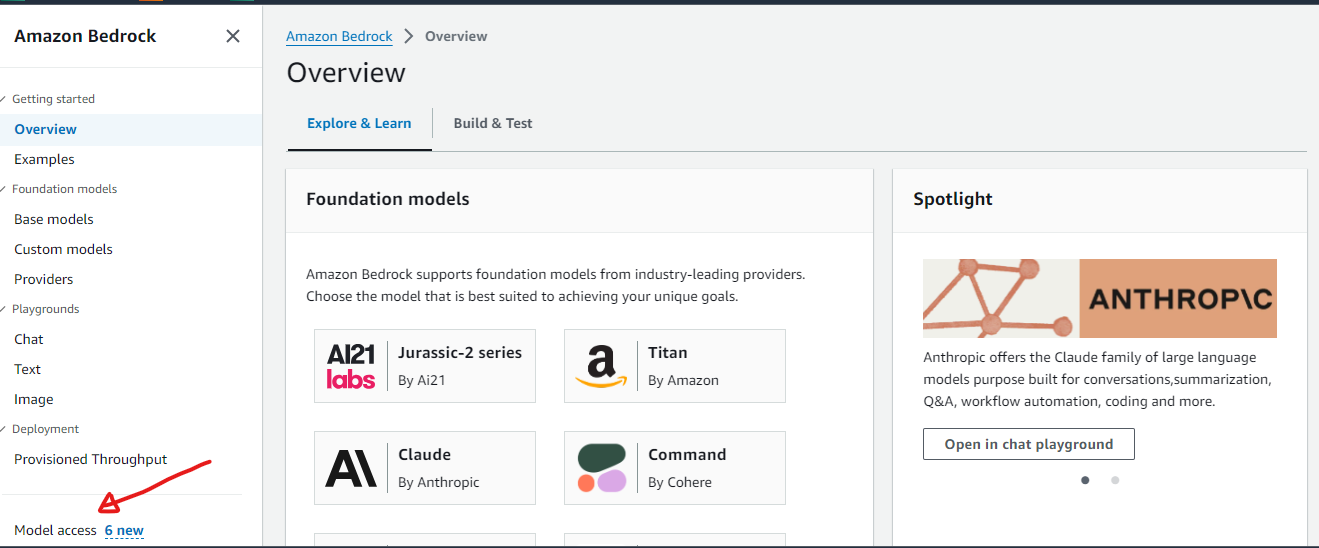

One of the important links is "Model Access," which lets you control access to the models. Click the link to open the model access gallery.

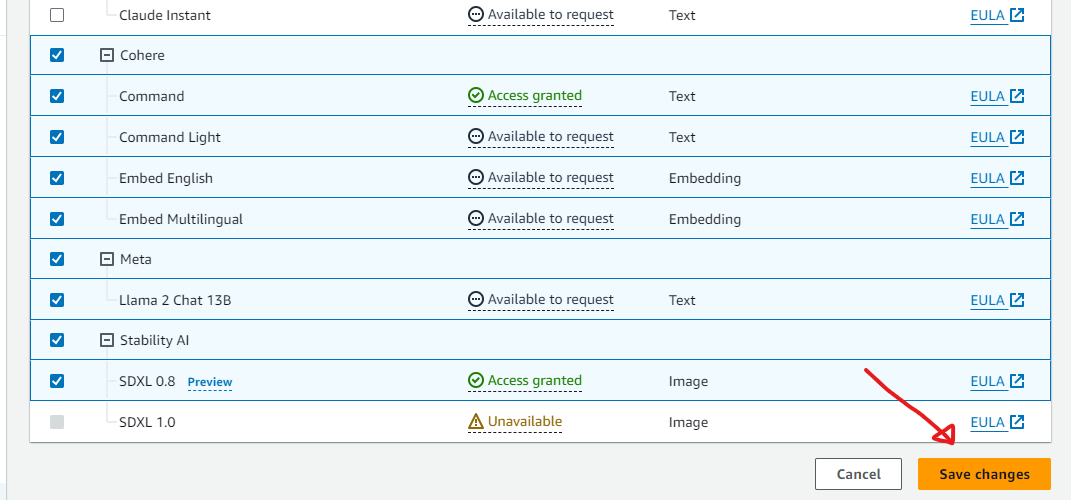

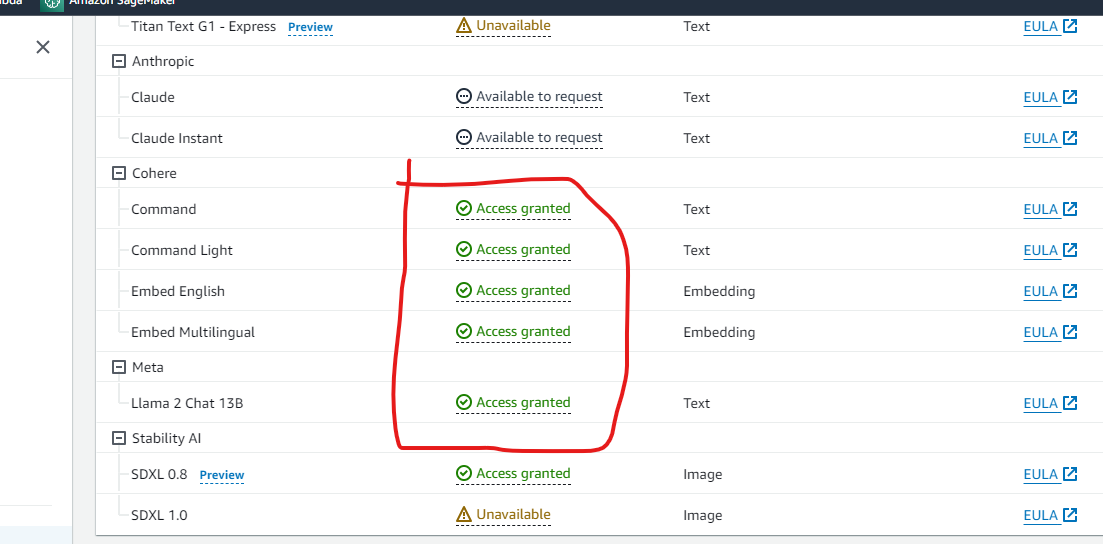

The model access dashboard shows, by vendor, all the models Amazon Bedrock currently offers. Some of them, like Anthropic's Claude models, require you to fill out a long form before you can access them. The rest are pretty simple. You need to click the button to manage model access, which shows an editable checklist to control access.

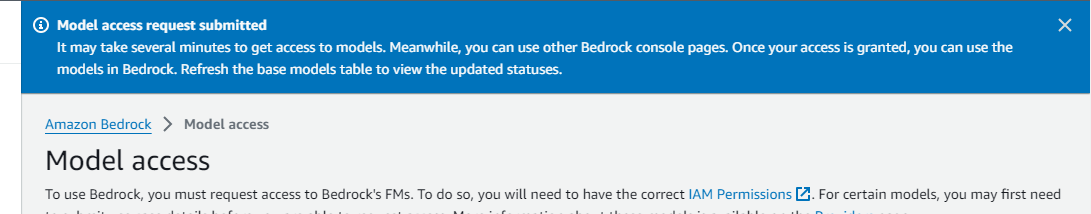

Once you click the Save changes button, the status of the access changes to "In Progress." Sometimes you need to wait more than an hour, or even a day, depending on the type of model you are requesting and your account type.

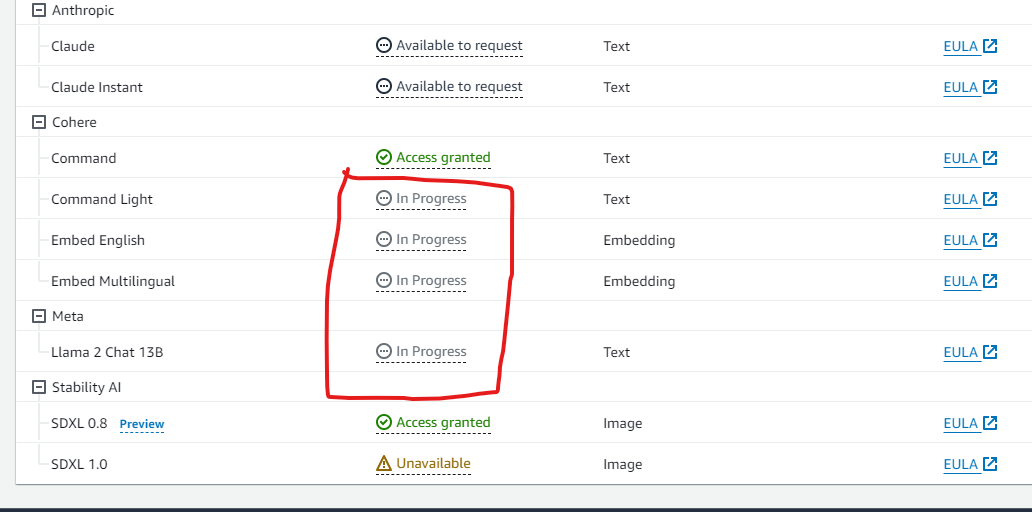

Once AWS has given access to the models you requested, the status will change to "Access Granted." Now you can call the model through the API and integrate it into your own applications.

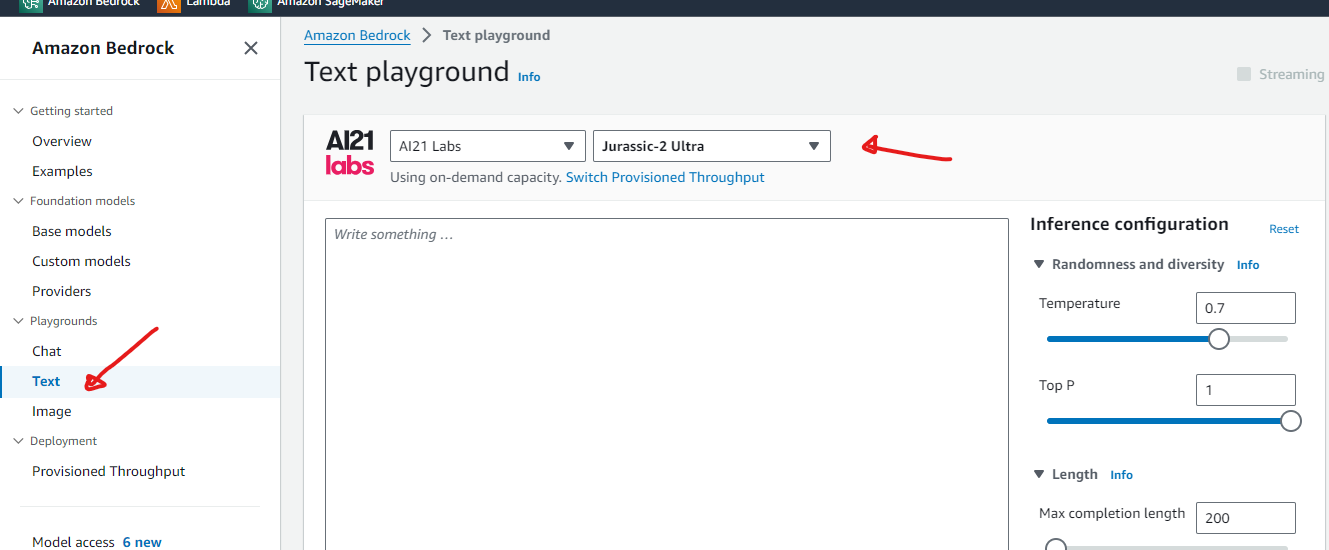
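The model catalog can also be inspected programmatically. The sketch below assumes Python with boto3 and valid AWS credentials; it groups the model IDs returned by the Bedrock `list_foundation_models` call by provider. The `SAMPLE` dictionary is a hand-written stand-in in the assumed response shape so the grouping logic can be tried offline. Note that this lists the models offered in a region, not whether access has been granted to your account.

```python
from collections import defaultdict


def models_by_provider(response):
    """Group model IDs by provider from a ListFoundationModels-style response."""
    grouped = defaultdict(list)
    for summary in response.get("modelSummaries", []):
        grouped[summary["providerName"]].append(summary["modelId"])
    return {provider: sorted(ids) for provider, ids in grouped.items()}


def fetch_models(region="us-east-1"):
    """Query Bedrock for the models offered in a region (needs credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    client = boto3.client("bedrock", region_name=region)
    return models_by_provider(client.list_foundation_models())


# Hand-written sample in the assumed response shape, for offline use only.
SAMPLE = {
    "modelSummaries": [
        {"modelId": "anthropic.claude-v2", "providerName": "Anthropic"},
        {"modelId": "amazon.titan-text-express-v1", "providerName": "Amazon"},
        {"modelId": "ai21.j2-ultra-v1", "providerName": "AI21 Labs"},
        {"modelId": "ai21.j2-mid-v1", "providerName": "AI21 Labs"},
    ]
}

if __name__ == "__main__":
    for provider, ids in sorted(models_by_provider(SAMPLE).items()):
        print(provider, ids)
```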

We can go back to the original dashboard and click on the "Text" link to open the Text Generation playground, where we can use some prompt engineering to evaluate the models by choosing the vendor and the corresponding model from the drop-down. The model's response can also be customized by changing the configuration shown in the right panel. The "temperature" setting controls how random the model's output is. A higher temperature leads to more creative or varied responses. If the value is zero, you can expect largely the same response from the model for a given prompt, question, or NLP task.

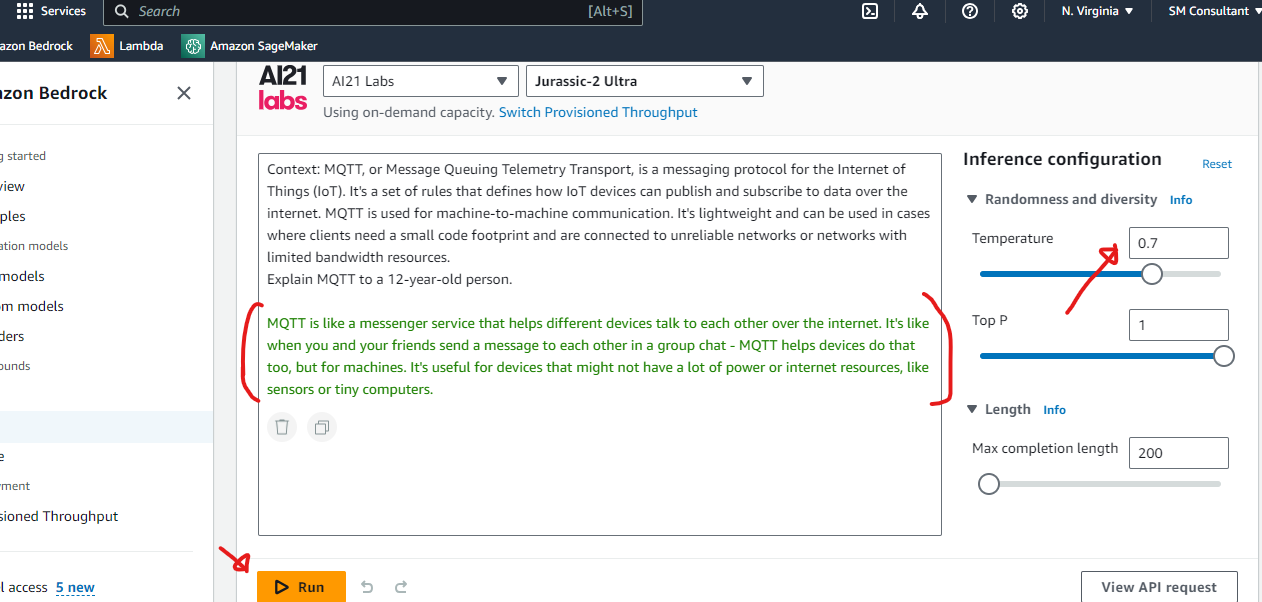
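The effect of temperature can be illustrated with a small, self-contained Python sketch. It shows a common way temperature is applied, by dividing the model's raw token scores by the temperature before a softmax. Whether a particular vendor's model applies it exactly this way is an assumption, and the scores below are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # A temperature of zero is usually treated as "always pick the top
        # token"; this sketch only covers the positive case.
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
LOGITS = [2.0, 1.0, 0.1]

if __name__ == "__main__":
    for temperature in (0.1, 0.7, 1.5):
        probs = softmax_with_temperature(LOGITS, temperature)
        print(temperature, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the highest-scoring token, which is why responses become repeatable. At higher temperatures the probabilities even out, so sampling produces more varied text.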

Now, in the playground, we will use the same text prompt to play with different models. First, we will explore the output of the Jurassic model from the vendor AI21 Labs. We input a text on MQTT, a messaging protocol used by IoT devices, and asked the model to explain MQTT to a 12-year-old person. The text shown in green is the text generated by the Jurassic-2 Ultra model with a temperature of 0.7.

If you click the "View API request" button in the bottom section, it shows the API request that the playground console sends to the foundation model.
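A request of this kind can also be sent from code. The following is a minimal Python sketch assuming boto3 and the Jurassic-2 Ultra model; the model ID `ai21.j2-ultra-v1`, the body fields `prompt`, `maxTokens`, and `temperature`, and the response path `completions[0].data.text` are assumptions based on AI21's request format and should be checked against what "View API request" shows for your model.

```python
import json

MODEL_ID = "ai21.j2-ultra-v1"  # assumed ID for Jurassic-2 Ultra


def build_request(prompt, temperature=0.7, max_tokens=300):
    """Build the JSON body for a text-generation request (assumed schema)."""
    return json.dumps(
        {"prompt": prompt, "maxTokens": max_tokens, "temperature": temperature}
    )


def extract_text(response_body):
    """Pull the generated text out of the model's JSON response (assumed shape)."""
    payload = json.loads(response_body)
    return payload["completions"][0]["data"]["text"]


def invoke(prompt, region="us-east-1"):
    """Send the prompt to Bedrock; needs boto3, credentials, and model access."""
    import boto3  # imported lazily so the helpers above run without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    return extract_text(response["body"].read())
```

Calling `invoke("Explain MQTT to a 12-year-old person.")` would return the generated text, provided access to the model has been granted in the chosen region.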

Response from Command model by Cohere:

Context: MQTT, or Message Queuing Telemetry Transport, is a messaging protocol for the Internet of Things (IoT). It's a set of rules that defines how IoT devices can publish and subscribe to data over the internet. MQTT is used for machine-to-machine communication. It's lightweight and can be used in cases where clients need a small code footprint and are connected to unreliable networks or networks with limited bandwidth resources.

Explain MQTT to a 12-year-old person.

MQTT is like a secret club for machines where they can all talk to each other and share cool stuff. You know how people use social media to post pictures and updates, and others can follow or see those posts if they're interested? MQTT is like that, but for machines.

Imagine you have a cool new gadget, like a smart watch or a smart home device. These gadgets can do amazing things, like track your steps, control your lights, or even order you a pizza! But for them to do those things, they need to talk to other machines. That's where MQTT comes in.

MQTT is like a special set of rules that these machines use to talk to each other over the internet. They can send messages to each other, kind of like sending emails or texts, but these messages are super fast and efficient. And just like you might subscribe to a celebrity's social media account to see all their posts, these machines can subscribe to messages that are important to them.

The best part is that MQTT is really lightweight, which means it doesn't use a lot of computer power or take up a lot of space. This is important because sometimes these machines don't have a lot of power or they're in places where the internet connection isn't very good. MQTT is designed to work even in these tough conditions.

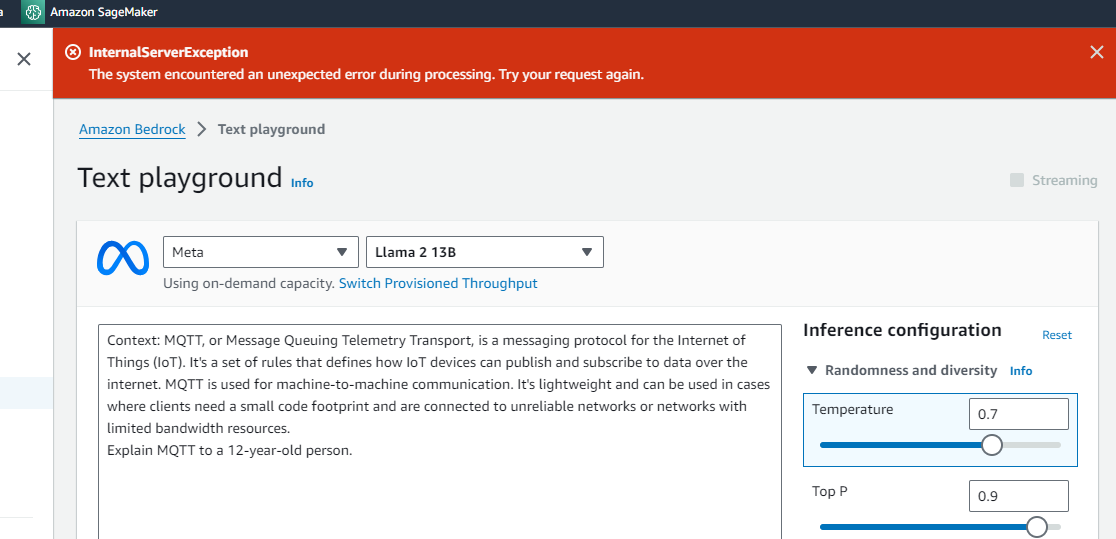

So, think of MQTT as a secret club where machines can talk, share messages, and work together to make our lives easier and more fun. It's like a behind-the-scenes hero that helps all our cool gadgets work together!I tried to use the LLama2 model from Meta but kept getting the error. It looks like Amazon is still trying to stabilize.

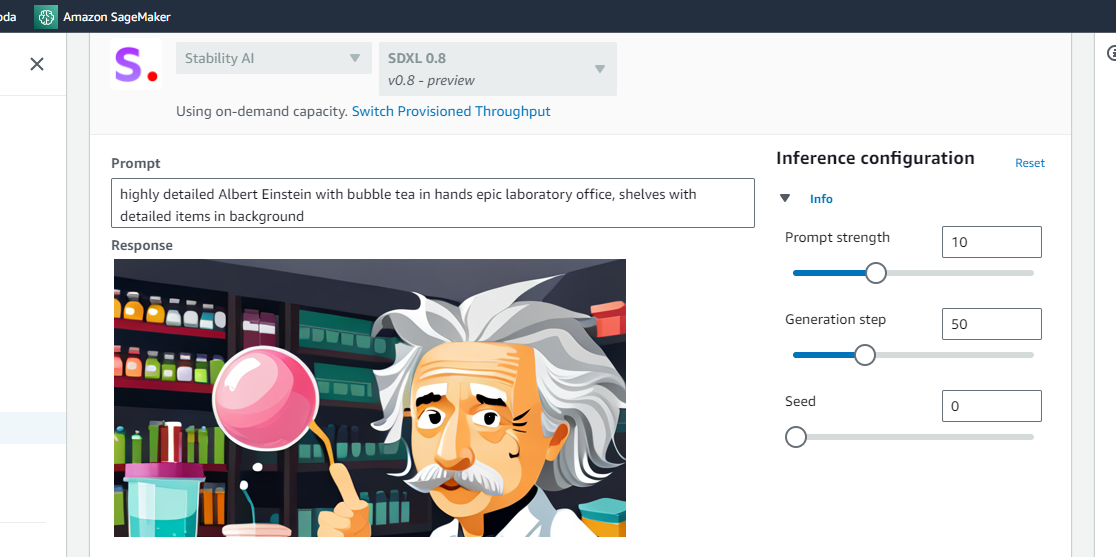

Next, we will explore the Stable Diffusion model, which uses a text-based prompt to generate images.
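As a rough sketch of what a text-to-image call involves, the Python below builds a request body for the Stable Diffusion XL model and decodes the image from the response. It assumes boto3 and that the response carries the image as base64 text under `artifacts[0].base64`; that response shape and the output file name are assumptions, while the body fields mirror the playground's request.

```python
import base64
import json

MODEL_ID = "stability.stable-diffusion-xl-v0"  # model ID used by the playground request


def build_body(prompt, cfg_scale=10, seed=0, steps=50):
    """Build the JSON body for a text-to-image request."""
    return json.dumps(
        {
            "text_prompts": [{"text": prompt}],
            "cfg_scale": cfg_scale,
            "seed": seed,
            "steps": steps,
        }
    )


def decode_image(response_body):
    """Return raw image bytes from the JSON response (assumed shape)."""
    payload = json.loads(response_body)
    return base64.b64decode(payload["artifacts"][0]["base64"])


def generate(prompt, out_path="image.png", region="us-east-1"):
    """Invoke the model and write the image to disk; needs boto3 and credentials."""
    import boto3  # imported lazily so the helpers above run without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    with open(out_path, "wb") as handle:
        handle.write(decode_image(response["body"].read()))
    return out_path
```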

The API request is:

aws bedrock-runtime invoke-model \
--model-id stability.stable-diffusion-xl-v0 \
--body "{\"text_prompts\":[{\"text\":\"highly detailed Albert Einstein with bubble tea in hands epic laboratory office, shelves with detailed items in background\"}],\"cfg_scale\":10,\"seed\":0,\"steps\":50}" \
--cli-binary-format raw-in-base64-out \
--region us-east-1 \
invoke-model-output.txt

Integration Strategies to the GEN AI Platform

As Amazon Bedrock operates as a serverless component, integration with this platform is genuinely serverless. The Foundation Model is made accessible through a REST API for downstream use, and AWS provides the Bedrock SDK client, which any application can utilize to connect to Amazon Bedrock.

The most effective method for integrating the AI platform involves leveraging AWS Lambda, where IAM policies can be configured to ensure proper access for querying the model. AWS SAM is the recommended approach for designing and constructing the Lambda that connects to Bedrock. In a subsequent article, I will provide a code snippet demonstrating a sample Lambda integration with Bedrock. If the Lambda is developed in Python, the boto3 module is necessary, and it can be added as a layer to the Lambda. Alternatively, SAM can be used to package boto3 while building the Lambda in Python. The Lambda can be fronted by Amazon API Gateway, creating an abstraction layer.
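As a rough illustration ahead of that article, here is a minimal Python sketch of what such a Lambda handler might look like behind API Gateway. It is not the author's implementation. It assumes an API Gateway proxy event with a JSON body containing a `prompt` field, the Jurassic-2 Ultra model ID and request schema, and an execution role that allows the `bedrock:InvokeModel` action. The client is injected so the handler logic can be exercised without AWS.

```python
import json

MODEL_ID = "ai21.j2-ultra-v1"  # assumed model; swap in the one you have access to


def make_handler(client):
    """Create a Lambda-style handler bound to a Bedrock runtime client."""

    def handler(event, context):
        # API Gateway proxy events carry the request payload as a JSON string.
        body = json.loads(event.get("body") or "{}")
        prompt = body.get("prompt")
        if not prompt:
            return {
                "statusCode": 400,
                "body": json.dumps({"error": "prompt is required"}),
            }
        response = client.invoke_model(
            modelId=MODEL_ID,
            body=json.dumps(
                {"prompt": prompt, "maxTokens": 300, "temperature": 0.7}
            ),
            contentType="application/json",
            accept="application/json",
        )
        payload = json.loads(response["body"].read())
        text = payload["completions"][0]["data"]["text"]  # assumed response shape
        return {"statusCode": 200, "body": json.dumps({"completion": text})}

    return handler


_client = None  # reused across warm invocations


def lambda_handler(event, context):
    """Entry point for Lambda; creates the boto3 client on first use."""
    global _client
    if _client is None:
        import boto3  # available in the runtime, as a layer, or packaged by SAM

        _client = boto3.client("bedrock-runtime")
    return make_handler(_client)(event, context)
```

Injecting the client keeps the request handling separate from AWS setup, so the handler can be unit-tested with a stub before it is deployed.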

An alternative integration approach is to develop a Python-based application using the LangChain or LlamaIndex framework, containerize it using Docker, and run it on Amazon EKS. This approach allows for the full potential of running applications on Kubernetes with high scalability and control while simultaneously connecting to Bedrock through the SageMaker interface. In my next article, I will walk you through more details on this integration approach.

Conclusion

In a nutshell, Amazon Bedrock provides a robust and scalable platform, with security and role-based access control in place through AWS IAM policies, for enterprises to leverage generative AI capabilities effectively and build their own AI platform. By offering easy access to leading foundation models and handling the complexities of infrastructure and maintenance, Bedrock allows organizations to focus on building impactful AI applications to solve their business use cases. The ability to securely customize models and integrate with AWS services ensures alignment with enterprise needs for governance, compliance, and accelerated development.

As generative AI continues its rapid evolution, Bedrock represents a flexible, future-proof way for organizations to tap into the technology and gain real business value. With thoughtful architecture using Bedrock's capabilities, enterprises can overcome the hurdles of AI adoption and start realizing the promise of generative intelligence across their digital transformation and offerings. The result is an AI-powered company that can drive higher levels of innovation, efficiency, and competitive differentiation.
