Blenderbot: A Pre-Eminent AI/ML Model for Chatbot Development

Installation, usage, and application of Blenderbot, a pre-trained AI/ML model specialized in conversational AI that can be extended to a variety of innovative use cases.


Table of Contents

- Introduction

- Installation of dependencies and importing of Blenderbot model

- Chatting with the model

- Conclusion

- References

Introduction

In artificial intelligence, Blenderbot falls into the category of conversational agents. According to Wikipedia, a conversational agent (CA) is a computer program designed to hold a conversation with a person. In other words, conversational agents are automated systems, often driven by artificial intelligence, that are designed to carry on natural-language conversations with people.

Since Blenderbot is the focus of this article, let's look at it more closely. Blenderbot is a language-generation model developed by Facebook AI. Given a context, it can express itself clearly and engage in meaningful dialogue in natural (human-readable) language.

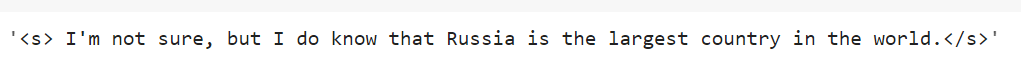

For instance, when asked a question like "Which is the world's largest country?", it can go on to discuss Russia.

Installation of Dependencies and Importing of Blenderbot Model

We will now install two libraries that are required to run Blenderbot.

Installing Transformers

We will use the Hugging Face Transformers library to download the pre-trained Blenderbot model.

```
# Notebook command (Jupyter/Colab); omit the leading "!" in a terminal
!pip install transformers
```

Installing PyTorch

We will also install the PyTorch deep learning library, because the Blenderbot model class we use is a PyTorch model and the tokenizer will return its output as PyTorch tensors.

If you want a PyTorch build that matches your own setup (for example, a different CUDA version or a CPU-only build), visit the PyTorch website and use the install command it generates for your configuration.

```
!pip3 install torch==1.9.1+cu111 torchvision==0.10.1+cu111 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html
```

Import Model
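Before importing the model, you can optionally confirm that both packages installed correctly. The following is a minimal sketch that uses only the Python standard library; the helper name `check_dependencies` is hypothetical and not part of Transformers or PyTorch.

```python
import importlib.metadata
import importlib.util
from typing import Dict, Iterable, Optional


def check_dependencies(packages: Iterable[str] = ("transformers", "torch")) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version.

    The value is None if the package cannot be found at all, and
    "unknown" if it is importable but has no distribution metadata.
    """
    report: Dict[str, Optional[str]] = {}
    for name in packages:
        # find_spec locates a top-level package without importing it
        if importlib.util.find_spec(name) is None:
            report[name] = None  # not installed in this environment
            continue
        try:
            report[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            report[name] = "unknown"
    return report


if __name__ == "__main__":
    for pkg, version in check_dependencies().items():
        print(f"{pkg}: {version if version else 'NOT INSTALLED'}")
```

If either package shows as not installed, rerun the corresponding install command above in the same environment (or notebook kernel) you plan to use.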

Here, we'll import the pre-trained Blenderbot model and download it from Hugging Face.

To start, we will import the tokenizer and the model class.

```python
from transformers import BlenderbotTokenizer, BlenderbotForConditionalGeneration
```

We will now download and set up the tokenizer and the Blenderbot model.

```python
# Hugging Face Hub ID in the form "<creator>/<model name>"
model_name = 'facebook/blenderbot-400M-distill'

# Download (or load from the local cache) the matching tokenizer and model weights
tokenizer = BlenderbotTokenizer.from_pretrained(model_name)
model = BlenderbotForConditionalGeneration.from_pretrained(model_name)
```

The model name used above is `facebook/blenderbot-400M-distill`. It starts with the name of the model's creator, in this case Facebook, followed by the model's own name, `blenderbot-400M-distill`.

We then instantiated both the tokenizer and the model from that name using `from_pretrained`, which downloads the pre-trained files from Hugging Face on first use and caches them locally.
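The `<creator>/<model name>` naming convention can be made concrete with a small helper. This is a hypothetical sketch for illustration only (`split_model_id` is not a Transformers function), and it assumes the simple two-part form used in this article.

```python
from typing import Tuple


def split_model_id(model_id: str) -> Tuple[str, str]:
    """Split a Hub ID such as 'facebook/blenderbot-400M-distill' into (creator, model name)."""
    creator, sep, name = model_id.partition("/")
    # Require exactly one '/' with non-empty text on both sides
    if not sep or not creator or not name or "/" in name:
        raise ValueError(f"expected '<creator>/<model name>', got {model_id!r}")
    return creator, name


if __name__ == "__main__":
    print(split_model_id("facebook/blenderbot-400M-distill"))
    # ('facebook', 'blenderbot-400M-distill')
```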

Chatting With the Model

Now we will start an actual conversation with our conversational agent.

First, we write an utterance: a statement used to start a conversation with the agent (like "Hey, Google").

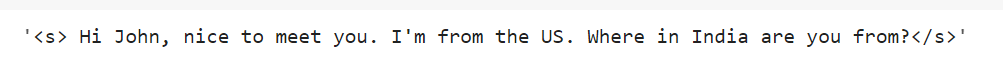

```python
# The opening message we send to the bot
utterance = "Hi Bot, My name is John. I am coming from India"
```

The utterance is then converted into tokens so that the model can process it.

```python
# Tokenize and return PyTorch tensors ("pt") that the model can consume
inputs = tokenizer(utterance, return_tensors="pt")
```

Here, we tokenized the utterance and returned the tokens as PyTorch tensors so that the Blenderbot model can process them.
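To see what tokenization actually produces, you can map the token IDs back to their subword strings. A minimal sketch: `show_tokens` is a hypothetical helper, while `convert_ids_to_tokens` is a standard method on Hugging Face tokenizers. The tokenizer is passed in as a parameter, so the function works with the one loaded earlier.

```python
from typing import List, Tuple


def show_tokens(tokenizer, utterance: str) -> List[Tuple[int, str]]:
    """Pair each token ID produced for `utterance` with its subword string."""
    # Without return_tensors, input_ids comes back as a plain list of ints
    ids = tokenizer(utterance)["input_ids"]
    tokens = tokenizer.convert_ids_to_tokens(ids)
    return list(zip(ids, tokens))
```

For example, `for token_id, token in show_tokens(tokenizer, utterance): print(token_id, token)` lists each subword piece of the greeting, including the end-of-sequence token the tokenizer appends.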

Next, we generate the conversational agent's reply.

```python
# Generate reply token IDs from the tokenized utterance
result = model.generate(**inputs)
```

Here, we asked the model to produce a reply to our tokenized utterance.

The result is still a PyTorch tensor of token IDs, so we now decode it back into human-readable natural language.

```python
# Decode the first (and only) generated sequence; skip_special_tokens drops markers such as <s> and </s>
print(tokenizer.decode(result[0], skip_special_tokens=True))
```

Here, we asked the tokenizer to decode the answer. Running the code above prints the agent's response.
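The three steps above (tokenize, generate, decode) can be wrapped into one reusable function. This is a minimal sketch: `reply` is a hypothetical helper name, and `max_new_tokens=60` is an illustrative value rather than a recommended setting. The tokenizer and model are passed in, so the function works with the objects created earlier.

```python
def reply(tokenizer, model, utterance: str, max_new_tokens: int = 60) -> str:
    """Return Blenderbot's decoded reply to a single utterance."""
    # 1. Tokenize the utterance into PyTorch tensors
    inputs = tokenizer(utterance, return_tensors="pt")
    # 2. Generate reply token IDs; max_new_tokens caps the length of the reply
    result = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # 3. Decode the first generated sequence, dropping special tokens
    return tokenizer.decode(result[0], skip_special_tokens=True)
```

With the `tokenizer` and `model` loaded earlier, `print(reply(tokenizer, model, "Which is the world's largest country?"))` prints the agent's answer. Each call is independent: the model only sees the text passed in that call.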

Conclusion

Unlike many other conversational agents, which effectively have goldfish memory, Blenderbot can retain information over the long term. This lets it carry on conversations that build on the provided context and closely resemble natural human speech.

The model records important information from a conversation in its long-term memory so that it can use it later, in conversations that may span days, weeks, or even months. The knowledge gathered about each person it talks with is kept separately, so new information from one conversation is not reused in another. Note that this long-term memory was introduced in BlenderBot 2.0; the `facebook/blenderbot-400M-distill` checkpoint used in this article is a distilled version of the original Blenderbot and does not remember anything between separate `generate` calls.
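A single `model.generate` call is stateless: it sees only the tokens passed in. As a rough, hypothetical stand-in for per-person memory (this is not how BlenderBot's own long-term memory works), you could keep a short history for each user yourself and feed it back in as context. In this sketch, `ConversationStore` is a made-up helper, and joining turns with two spaces is an assumption you should verify against your checkpoint.

```python
from collections import defaultdict, deque


class ConversationStore:
    """Hypothetical per-user history store; not part of Transformers or Blenderbot.

    Keeps only the most recent `max_turns` turns for each user, so one
    user's history is never mixed into another user's context.
    """

    def __init__(self, max_turns: int = 4, separator: str = "  "):
        self.max_turns = max_turns
        # Assumption: turns are joined with two spaces; adjust for your model
        self.separator = separator
        self._histories = defaultdict(lambda: deque(maxlen=self.max_turns))

    def add_turn(self, user_id: str, text: str) -> None:
        """Record one utterance (from the user or the bot) for this user."""
        self._histories[user_id].append(text.strip())

    def context(self, user_id: str) -> str:
        """Join this user's recent turns into a single input string."""
        # .get avoids creating an empty history for unknown users
        return self.separator.join(self._histories.get(user_id, ()))


if __name__ == "__main__":
    store = ConversationStore(max_turns=2)
    store.add_turn("john", "Hi Bot, My name is John.")
    store.add_turn("john", "Which is the world's largest country?")
    store.add_turn("mary", "Hello!")
    print(repr(store.context("john")))
    print(repr(store.context("mary")))
```

With the objects from the earlier sections, you might call `store.add_turn("john", utterance)`, pass `store.context("john")` to the tokenizer in place of the single utterance, and then record the decoded reply with another `add_turn`. Keep `max_turns` small, since the model accepts only a limited number of input tokens.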

All the code examples have been uploaded to a GitHub repository at the following URL, so you can experiment with them to suit your needs.

I hope you now have an intuitive understanding of the Blenderbot model and that these concepts help you build some truly valuable projects.

References

- Blenderbot - Official documentation

- Blenderbot - GitHub repo

Opinions expressed by DZone contributors are their own.
