Benchmarking AWS Graviton2 and gp3 Support for Apache Kafka

Using AWS's ACCP alongside Apache Kafka on Graviton2 instances, Kafka users can get a highly performant Kafka experience.

With the release of AWS’s Graviton2 (ARM) instances and gp3 disks, I immediately wanted to explore the potential opportunity for anyone using Apache Kafka. My team and I embarked on a journey to understand the changes required for Kafka users to be able to provision AWS Graviton2 instances paired with gp3 disks.

Previously, we’d only used Java 11 (OpenJDK) to run the Kafka service on x86 instances. As part of this change, we also shifted our internal environment to use Amazon Corretto. Amazon Corretto is used internally by AWS; it includes built-in performance enhancements and security fixes, and is compatible with the Java SE standard. Furthermore, Amazon Corretto reportedly has a performance benefit over other OpenJDK distributions on the ARM architecture, especially for network-intensive applications such as Kafka.

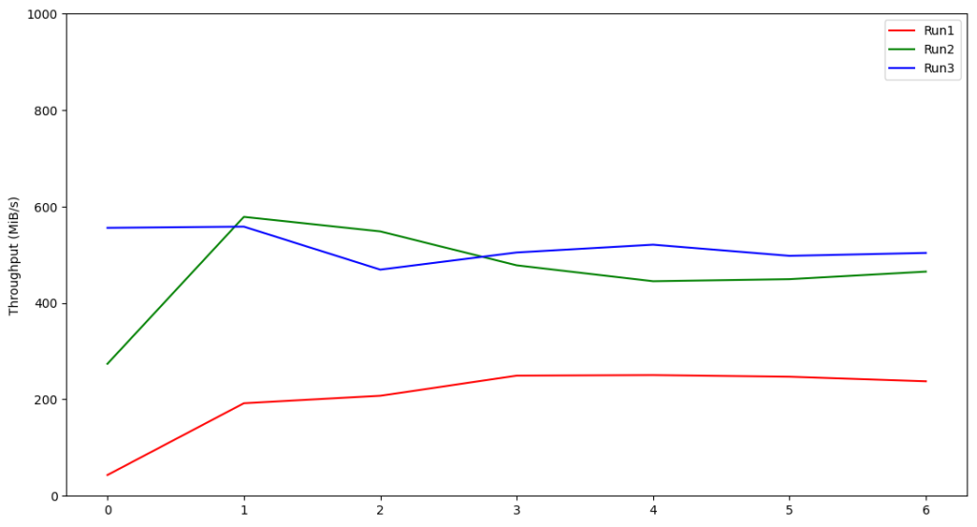

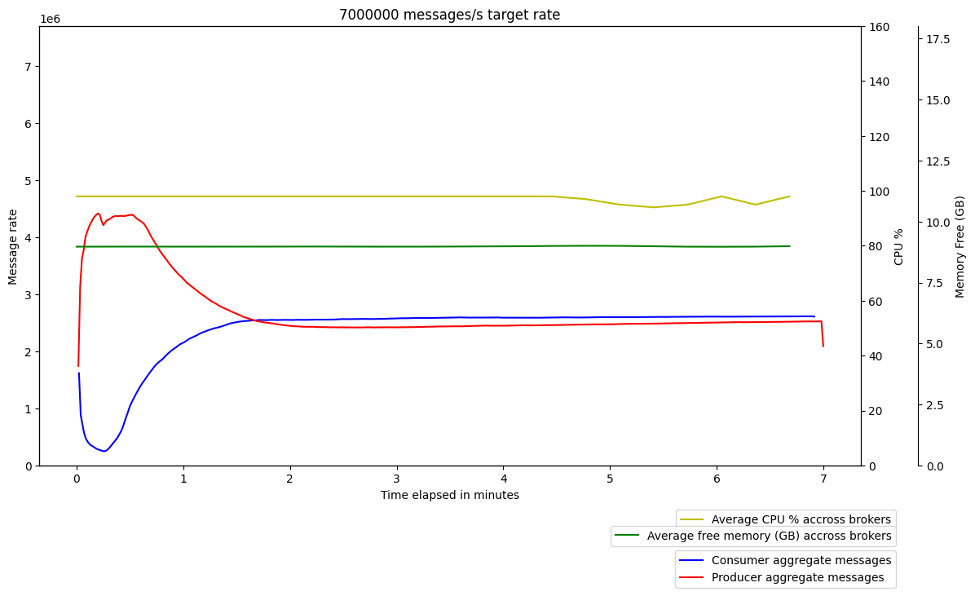

Graviton2/gp3 vs. x86/gp2 Benchmarking

For the benchmarking, we decided to compare instances that have similar specifications (in terms of memory, network bandwidth, virtual CPU, and purpose). The majority of the clusters on our managed Kafka platform use r5 memory-optimized instances, so it makes sense for us to compare one of the r5 instances against its r6g instance (Graviton2) counterpart: r5.large vs. r6g.large. To ensure that our results weren’t distorted by any bottleneck effects, we gave ourselves plenty of room to move in terms of the gp2 disk size (500 GB), and the gp3 disk provisioned IOPS and throughput (16000 IOPS, 1000 MiB/s). We also monitored the IOWait just to make sure that we were not hitting disk bottlenecks. We used the latest Kafka version at the time (Kafka 2.6.1), with client ⇔ broker encryption enabled.

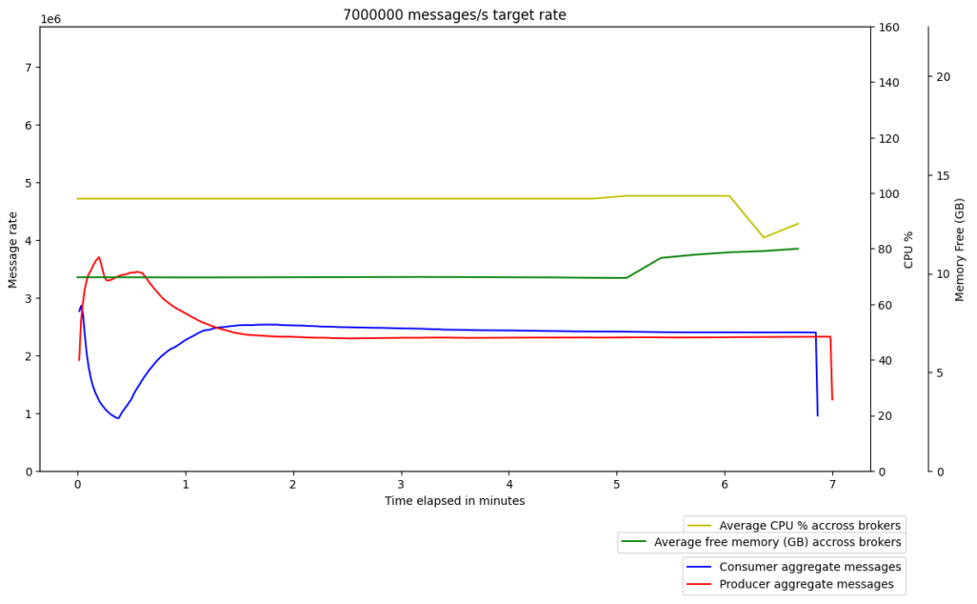

The hope here was that everything would just work, and we would see immediate performance benefits from the AWS Graviton2/gp3 instances relative to the x86/gp2 instances. However, these initial benchmarking results weren’t what we’d hoped at all.

Graviton2 – gp3

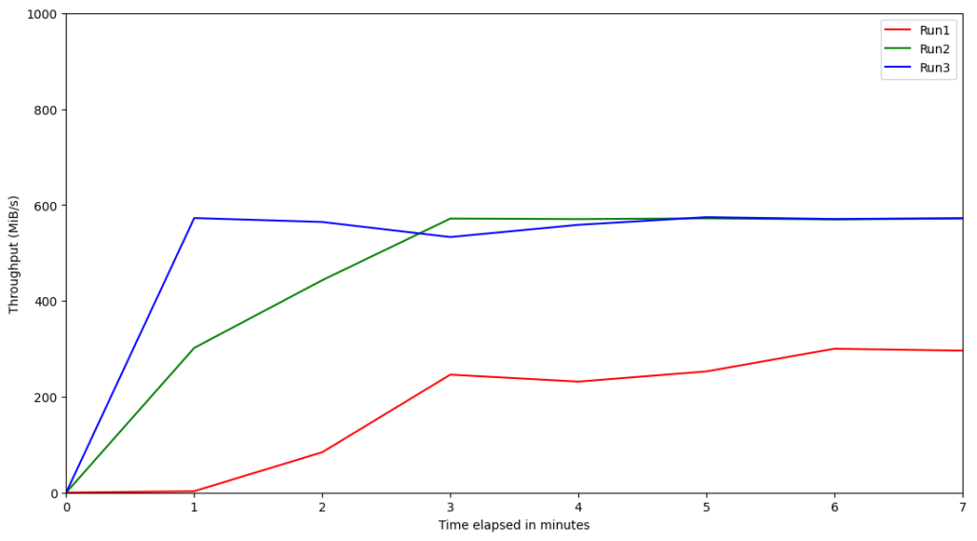

x86 – gp2

We repeated the experiments to make sure this wasn’t just noise, but the results were quite consistent; the performance of the Graviton2/gp3 instances was significantly lower than their x86/gp2 counterparts, with the former’s message throughput being almost half of the latter’s. So, we dived deeper in order to identify the cause of the drop in performance.

We developed various hypotheses as to the cause of the performance issues, implemented changes to address each one, and then ran our benchmarking again to assess their impact. We kept all other parameters consistent to make sure we were isolating the effects of each potential issue. Some of our hypotheses about potential causes of the performance degradation included:

- The difference in the JDK being used (in x86/gp2 we used OpenJDK, while in Graviton2/gp3 we used Corretto). However, further benchmarking showed there were no significant differences between OpenJDK and Corretto performance-wise.

- The difference in the disk type used (gp2 vs. gp3). However, after varying the processor/disk combinations in our benchmarking, we saw no significant differences.

- An anomaly for a specific instance type. We tried changing the instance size, using r5.xlarge and r6g.xlarge instances instead of r5.large and r6g.large, but x86 still demonstrated better message throughput.

Given that Graviton2 instances are also cheaper, we additionally tried adding a node to the Graviton2 Kafka cluster to bring the two clusters to cost parity. This gave Graviton2 a slight boost, but the x86 cluster still delivered better performance despite having fewer nodes.

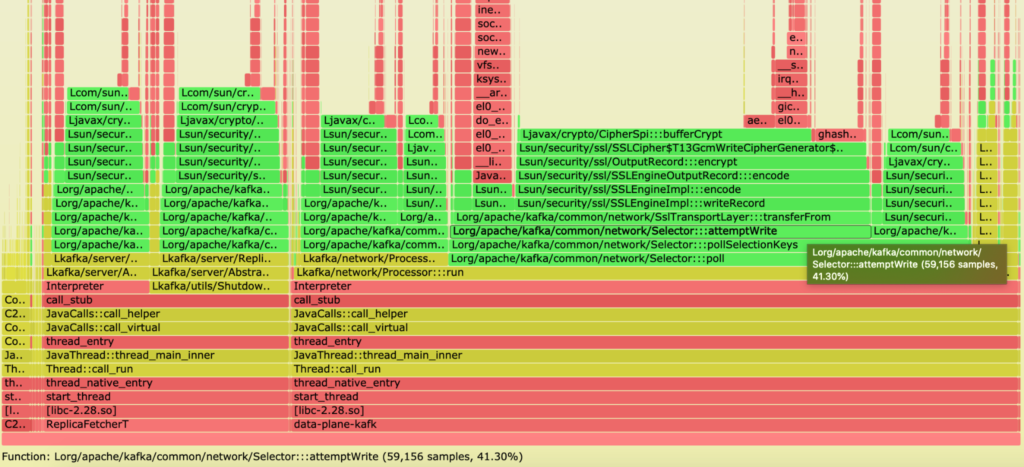

Since none of these hypotheses seemed to point to the source of the issue, we decided to profile the Kafka application as it was running on both instance kinds to locate the bottleneck. Following guidance from Brendan Gregg’s 2016 talk at JavaOne, we used flame graphs to perform our CPU profiling. Based on the results, we had a strong suspicion that crypto/SSL was the biggest blocker for us.

Graviton2 – gp3

x86 – gp2

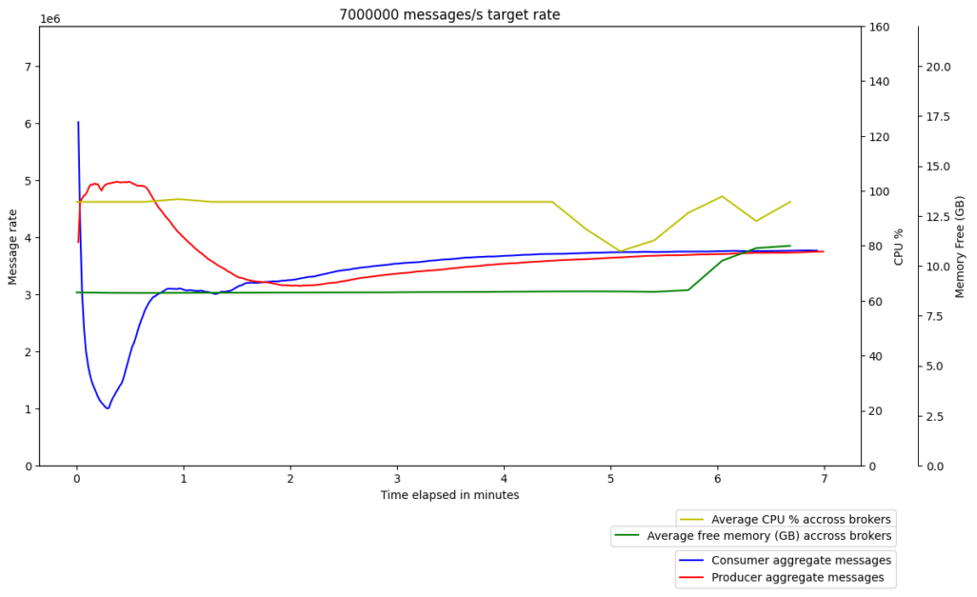

Now that we’d identified a likely cause of the problem, we ran the experiment with the same setup, except this time with client ⇔ broker encryption disabled. Finally, we saw the performance of Kafka on Graviton2/gp3 eclipse that of its x86/gp2 counterpart in several repeated trials.

Graviton2 – gp3

x86 – gp2

We raised a GitHub issue to see if others had experienced similar issues. After some thorough discussions, we discovered that some OpenJDK optimization/intrinsic support for AES counter mode is missing for the ARM architecture used on Graviton2 instances. As a result, a JDK enhancement issue was raised upstream to track this issue.

So, we’d uncovered the source of the problem, but we still had no immediately available solution to the performance issues on ARM with client ⇔ broker encryption enabled. Fortunately, we found the Amazon Corretto Crypto Provider (ACCP). Quoting from the blog:

“ACCP implements the standard Java Cryptography Architecture (JCA) interfaces and replaces the default Java cryptographic implementations with those provided by libcrypto from the OpenSSL project. ACCP allows you to take full advantage of assembly-level and CPU-level performance tuning, to gain significant cost reduction, latency reduction, and higher throughput across multiple services and products, as shown in the examples below.”

This was a welcome feature because the native compilation of libcrypto allowed us to address the absence of intrinsic support for AES counter mode.

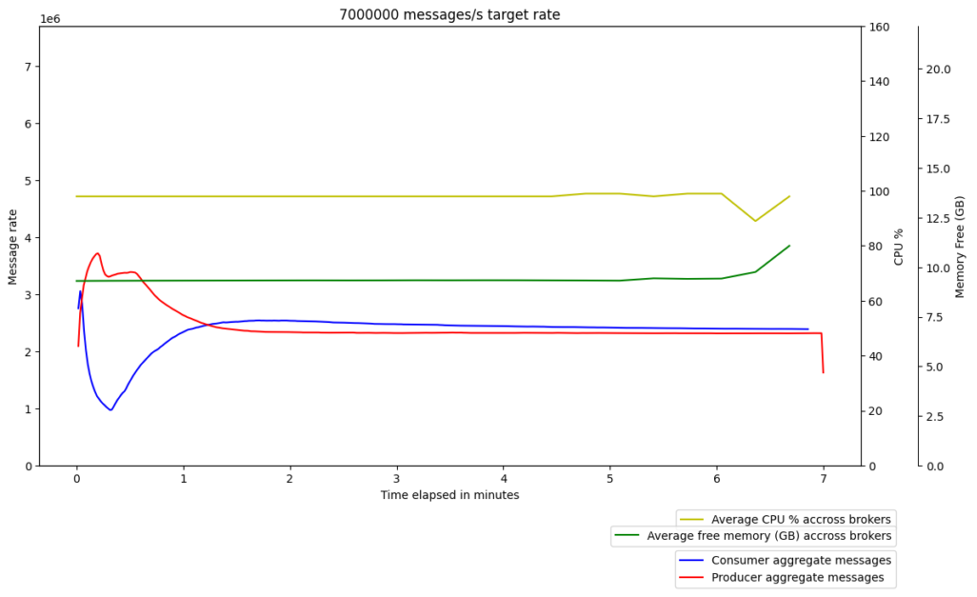

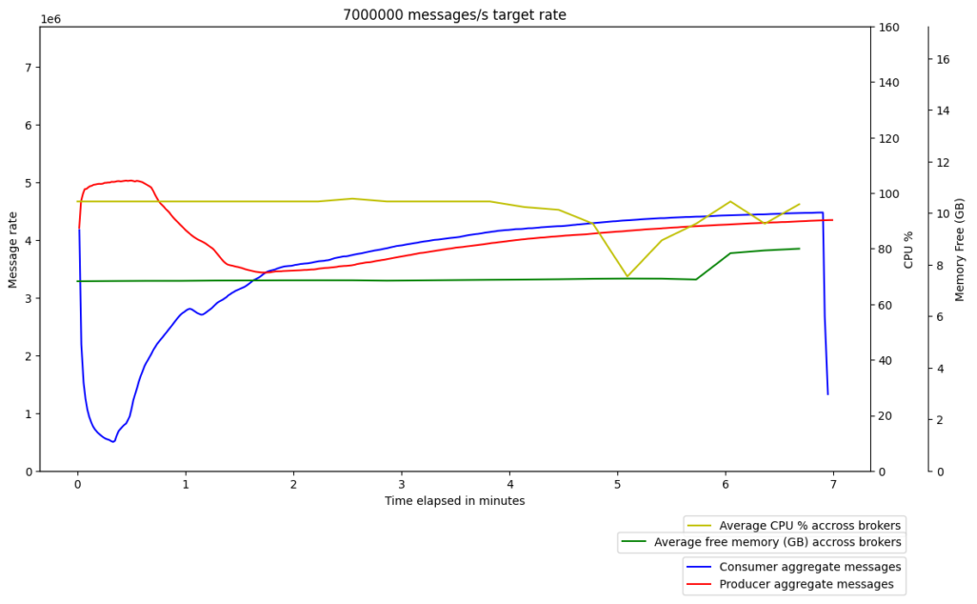

We, therefore, incorporated Amazon Corretto Crypto Provider (ACCP) in our setup when running Kafka. Following this change, we finally saw the performance of Kafka on Graviton2 surpass the performance on x86, even with client ⇔ broker encryption enabled. Interestingly, we also saw a slight improvement in the performance of Kafka on x86 with ACCP, which made Graviton2’s superior performance all the more promising.

x86 – gp2

Graviton2 – gp3

It is worth noting that if our JDK enhancement issue is addressed and the upgraded JDK version is adopted, then ACCP will no longer be required to avoid the performance issues that we uncovered. We also chose to upgrade the underlying OpenSSL version used to compile ACCP to 1.1.1k (instead of the default 1.1.1j). We did this because of CVEs (e.g., CVE-2021-3450) identified in the default version and addressed in 1.1.1k.

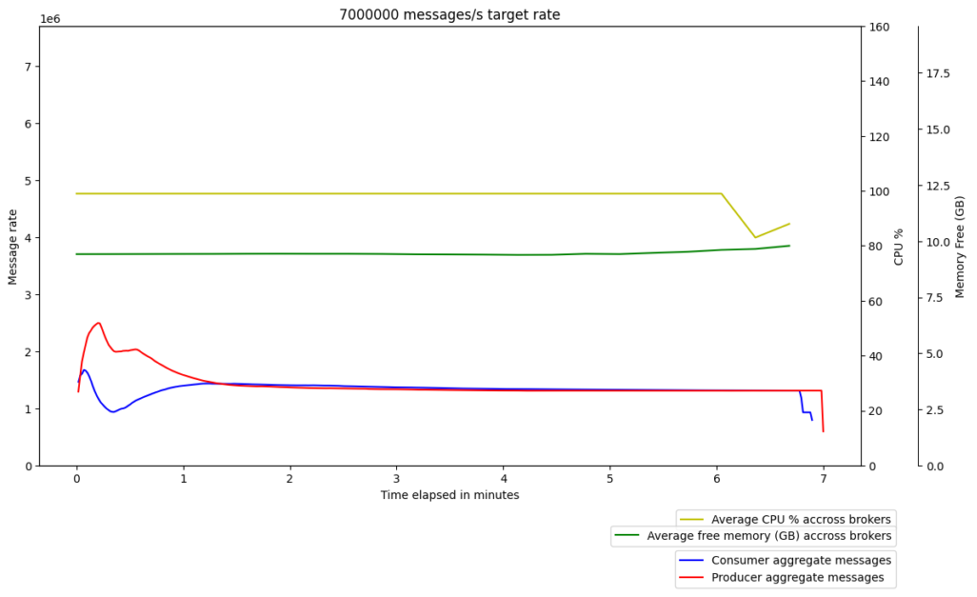

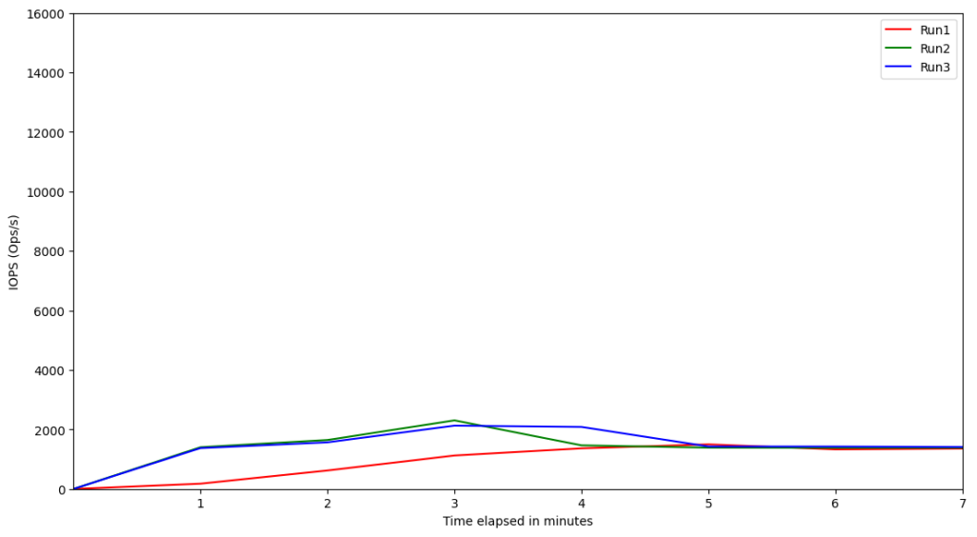

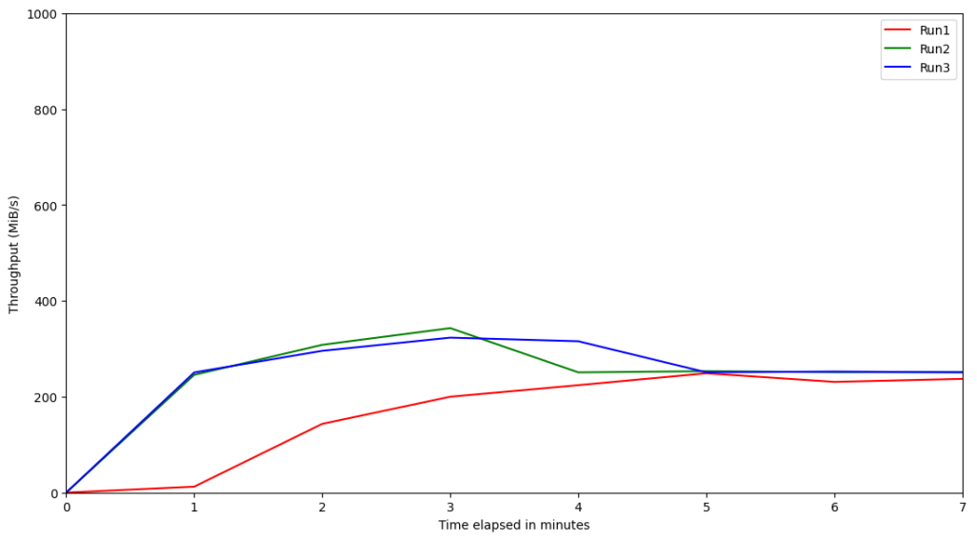

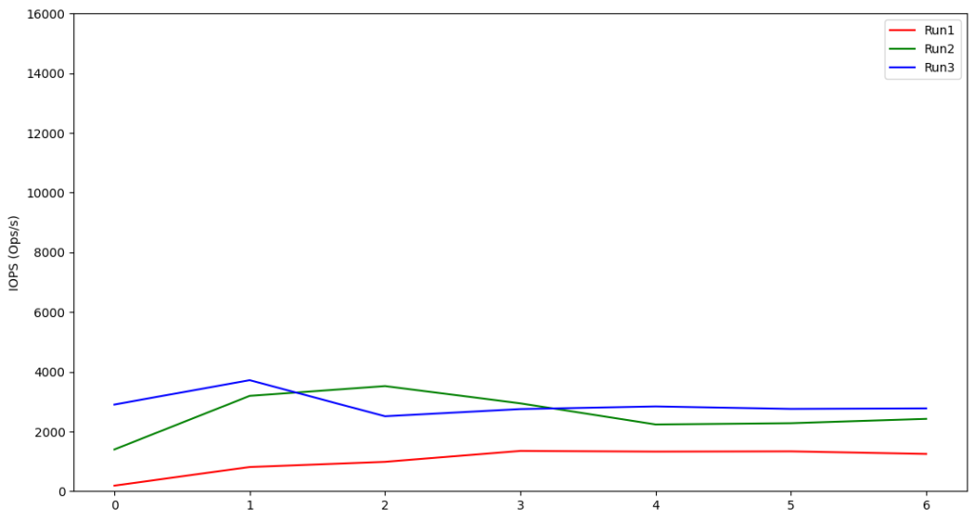

Provisioned IOPS and Throughput

With our performance issues with Kafka on Graviton2 successfully navigated, we also needed to find a suitable gp3 setup. gp3 disks are parameterized by provisioned IOPS and throughput, and provisioning either one above the included baseline costs extra. By default, a gp3 disk includes 3,000 IOPS and 125 MiB/s of throughput. This differs from gp2 disks, where the IOPS figure is derived from the disk size and the throughput is capped at 250 MiB/s.
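To make the gp2/gp3 difference concrete, here is a minimal sketch of the baseline arithmetic. It assumes AWS's published limits for gp2 (3 IOPS per GiB, with a floor of 100 and a ceiling of 16,000) and treats the 500 GB benchmark disk as 500 GiB. The function names are hypothetical and exist only for illustration.

```python
# Baseline figures for EBS general-purpose volumes. These are assumptions
# taken from AWS's published limits, not values measured in this benchmark.
GP2_IOPS_PER_GIB = 3
GP2_MIN_IOPS = 100
GP2_MAX_IOPS = 16_000
GP3_INCLUDED_IOPS = 3_000
GP3_INCLUDED_THROUGHPUT_MIB_S = 125


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume of the given size (burst credits ignored)."""
    return max(GP2_MIN_IOPS, min(GP2_MAX_IOPS, GP2_IOPS_PER_GIB * size_gib))


def gp3_extra_provisioned(iops: int, throughput_mib_s: int) -> tuple:
    """IOPS and MiB/s provisioned beyond what a gp3 volume includes by default."""
    extra_iops = max(0, iops - GP3_INCLUDED_IOPS)
    extra_throughput = max(0, throughput_mib_s - GP3_INCLUDED_THROUGHPUT_MIB_S)
    return extra_iops, extra_throughput


if __name__ == "__main__":
    # The gp2 disk used in the benchmark (size assumed to be 500 GiB).
    print(gp2_baseline_iops(500))
    # The deliberately over-provisioned gp3 disk used in the benchmark.
    print(gp3_extra_provisioned(16_000, 1_000))
```

Under these assumptions, the gp2 disk's baseline IOPS comes purely from its size, while the gp3 disk's IOPS and throughput are chosen independently, and only the portion above the included baseline is billed separately.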

During experimentation, we found that running benchmarks with a message size of 1 MB generated the most stress on the attached disk. 1 MB messages are within the message size limit in the default Kafka configuration; hence, we used this message size in the subsequent experiments to determine the maximum required provisioned IOPS and throughput configuration.

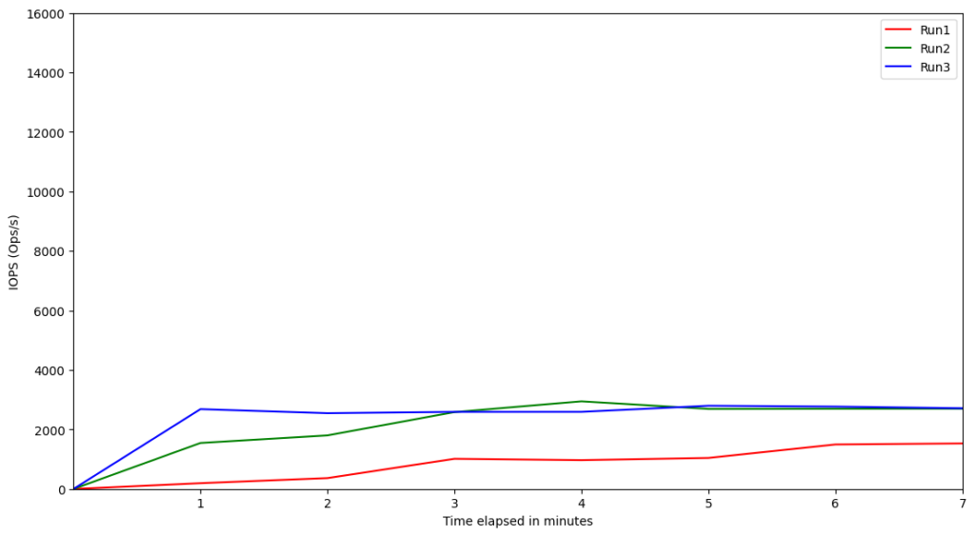

We provisioned the instances using gp3 disks with the maximum IOPS and throughput configurable and then ran the experiments. We scraped the AWS EBS metrics using the AWS CLI, and then plotted the graph to see the highest IOPS and throughput that was required for each instance. The following are some samples of the IOPS and throughput graphs:
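The conversion from scraped metrics to plotted rates can be sketched roughly as follows. This assumes each datapoint holds CloudWatch `Sum` statistics for the EBS metrics `VolumeReadOps`, `VolumeWriteOps`, `VolumeReadBytes`, and `VolumeWriteBytes` over a fixed period. The dictionary layout and function names are hypothetical, not the actual tooling used for the benchmark.

```python
from typing import Dict, List, Tuple

MIB = 1024 * 1024


def to_rates(sums: Dict[str, float], period_seconds: int) -> Tuple[float, float]:
    """Convert per-period sums into (IOPS, throughput in MiB/s)."""
    ops = sums["VolumeReadOps"] + sums["VolumeWriteOps"]
    nbytes = sums["VolumeReadBytes"] + sums["VolumeWriteBytes"]
    return ops / period_seconds, nbytes / period_seconds / MIB


def peak_rates(datapoints: List[Dict[str, float]], period_seconds: int) -> Tuple[float, float]:
    """Highest IOPS and highest throughput seen across all datapoints.

    The two peaks are taken independently and may come from different periods.
    """
    if not datapoints:
        raise ValueError("no datapoints to summarize")
    rates = [to_rates(dp, period_seconds) for dp in datapoints]
    return max(r[0] for r in rates), max(r[1] for r in rates)


if __name__ == "__main__":
    # Made-up sample values, purely to show the call shape.
    sample = [
        {"VolumeReadOps": 0, "VolumeWriteOps": 120_000,
         "VolumeReadBytes": 0, "VolumeWriteBytes": 300 * MIB * 60},
        {"VolumeReadOps": 30_000, "VolumeWriteOps": 240_000,
         "VolumeReadBytes": 0, "VolumeWriteBytes": 600 * MIB * 60},
    ]
    print(peak_rates(sample, period_seconds=60))
```

Dividing by the period length matters here: CloudWatch sums are totals per period, so a 60-second datapoint must be divided by 60 before it can be compared against provisioned IOPS or MiB/s.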

r6g.large

r6g.xlarge

r6g.2xlarge

Performance of AWS Graviton2 With gp3

Based on these results, production-tier Graviton2 instances running Kafka do not need IOPS provisioned much higher than 4,500, or throughput above 600 MiB/s. IOPS and throughput are interrelated, so the interesting question is why throughput on the benchmarked Graviton2 instances appears to be capped when the disks should theoretically be able to go higher. The reason for the ~600 MiB/s cap is EBS bandwidth: according to the documentation on Amazon EC2 instance types, these instances have an EBS bandwidth of up to 4,750 Mbps (~594 MB/s). One caveat applies to the relationship between IOPS and throughput: the two are strongly correlated unless Kafka is explicitly forcing the OS to flush data to disk.
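The bandwidth arithmetic behind this cap can be sketched as below. It assumes the documented 4,750 Mbps figure is in decimal megabits per second, and the helper names are hypothetical. The point is that provisioning gp3 throughput above the instance's EBS bandwidth buys nothing, because the lower of the two limits wins.

```python
MIB = 1024 * 1024


def mbps_to_mb_per_s(mbps: float) -> float:
    """Megabits per second to decimal megabytes per second."""
    return mbps / 8


def mbps_to_mib_per_s(mbps: float) -> float:
    """Megabits per second (decimal) to mebibytes per second (binary)."""
    return mbps * 1_000_000 / 8 / MIB


def effective_throughput_mib_s(provisioned_mib_s: float, instance_ebs_mbps: float) -> float:
    """Usable disk throughput: the lower of the volume and instance limits."""
    return min(provisioned_mib_s, mbps_to_mib_per_s(instance_ebs_mbps))


if __name__ == "__main__":
    ebs_mbps = 4_750  # EBS bandwidth quoted for the benchmarked instances
    print(mbps_to_mb_per_s(ebs_mbps))                       # decimal MB/s
    print(round(mbps_to_mib_per_s(ebs_mbps), 1))            # binary MiB/s
    print(round(effective_throughput_mib_s(1_000, ebs_mbps), 1))
```

With these assumptions, 4,750 Mbps works out to 593.75 MB/s, or roughly 566 MiB/s in binary units. That is in the same range as the plateau seen in the graphs and well below the 1,000 MiB/s provisioned on the gp3 disks.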

Conclusion

By leveraging ACCP alongside Apache Kafka on Graviton2 instances, Kafka users can achieve the highly performant Kafka experience they’re looking for. After a little further experimentation to determine the optimal provisioned IOPS and throughput, users will be able to realize the full benefits of switching to gp3 disks.
