A Practical Guide to Augmenting LLM Models With Function Calling

Learn how to build a more dynamic AI application using a low-code tool that integrates external functions with an OpenAI LLM.

What Is Function Calling?

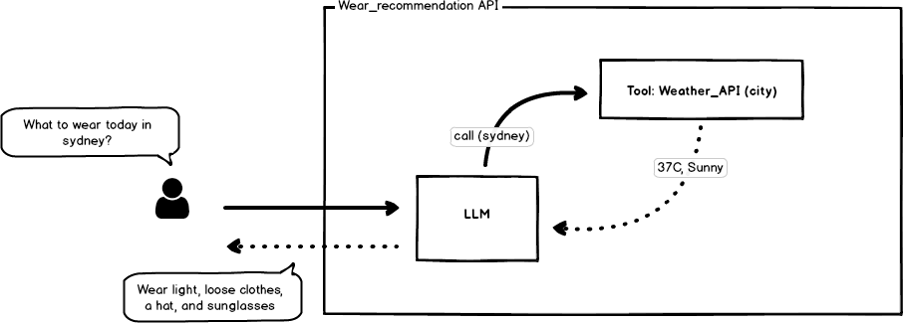

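LLMs are powerful, but they’re limited to the data they were trained on. Enter function calling: a feature that extends an LLM’s capabilities by letting it request calls to external functions that gather information it does not have. The model does not run the function itself; it returns the function name and arguments, the application executes the call, and the result is passed back to the model. This means we can teach the AI how to fetch specific, real-world data, such as the current weather, and use it to provide more relevant answers.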
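To make the idea concrete, here is a minimal sketch of a function definition and of how an application might handle the model's request to call it. The definition follows the JSON-schema style of OpenAI's "tools" parameter; the function name, its description, and the fixed weather values are assumptions made for illustration, not part of the demo.

```javascript
// Tool definition in the JSON-schema style used by OpenAI's "tools" parameter.
// The name and description are hypothetical.
const weatherTool = {
  type: "function",
  function: {
    name: "get_current_weather",
    description: "Get the current weather for a city",
    parameters: {
      type: "object",
      properties: {
        city: { type: "string", description: "City name, e.g. Sydney" },
      },
      required: ["city"],
    },
  },
};

// Stand-in for a real weather lookup (hypothetical, returns fixed data).
function getCurrentWeather({ city }) {
  return { city, temperatureC: 18, precipitationMm: 0, windKph: 12 };
}

// The model replies with a function name plus a JSON string of arguments.
// The application, not the model, parses the arguments and runs the function.
function handleToolCall(toolCall) {
  if (toolCall.function.name !== weatherTool.function.name) {
    throw new Error(`Unknown function: ${toolCall.function.name}`);
  }
  const args = JSON.parse(toolCall.function.arguments);
  return getCurrentWeather(args);
}

// Example of the shape a model-issued tool call takes.
const exampleCall = {
  id: "call_1",
  type: "function",
  function: { name: "get_current_weather", arguments: '{"city":"Sydney"}' },
};
const toolResult = handleToolCall(exampleCall);
```

The key point is the division of labor: the model only decides which function to call and with what arguments, while the application performs the call and returns the result.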

Use Case

“What should I wear today?” The answer varies based on the weather, but now it can be automated. Imagine an API that recommends clothing based on the forecast. In this demo, we used Kumologica, a low-code tool, to integrate OpenAI with a weather service. The goal: to enable OpenAI to use real-time weather data and offer on-point clothing advice.

Design

Our focus was on keeping things simple and effective. Using Kumologica, we connected OpenAI’s function-calling capability to a custom weather-based wardrobe API. When OpenAI encounters a query about what to wear, function calling activates, fetching specific data from an external weather API. This API gathers weather details — temperature, precipitation, wind — and then interprets them to provide clothing recommendations.

Implementation

Kumologica’s low-code setup made the integration straightforward. We designed a flow that takes a city name as input, triggers a weather API call, and relays the data to OpenAI through function calling. The result? A tailored clothing suggestion: layers for chilly weather, light attire for warmth, and rain gear when it’s wet. The entire process is quick and simple.
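The three outcomes mentioned above can be pictured with a small rule-based sketch. In the demo the model writes the advice itself from the weather data, so this is only an illustration of the kind of mapping to expect; the thresholds, field names, and the fallback label are assumptions.

```javascript
// Illustrative only: thresholds and field names are assumptions, not values
// taken from the demo. In the demo, the model produces the advice itself.
function suggestClothing({ temperatureC, precipitationMm }) {
  const items = [];
  if (temperatureC < 12) {
    items.push("layers"); // chilly weather
  } else if (temperatureC >= 22) {
    items.push("light attire"); // warm weather
  } else {
    items.push("regular clothing"); // hypothetical fallback for mild weather
  }
  if (precipitationMm > 0) {
    items.push("rain gear"); // wet weather
  }
  return items;
}
```

A deterministic stand-in like this can also be handy for checking that the model's answers are in the expected range during testing.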

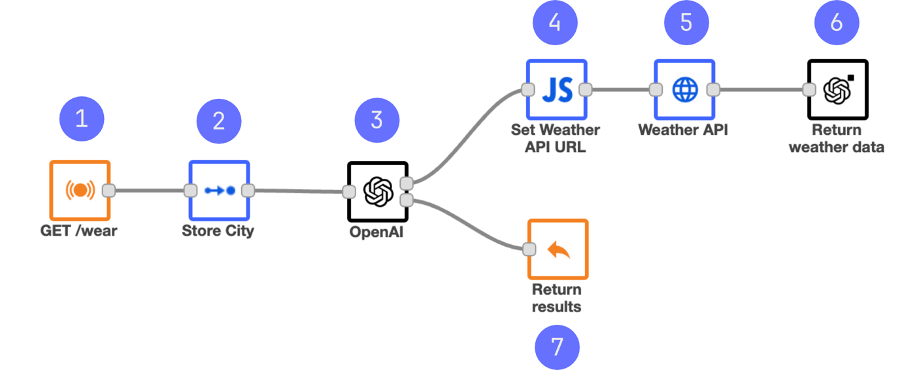

The following diagram illustrates the complete implementation of the API, detailing the flow of data and the steps involved in generating personalized clothing recommendations based on weather conditions.

Here’s an explanation of the components:

1. GET /wear (EventListener)

This represents an HTTP GET request endpoint. It takes a query parameter (“city”) to initiate the flow.

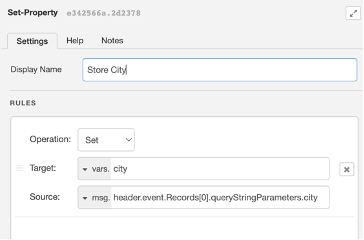

2. Store City (Set-Property)

This stores the city name from the incoming request.

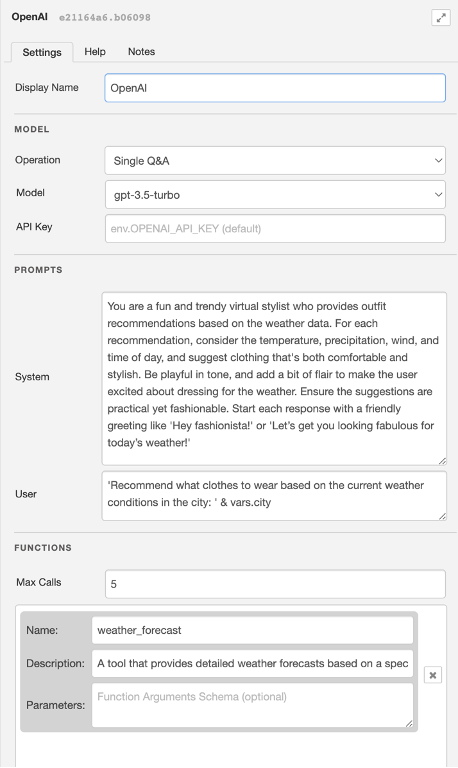

3. OpenAI (OpenAI)

This calls the OpenAI API to generate personalized clothing recommendations based on the weather for the given city.

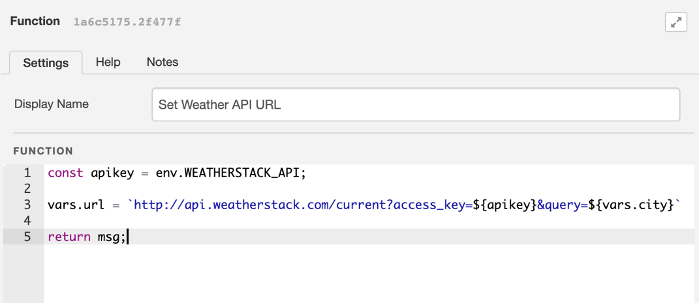

4. Set Weather API URL (Function)

This is a JavaScript function that dynamically constructs the URL for querying a weather API based on the stored city name.

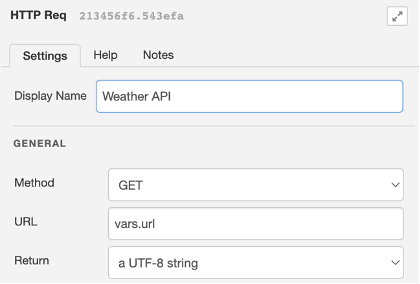

5. Weather API (HTTP Request)

This calls a third-party weather service API (Weatherstack) to retrieve current weather data.

6. Return Weather Data (OpenAIToolEnd)

This passes the weather data back to the OpenAI node (step 3) so it can complete the request.

7. Return Results (EventListenerEnd)

This sends the final clothing recommendations back to the user as a response to their initial request.
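For readers who prefer code to diagrams, the steps above can be sketched in plain JavaScript. This is not the Kumologica flow itself: the model and the weather service are replaced by injected stubs so the sketch runs offline, the message shapes are simplified, and the Weatherstack endpoint and parameter names are assumptions to be checked against the provider's documentation.

```javascript
// Step 4: build the weather URL from the stored city name.
// Assumption: Weatherstack's "current" endpoint with access_key and query
// parameters; check the provider's documentation for your plan.
function buildWeatherUrl(city, apiKey) {
  const url = new URL("http://api.weatherstack.com/current");
  url.searchParams.set("access_key", apiKey);
  url.searchParams.set("query", city);
  return url.toString();
}

// Steps 3, 5, 6 and 7 as one round trip. `askModel` and `fetchJson` are
// injected so the sketch runs without network access or API keys.
// Simplified message shapes: the real Chat Completions API also expects the
// assistant's tool-call message and a tool_call_id on the tool message.
async function recommendClothing(city, { askModel, fetchJson, apiKey }) {
  const messages = [
    { role: "user", content: `What should I wear today in ${city}?` },
  ];

  // Step 3: first model call; the model may answer directly or request the tool.
  const first = await askModel(messages);
  if (!first.toolCall) {
    return first.content;
  }

  // Steps 4 and 5: build the URL and call the weather service.
  const weather = await fetchJson(buildWeatherUrl(city, apiKey));

  // Step 6: hand the tool result back to the model.
  messages.push({ role: "tool", content: JSON.stringify(weather) });
  const second = await askModel(messages);

  // Step 7: return the final recommendation to the caller.
  return second.content;
}

// Hypothetical stubs standing in for OpenAI and the weather service.
const fakeModel = async (messages) =>
  messages.some((m) => m.role === "tool")
    ? { content: "Light layers and a rain jacket." }
    : { toolCall: { name: "get_current_weather" } };
const fakeFetchJson = async () => ({ temperature: 17, precip: 1.2 });
```

Injecting the model and the HTTP client keeps the control flow visible and testable: the first model call triggers the tool, and the second call turns the tool result into the final answer.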

Try It

1. Install Kumologica

npm install -g @kumologica/sdk

2. Clone the Project Repository

git clone https://github.com/KumologicaHQ/demo-weather-wear

cd demo-weather-wear

3. Install the Dependencies

npm install

4. Set Environment Variables

Create a .env file in the root directory and configure the following variables:

OPENAI_API_KEY=<Your OpenAI API Key>

WEATHERSTACK_API=<Your Weatherstack API Key>

5. Run Locally

Open Kumologica Designer:

kl open .

To test the API locally, use the Designer TestCase or an external tool such as Postman or curl to make a request:

GET http://127.0.0.1:1880/wear?city=sydney
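If you would rather script the request than use Postman or curl, a small Node.js client along these lines should work. It assumes Node.js 18 or later (for the built-in fetch) and that the flow is running on the local address given above. The response is read as plain text because the exact response format is not described here, and the function name is hypothetical.

```javascript
// Assumptions: Node.js 18+ (global fetch) and the flow running locally.
// `fetchImpl` can be replaced with a stub for testing.
async function askWhatToWear(city, fetchImpl = fetch) {
  const url = new URL("http://127.0.0.1:1880/wear");
  url.searchParams.set("city", city);
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  // Read the body as text; the response format is not specified in this guide.
  return response.text();
}

// Usage (requires the flow to be running):
// askWhatToWear("sydney").then(console.log).catch(console.error);
```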

Wrap Up

Function calling extends what LLMs can do by letting them work with real-world data in real time.

The use case presented here can be extended by defining parameters for each function, which lets the LLM extract argument values from the prompt, and by registering multiple functions for the model to choose from. This paves the way for even more powerful and customized AI-driven solutions.
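As a rough sketch of that extension, the snippet below registers two functions with explicit parameters and runs whichever calls the model requests. Both handlers are hypothetical stubs returning fixed data, and the second function (a forecast lookup) is invented purely for illustration. The definitions follow the JSON-schema style of OpenAI's "tools" parameter.

```javascript
// Registry of callable functions. Both handlers are hypothetical stubs that
// return fixed data so the sketch runs offline.
const toolRegistry = new Map([
  ["get_current_weather", ({ city }) => ({ city, temperatureC: 18 })],
  ["get_forecast", ({ city, days }) => ({ city, days, summary: "showers" })],
]);

// Matching definitions with explicit parameters, which is what lets the
// model extract argument values such as the city from the prompt.
const toolDefinitions = [
  {
    type: "function",
    function: {
      name: "get_current_weather",
      description: "Current weather for a city",
      parameters: {
        type: "object",
        properties: { city: { type: "string" } },
        required: ["city"],
      },
    },
  },
  {
    type: "function",
    function: {
      name: "get_forecast",
      description: "Forecast for a city over a number of days",
      parameters: {
        type: "object",
        properties: {
          city: { type: "string" },
          days: { type: "integer", minimum: 1 },
        },
        required: ["city", "days"],
      },
    },
  },
];

// Run every tool call the model requested and build one tool message per call.
function runToolCalls(toolCalls) {
  return toolCalls.map((call) => {
    const handler = toolRegistry.get(call.function.name);
    if (!handler) {
      throw new Error(`Unknown function: ${call.function.name}`);
    }
    const args = JSON.parse(call.function.arguments);
    return {
      role: "tool",
      tool_call_id: call.id,
      content: JSON.stringify(handler(args)),
    };
  });
}
```

Keeping handlers in a registry keyed by function name means a new capability only needs a definition and a handler, with no changes to the dispatch logic.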
