Artificial Intelligence in Architecture

This article explores how artificial intelligence is being used in architecture through examples like Rayon, Delve, Finch3D, and NVIDIA’s Omniverse.


Artificial intelligence (AI) promises to revolutionize our present world. Not with anything so dramatic as sentient robots taking over society. Instead, it’s all about computer algorithms doing complex, interpretive tasks efficiently and, dare we say it, intelligently.

One area where the promise seems exceptionally bright is architecture. In many ways, AI is the perfect tool for this field. Of course, there’s always an element of artistry when designing buildings and outdoor spaces, but there are also a ton of calculations to consider.

If you can break it down into numbers, computers can help. The geometry and efficient use of space, building material quantities, wind patterns, structural loads, and even foot traffic are all areas ripe for AI.

Of course, computers already do some of this in rudimentary ways as part of design programs. The promise of AI, however, is that the computer will work out solutions to design problems on its own, often with limited human intervention.

As of this writing, AI in architecture is still largely at the research and experimentation stage. That is no surprise, given the complexity and expense of many architectural projects.

Still, some exciting developments are afoot, including many commercial products from startups and major tech companies.

Generative Design

Machine learning (ML) is a big focus for AI in architecture. However, ML is not synonymous with AI; it is a subset of the broader discipline.

With ML, the idea is to create and train an algorithm that completes a task and improves incrementally after each attempt. This is similar to how a human learns a rote task, except that ML can do it much faster.
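To make "improves incrementally after each attempt" concrete, here is a minimal sketch assuming the simplest possible model: a single weight fitted by gradient descent to toy data that follow y = 3x. The function name `train` and the data are hypothetical; real models used in architecture are far larger, but the loop of predicting, measuring the error, and nudging the parameters is the same basic idea.

```python
def train(xs: list[float], ys: list[float], lr: float = 0.01, epochs: int = 50):
    """Fit y ~ w * x by gradient descent on mean squared error.

    Returns the learned weight and the loss recorded after each epoch.
    """
    n = len(xs)
    w = 0.0  # start from a deliberately poor guess
    losses = []
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # one small step toward lower error
        loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
        losses.append(loss)
    return w, losses


if __name__ == "__main__":
    # Toy data that follow y = 3x exactly.
    w, losses = train([1.0, 2.0, 3.0, 4.0], [3.0, 6.0, 9.0, 12.0])
    print(f"learned w = {w:.4f}; loss fell from {losses[0]:.3f} to {losses[-1]:.6f}")
```

Each epoch is one "attempt": the error shrinks a little every time, which is the incremental improvement described above.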

One framework for ML is the generative adversarial network (GAN), a form of unsupervised learning that uses two artificial neural networks.

The idea is that one network, called the generator, creates whatever you’re asking for, such as images of human faces. A second neural network, called the discriminator, then tries to decide whether each image it sees is a real photo of a human face or something the generator produced.

The two neural networks are engaged in a kind of competition: the generator tries to fool the discriminator, and the discriminator plays defense to avoid being fooled.

The end game, in our example, would be for the generator to create better and better images of human faces until they are indistinguishable from the real thing, ultimately fooling the discriminator.
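The adversarial loop can be sketched in plain Python. This is a deliberately tiny toy, not Chaillou's model or any production GAN: the "images" are single numbers drawn from a Gaussian (mean 4.0 is an arbitrary choice), the generator is the linear map G(z) = a*z + b, and the discriminator is a logistic function D(x) = sigmoid(w*x + c), both trained with hand-derived gradients instead of neural networks. The class `ToyGAN` and all its parameters are hypothetical.

```python
import math
import random

# Toy setting (arbitrary choice): "real" data are numbers drawn from N(4.0, 0.5^2).
REAL_MEAN, REAL_STD = 4.0, 0.5


def sigmoid(t: float) -> float:
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.a, self.b = 1.0, 0.0  # generator G(z) = a*z + b, with z ~ N(0, 1)
        self.w, self.c = 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + c)

    def real(self, n: int) -> list[float]:
        return [self.rng.gauss(REAL_MEAN, REAL_STD) for _ in range(n)]

    def generate(self, n: int) -> list[float]:
        return [self.a * self.rng.gauss(0.0, 1.0) + self.b for _ in range(n)]

    def score(self, x: float) -> float:
        # Discriminator's estimated probability that x is real.
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, lr: float = 0.05, n: int = 64) -> None:
        # Ascend mean[log D(real)] + mean[log(1 - D(fake))]: get better at spotting fakes.
        gw = gc = 0.0
        for x in self.real(n):
            g = 1.0 - self.score(x)  # derivative of log D(x) w.r.t. the logit
            gw, gc = gw + g * x, gc + g
        for x in self.generate(n):
            g = -self.score(x)  # derivative of log(1 - D(x)) w.r.t. the logit
            gw, gc = gw + g * x, gc + g
        self.w += lr * gw / n
        self.c += lr * gc / n

    def generator_step(self, lr: float = 0.05, n: int = 64) -> None:
        # Ascend mean[log D(G(z))]: produce fakes the discriminator scores as real.
        ga = gb = 0.0
        for _ in range(n):
            z = self.rng.gauss(0.0, 1.0)
            g = (1.0 - self.score(self.a * z + self.b)) * self.w  # d log D(x) / dx
            ga, gb = ga + g * z, gb + g  # chain rule: dx/da = z, dx/db = 1
        self.a += lr * ga / n
        self.b += lr * gb / n


if __name__ == "__main__":
    gan = ToyGAN(seed=0)
    for _ in range(2000):
        gan.discriminator_step()  # defense: learn to separate real from fake
        gan.generator_step()      # offense: shift fakes toward what D calls real
    fake = gan.generate(1000)
    print(f"mean of generated samples: {sum(fake) / len(fake):.2f} (real mean {REAL_MEAN})")
```

Because this discriminator is linear in x, it cannot reliably tell the two distributions apart by their spread, so at best it pushes the generator toward the real mean. Even a game this simple tends to oscillate rather than settle neatly, which hints at why real GANs need careful tuning.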

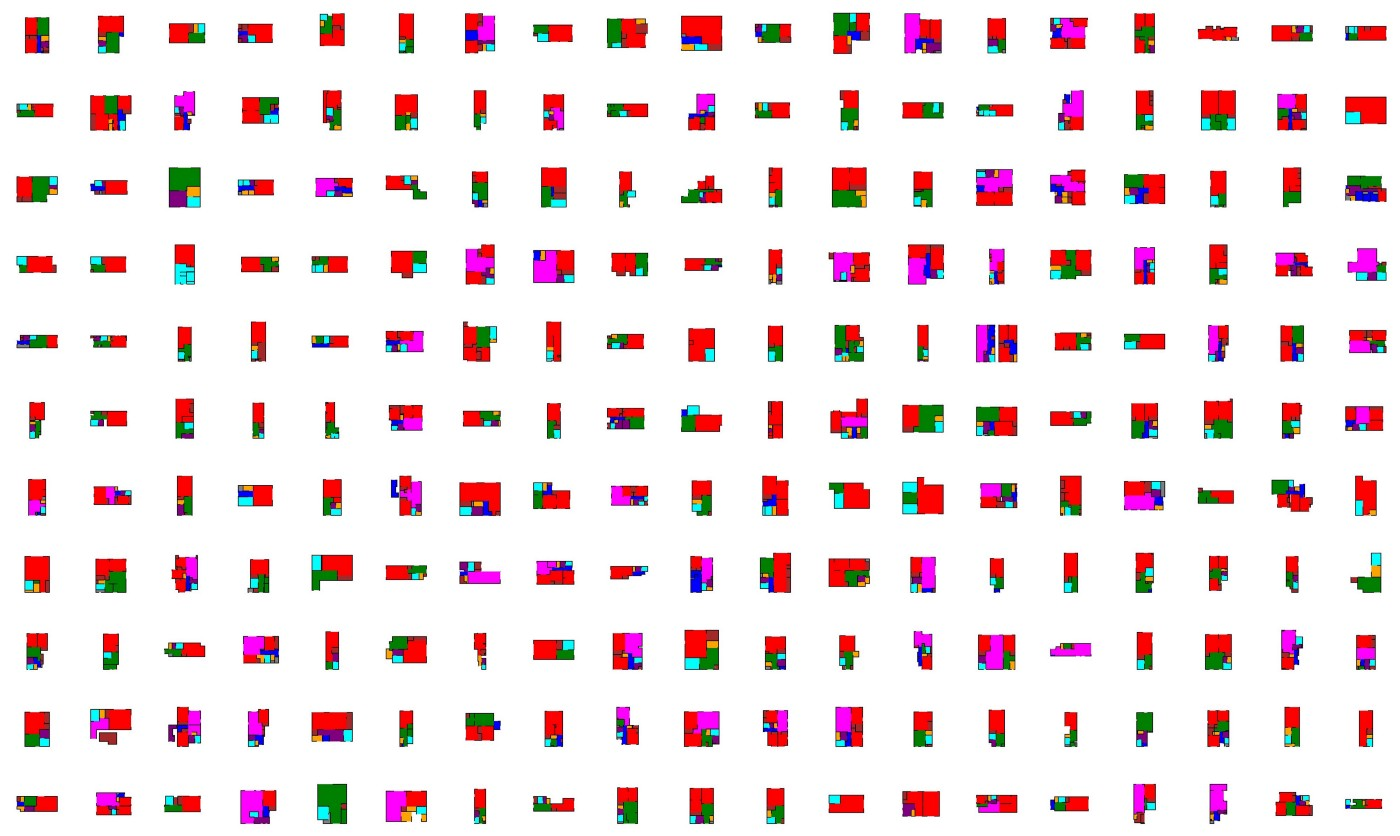

An apartment building dataset | Photo Credit: Stanislas Chaillou

One of many people experimenting with GANs to create architectural designs is Stanislas Chaillou.

For his master's thesis at Harvard, Chaillou used GANs to create and refine floor plan designs. During this work, he found that the designs were shaped not only by the primary constraints of space and function but also by the style used to guide these GAN-generated floor plans.

"It seems that style permeates the very essence of any generative process irrevocably...there will be no agnostic-AI for Architecture," Chaillou said in an article on Towards Data Science. "On the contrary, each model or algorithm will come with its flavor, personality, and know-how."

It's an appealing notion: you could have, for example, one model that favors the design touches of contemporary architects such as Frank Gehry and Zaha Hadid, another influenced by the Bauhaus, and another that fuses multiple styles.

Chaillou has since co-founded Rayon, a company that builds collaborative software for floor plan design.

GANs are just one flavor of generative design, where the idea is to gradually refine a computer-generated design over time. This can be done with an unsupervised learning model such as a GAN or with a more collaborative method that involves more human input.

Another example of generative design is Delve, a real estate development tool from Google’s Sidewalk Labs. Delve can generate hundreds of designs in minutes.

Each design takes into account requirements for retail, residential, parking, and public space. Each one also comes with a detailed cost model that estimates what the design should cost.
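This is not Delve's actual algorithm; it is a hypothetical sketch of the generate, filter, and cost pattern described above. It samples many random splits of a site's floor area among retail, residential, parking, and public uses, discards those that break a set of requirements, and ranks the rest with a simple linear cost model. Every number, rule, and name here (`COST_PER_M2`, `meets_requirements`, `generate`) is invented for illustration.

```python
import random
from dataclasses import dataclass

# Hypothetical cost per square metre for each use (invented numbers).
COST_PER_M2 = {"retail": 2200.0, "residential": 2500.0, "parking": 900.0, "public": 600.0}
USES = tuple(COST_PER_M2)


@dataclass
class Design:
    areas: dict[str, float]  # floor area per use, in square metres

    @property
    def cost(self) -> float:
        # Simple linear cost model: area times unit cost, summed over uses.
        return sum(COST_PER_M2[use] * area for use, area in self.areas.items())


def random_design(rng: random.Random, total_m2: float) -> Design:
    # Split the total floor area among the uses using random weights.
    weights = [rng.random() for _ in USES]
    total_weight = sum(weights)
    return Design({use: total_m2 * w / total_weight for use, w in zip(USES, weights)})


def meets_requirements(d: Design, total_m2: float) -> bool:
    # Hypothetical program rules, standing in for real zoning and brief constraints.
    a = d.areas
    return (
        a["residential"] >= 0.4 * total_m2
        and a["public"] >= 0.1 * total_m2
        and a["parking"] >= 0.5 * a["retail"]
    )


def generate(n: int, total_m2: float = 10_000.0, seed: int = 0) -> list[Design]:
    # Generate n candidates, keep the valid ones, and sort them cheapest first.
    rng = random.Random(seed)
    candidates = (random_design(rng, total_m2) for _ in range(n))
    valid = [d for d in candidates if meets_requirements(d, total_m2)]
    return sorted(valid, key=lambda d: d.cost)


if __name__ == "__main__":
    designs = generate(500)
    print(f"{len(designs)} of 500 candidates meet the requirements")
    cheapest = designs[0]
    print({use: round(area) for use, area in cheapest.areas.items()}, round(cheapest.cost))
```

Random sampling is the crudest possible generator; a real tool would search the design space far more cleverly, but the shape of the pipeline (generate many options, check constraints, attach a cost) is the point here.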

Web Apps

The wonderful thing about the current state of technology is that you don't necessarily need onsite computing power to do advanced work. On top of that, web apps are becoming more and more refined on the front end, blurring the lines between local and cloud software.

Several web apps are looking to revolutionize architecture. We've already seen one example with Delve, but there are others, such as Finch 3D.

Finch is designed for the early stages of a project. Its AI features can generate multiple options based on a project's design requirements.

Another exciting company working on an AI-based product is Higharc, which aims to automate house design by creating 3D models and plans through an iterative process.

"While the buyer or builder sees a simple 3D model, there are sophisticated algorithms behind the scenes continuously determining crucial details that typically take hours of manual effort," Higharc founder and CEO Marc Minor told The Financial Times in 2020.

Autodesk acquired AI architecture startup Spacemaker in late 2020. Spacemaker's cloud-based software helps teams analyze and design real estate sites with generative design tools, much like Delve.

In an Autodesk blog post, the company said, "Spacemaker can analyze up to 100 criteria across city blocks: zoning, views, daylight, noise, wind, roads, traffic, heat islands, parking, and more."
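A common way to fold many criteria into one ranking is a weighted sum of normalized scores. The sketch below is an assumption for illustration, not how Spacemaker actually works: it uses three hypothetical criteria (`daylight_hours`, `noise_db`, `wind_comfort`), invented weights, and min-max normalization across the candidate options, flipping criteria where a lower raw value is better.

```python
# Hypothetical criteria: (weight, True if a higher raw value is better).
CRITERIA = {
    "daylight_hours": (0.4, True),
    "noise_db": (0.3, False),
    "wind_comfort": (0.3, True),
}


def rank(options: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank options by a weighted sum of min-max normalized criterion scores."""
    scores = {name: 0.0 for name in options}
    for crit, (weight, higher_is_better) in CRITERIA.items():
        values = [opt[crit] for opt in options.values()]
        lo, hi = min(values), max(values)
        for name, opt in options.items():
            # Map each raw value onto [0, 1]; if all options tie, score 0.5.
            norm = 0.5 if hi == lo else (opt[crit] - lo) / (hi - lo)
            if not higher_is_better:
                norm = 1.0 - norm  # e.g. quieter sites should score higher
            scores[name] += weight * norm
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Three made-up site options.
    options = {
        "A": {"daylight_hours": 6.0, "noise_db": 55.0, "wind_comfort": 0.7},
        "B": {"daylight_hours": 8.0, "noise_db": 65.0, "wind_comfort": 0.5},
        "C": {"daylight_hours": 4.0, "noise_db": 50.0, "wind_comfort": 0.9},
    }
    for name, score in rank(options):
        print(name, round(score, 3))  # C first, then A, then B
```

Option B has the most daylight but is the noisiest and windiest, so it ranks last once all three criteria are weighed. Scaling this to 100 criteria is mostly a matter of adding entries to `CRITERIA`, although choosing the weights is itself a design decision.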

Step Into the Omniverse

Most AI projects need a lot of computing power, whether local or in the cloud, and an excellent source for that is the humble graphics card.

NVIDIA describes Omniverse, its collaboration and simulation platform, as a "scalable, multi-GPU real-time reference development platform for 3D simulation and design collaboration."

Omniverse can accommodate a small or large number of users and single or multiple GPUs. It is built on NVIDIA's RTX GPUs and Pixar's Universal Scene Description (USD) framework.

Omniverse is not exclusively an architectural tool. Instead, it’s used to generate photorealistic 3D designs and simulations for fields such as architecture, engineering, animation, and industrial design.

Architects can integrate Omniverse with tools such as Autodesk 3ds Max, Rhino, and Trimble SketchUp to turn a design into a 3D simulation.

Right now, much AI work with Omniverse involves feeding the output of a generative design workflow into Omniverse. The future, however, promises some exciting developments.

For example, NVIDIA's AI Research Lab in Toronto created a tool called GANverse3D that turns 2D images into 3D models. It’s easy to see how AI tools like that could transform architecture or any industry where 3D modeling is an essential part of the process.

Computer Vision

Many art projects are also exploring the implications of AI in architecture, such as the Daedalus Pavilion from London-based Ai Build.

Credit: Ai Build

This small-scale structure was built with the help of NVIDIA GPUs, using computer vision and deep learning to increase the speed and accuracy with which an industrial robot 3D-printed its elements. Humans then assembled the structure.

The Future of AI and Architecture

Artificial intelligence holds a lot of promise for the future of many industries, and architecture is no exception. While it's still early days, there's a lot of potential for AI to enhance architectural design processes.

However, despite all the AI-powered tools, humans are still required to refine and approve any designs used. While architectural tools are getting more advanced, they won't be replacing human architects anytime soon.

Published at DZone with permission of Kevin Vu. See the original article here.
