Architecting API Management Solutions With WSO2 API Manager, Part 2

To wrap up this series, we explore designing the deployment architecture and selecting the proper infrastructure for your API.


Welcome back! If you missed Part 1, you can check it out here!

Designing the Deployment Architecture

WSO2 has defined five deployment patterns for WSO2 API Manager based on the following four aspects of the deployment:

- Throughput required by each API manager component.

- Dynamic scalability of each component.

- Internal/external API gateway separation.

- Hybrid Cloud deployments.

Once the solution architecture and capacity planning tasks are completed, the deployment architecture of an API management solution can be designed by considering the above requirements of the project.

Deployment Pattern 1

Active/Active All-In-One API Manager with High Availability

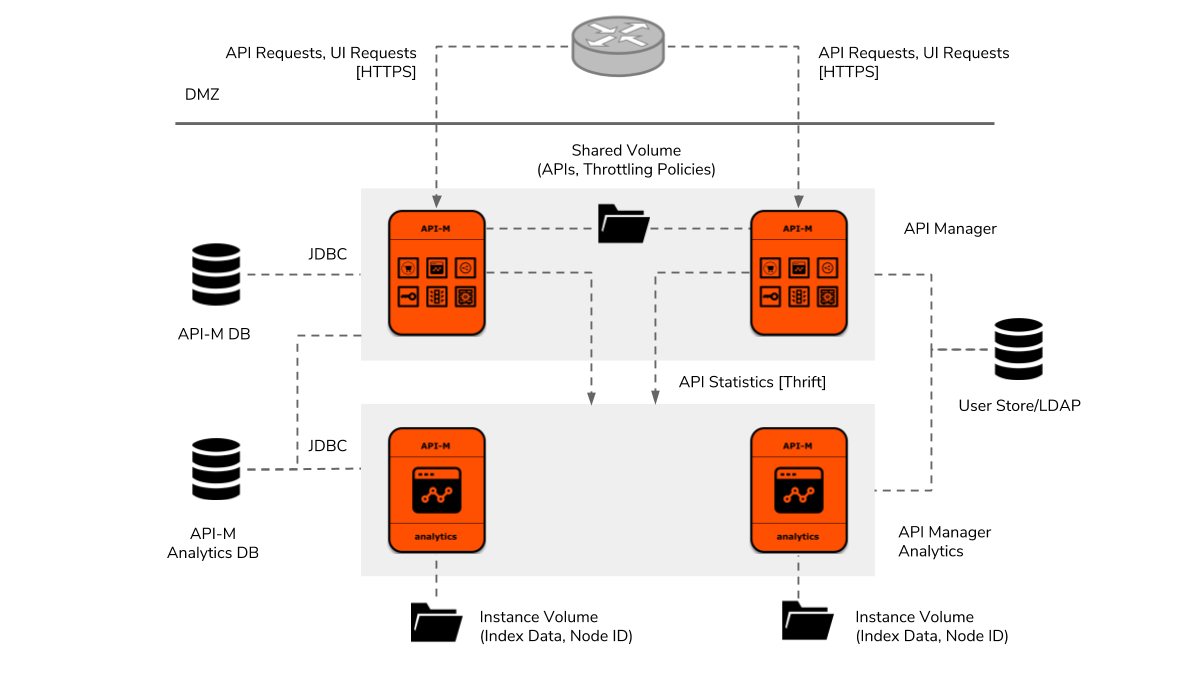

Figure 4: WSO2 API Manager Deployment Pattern 1

Deployment pattern 1 provides the simplest approach for deploying WSO2 API Manager in production with high availability and the fewest instances. As shown above, it includes two all-in-one API Manager instances and two API Manager Analytics instances. Two dedicated databases are needed for persisting API Manager and analytics data; sharing the same database between the Analytics and API Manager instances may degrade the performance of the API Gateway. The organization's LDAP-based user store can be integrated to enable single sign-on (SSO) for the UI components and to authenticate and authorize API requests. A shared volume is needed for synchronizing API files and throttling policies among the API Manager instances. This volume needs multi-write support and the ability to be mounted on multiple instances; for example, an Amazon EFS file system can be used on AWS, and an NFS server in an on-premise data center. In addition, the Analytics instances need their own instance volumes for persisting their individual index data and node identifiers on the filesystem.

It is important to note that in this design, the API Manager and Analytics instances cannot scale dynamically on demand because of how their internal components work. The Traffic Manager and Analytics components use client-side failover transports (Apache Thrift and AMQP), so new instances created at runtime are not discoverable by the existing instances. Therefore, if dynamic scalability is required, such solutions need to move to deployment pattern 2. The average throughput supported by this pattern can be estimated from the performance benchmark test results [1]. In general, this pattern can support around 1,500 TPS for APIs carrying 10K messages and around 700 TPS for 100K messages.
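To see why instances created at runtime go unnoticed, consider a minimal Python sketch of client-side failover in general. The class `StaticFailoverClient` and the host names `tm-1` to `tm-3` are hypothetical; this is not the Thrift or AMQP client inside WSO2 API Manager, only an illustration of a client that reads its endpoint list once at startup.

```python
class StaticFailoverClient:
    """Hypothetical client that fails over across a fixed list of endpoints.

    Illustrates the general client-side failover idea only; it is not the
    Apache Thrift or AMQP client used inside WSO2 API Manager.
    """

    def __init__(self, endpoints):
        # The endpoint list is copied once, when the client starts, and is
        # never refreshed afterwards.
        self._endpoints = list(endpoints)

    def pick_endpoint(self, is_reachable):
        """Return the first configured endpoint that is reachable."""
        for endpoint in self._endpoints:
            if is_reachable(endpoint):
                return endpoint
        raise ConnectionError("none of the configured endpoints is reachable")


# Two Traffic Manager nodes (hypothetical host names) are configured at startup.
client = StaticFailoverClient(["tm-1", "tm-2"])

# tm-1 fails and a new node, tm-3, is started at runtime.
running = {"tm-2", "tm-3"}
print(client.pick_endpoint(lambda host: host in running))  # tm-2

# If tm-2 also fails, tm-3 is never tried because the client does not know it exists.
running = {"tm-3"}
try:
    client.pick_endpoint(lambda host: host in running)
except ConnectionError as err:
    print(err)  # none of the configured endpoints is reachable
```

Because the endpoint list is fixed when the client starts, adding an instance means updating the configuration of every client that should use it, which is why these components are scaled manually.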

Deployment Pattern 2

Dynamically Scalable API Gateway and Key Manager

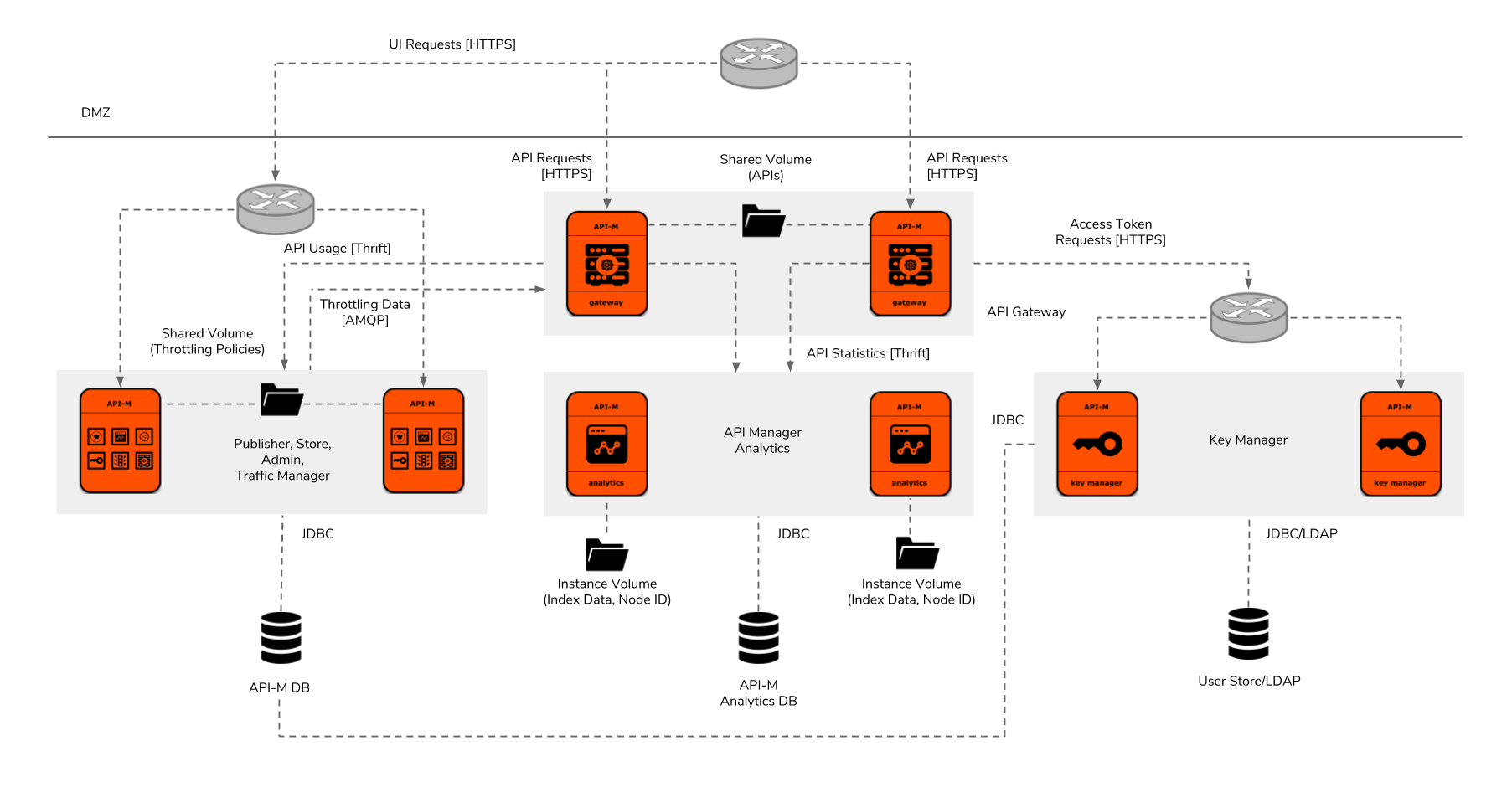

Figure 5: WSO2 API Manager Deployment Pattern 2

The second API Manager deployment pattern has been designed for API management solutions that require higher throughput at the API Gateway. In contrast to pattern 1, the API Gateway and Key Manager components are taken out of the all-in-one instance and deployed separately so that they can scale dynamically. The remaining components (Publisher, Store, Admin, and Traffic Manager) run in a single JVM on two fixed instances. When the Gateway instances are scaled, the Key Manager instances also need to be scaled to serve the additional access token requests. Theoretically, the two Analytics and Traffic Manager instances can handle the load of ten API Gateway instances. Therefore, the API Gateway and Key Manager instances can be scaled dynamically until their instance count reaches ten. If the API Gateway instance count goes beyond ten, either the Analytics and Traffic Manager instances would need to be scaled manually or deployment pattern 3 might need to be used.
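The sizing rule above can be expressed as a small planning helper. This is a hypothetical sketch, not a WSO2 tool: the function `pattern2_plan` and its dictionary keys are illustrative, and the 1:1 ratio between Gateway and Key Manager instances is an assumption, since the text only says the two scale together.

```python
# Ratio stated for deployment pattern 2: the two fixed Analytics and Traffic
# Manager instances can theoretically absorb the load of ten API Gateway instances.
MAX_GATEWAYS_FOR_FIXED_NODES = 10
MIN_INSTANCES = 2  # two of each component for high availability


def pattern2_plan(gateway_count):
    """Return a rough instance plan for deployment pattern 2 (illustrative only)."""
    if gateway_count < MIN_INSTANCES:
        raise ValueError("keep at least two Gateway instances for high availability")
    return {
        "gateway": gateway_count,
        # Assumption: Key Manager instances track the Gateway count 1:1.
        "key_manager": gateway_count,
        # Publisher, Store, Admin and Traffic Manager share one JVM on two fixed instances.
        "publisher_store_admin_tm": MIN_INSTANCES,
        "analytics": MIN_INSTANCES,
        # Beyond ten Gateways: scale Analytics/Traffic Manager by hand or consider pattern 3.
        "needs_manual_review": gateway_count > MAX_GATEWAYS_FOR_FIXED_NODES,
    }


print(pattern2_plan(6)["needs_manual_review"])   # False
print(pattern2_plan(12)["needs_manual_review"])  # True
```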

Technically, the throughput supported by deployment pattern 2 is almost the same as that of pattern 1 when the number of API Gateway instances is at its minimum. However, those instances can be scaled dynamically based on the incoming request load using infrastructure features such as an Auto Scaling group on AWS or the Horizontal Pod Autoscaler on Kubernetes. At the minimum instance count, each component still provides high availability. The API Gateway, the Key Manager, and the single-JVM instances that run the Publisher, Store, Admin, and Traffic Manager components need a layer 7 load balancer for routing HTTP traffic. Apache Thrift and AMQP connections are made point-to-point and cannot be load balanced.
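For the Kubernetes case, the core of the Horizontal Pod Autoscaler's replica calculation fits in a few lines. The sketch below is a simplified model: it applies the ratio ceil(currentReplicas × currentMetric / targetMetric) and clamps the result, but omits the real controller's tolerance band and stabilization windows. The bounds of 2 and 10 are assumptions chosen to match the high-availability minimum and the ten-Gateway ceiling discussed above, and the CPU figures are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=2, max_replicas=10):
    """Simplified Horizontal Pod Autoscaler replica calculation.

    Applies ceil(current_replicas * current_metric / target_metric) and clamps
    the result to [min_replicas, max_replicas]. The real controller also uses a
    tolerance band and stabilization windows, which are left out here.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Two Gateway pods averaging 90% CPU against a 60% target scale out to three.
print(desired_replicas(2, 90, 60))   # 3
# A large spike is capped at ten, the Gateway ceiling discussed for pattern 2.
print(desired_replicas(8, 120, 60))  # 10
# Low load never drops below the two instances needed for high availability.
print(desired_replicas(2, 20, 60))   # 2
```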

Deployment Pattern 3

Fully Distributed API Manager Deployment

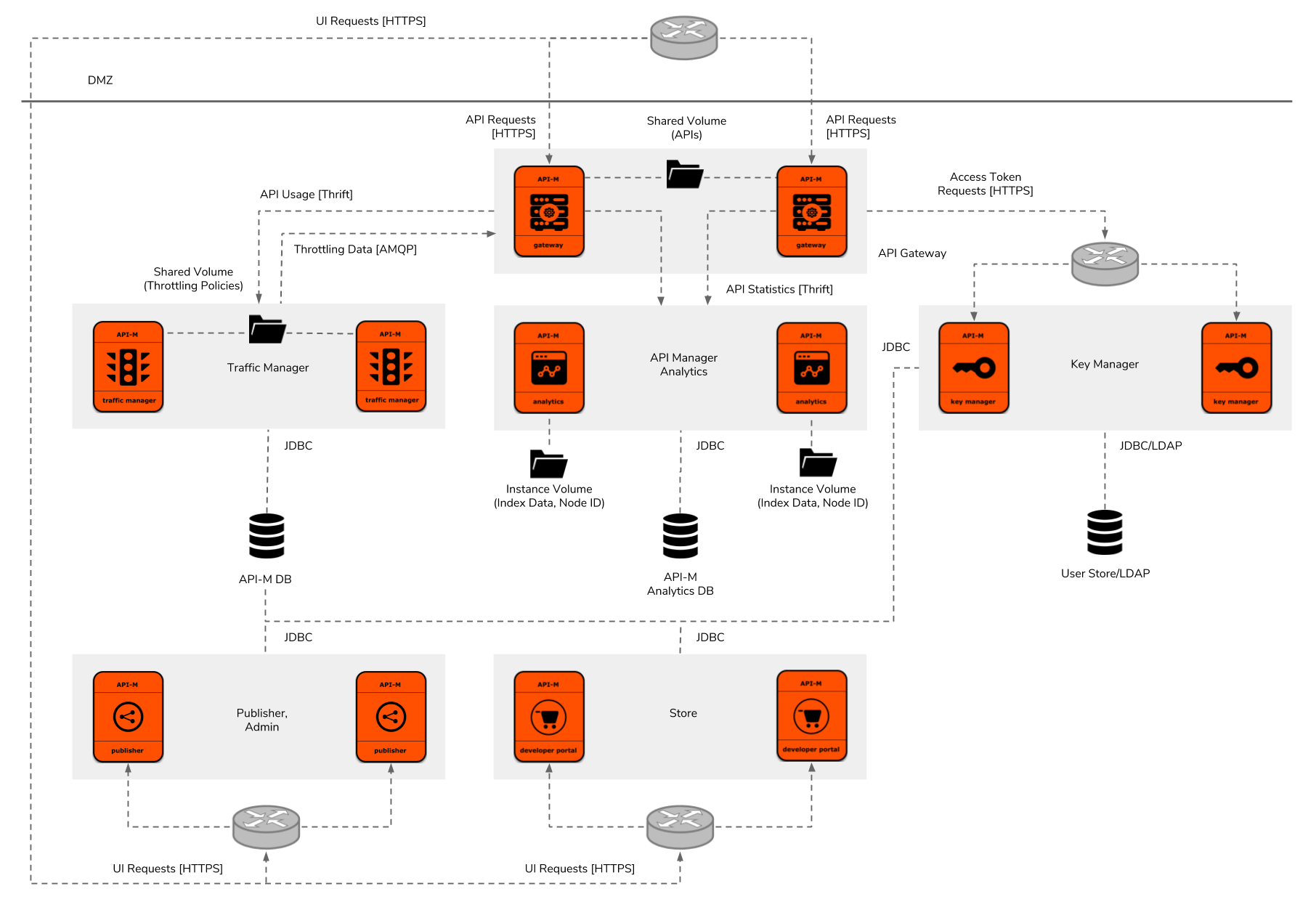

Figure 6: WSO2 API Manager Deployment Pattern 3

Deployment pattern 3 has been designed for solutions that require higher throughput at both the API Gateway and the UI components. It deploys each API Manager component as an individual cluster so that each one can scale independently. Of these six clusters, all except Analytics and Traffic Manager can be scaled dynamically based on the incoming request load. Analytics and Traffic Manager instances need to be scaled manually, and the components that depend on them need to be updated accordingly. In this architecture, a layer 7 load balancer is needed for the API Gateway, Traffic Manager, Publisher/Admin, and Store.

Similar to deployment pattern 2, this approach provides almost the same throughput at the API Gateway at the minimum instance count. The decision to move to this pattern may need to be based on the throughput required at the API Gateway or on the need for dynamic scalability of the UI components.

Deployment Pattern 4

Internal/External API Gateway Separation

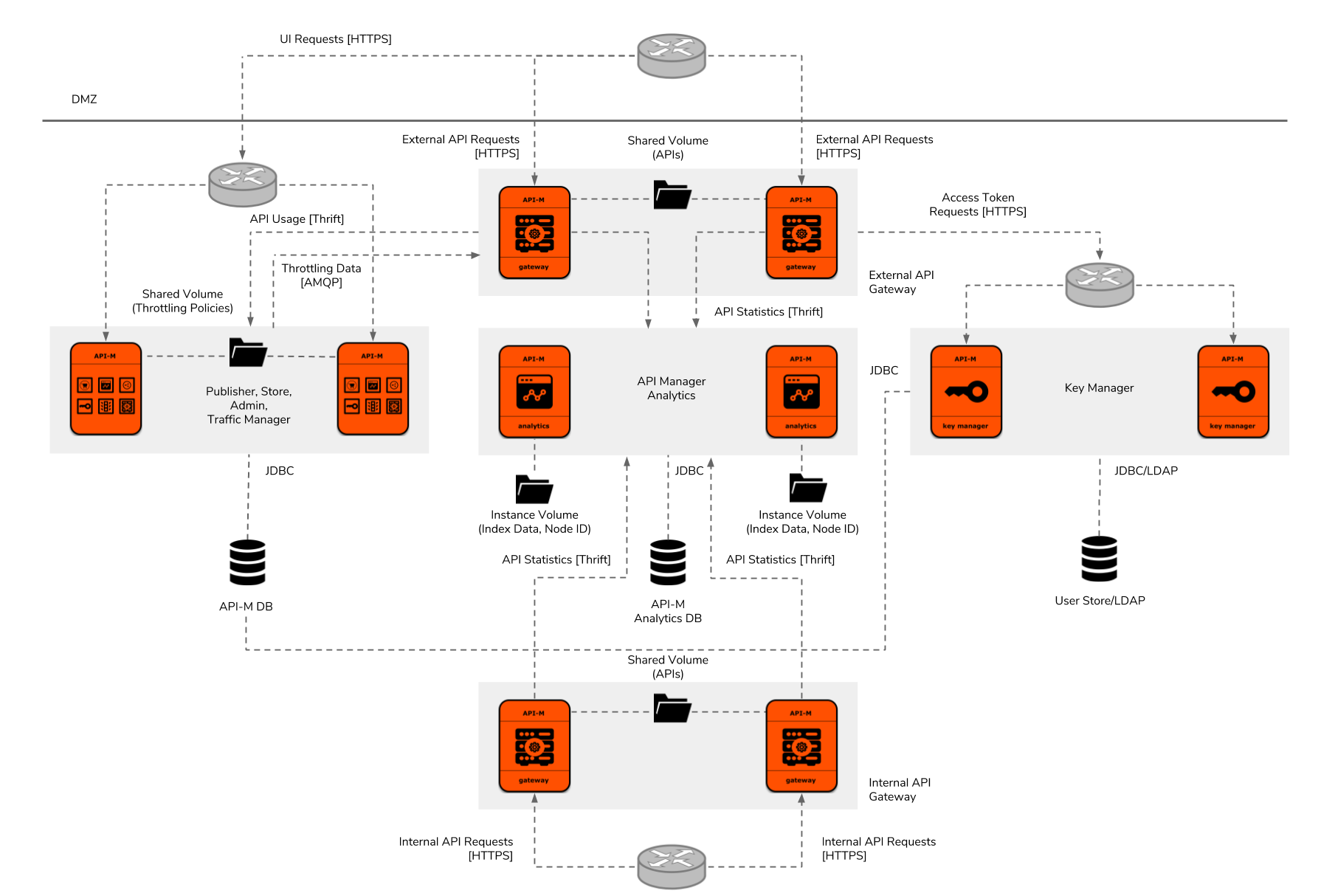

Figure 7: WSO2 API Manager Deployment Pattern 4

Deployment pattern 4 has been designed for solutions that require internal and external API Gateway separation. It builds on deployment pattern 2 by adding two additional Gateway instances, so it has the same characteristics as pattern 2 except for the dedicated Gateway instances for managing external API traffic. Multiple API Gateway clusters can be added to a WSO2 API Manager deployment using its gateway environment management feature, and each API can be published to specific gateway environments based on its accessibility level. Routing to each API Gateway cluster can be controlled at the network layer by allowing external API traffic to reach only the external API Gateway instances.
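The two rules in this pattern, publishing by accessibility level and filtering at the network layer, can be modeled as follows. This is a hypothetical sketch: the environment names `internal` and `external`, the `Api` type, and the sample APIs are illustrative and do not correspond to WSO2 configuration keys.

```python
from dataclasses import dataclass

# Hypothetical environment names for the two Gateway clusters.
ENVIRONMENTS = ("internal", "external")


@dataclass(frozen=True)
class Api:
    name: str
    context: str
    accessibility: str  # "internal" or "external"


def publish(apis):
    """Group API contexts by the gateway environment they are published to."""
    published = {env: [] for env in ENVIRONMENTS}
    for api in apis:
        if api.accessibility not in published:
            raise ValueError(f"unknown accessibility level: {api.accessibility}")
        published[api.accessibility].append(api.context)
    return published


def traffic_allowed(from_internet, environment):
    """Network-layer rule: internet traffic may only reach the external Gateways."""
    return environment == "external" or not from_internet


apis = [Api("Orders", "/orders", "external"), Api("Payroll", "/payroll", "internal")]
print(publish(apis))  # {'internal': ['/payroll'], 'external': ['/orders']}
print(traffic_allowed(True, "internal"))  # False
```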

Technically, the performance of this deployment pattern is also similar to that of deployment pattern 2 at the minimum instance count. However, because internal and external API traffic is separated, each API Gateway cluster has more headroom to handle additional traffic, and both clusters can be scaled dynamically.

Deployment Pattern 5

Hybrid Cloud API Manager Deployment

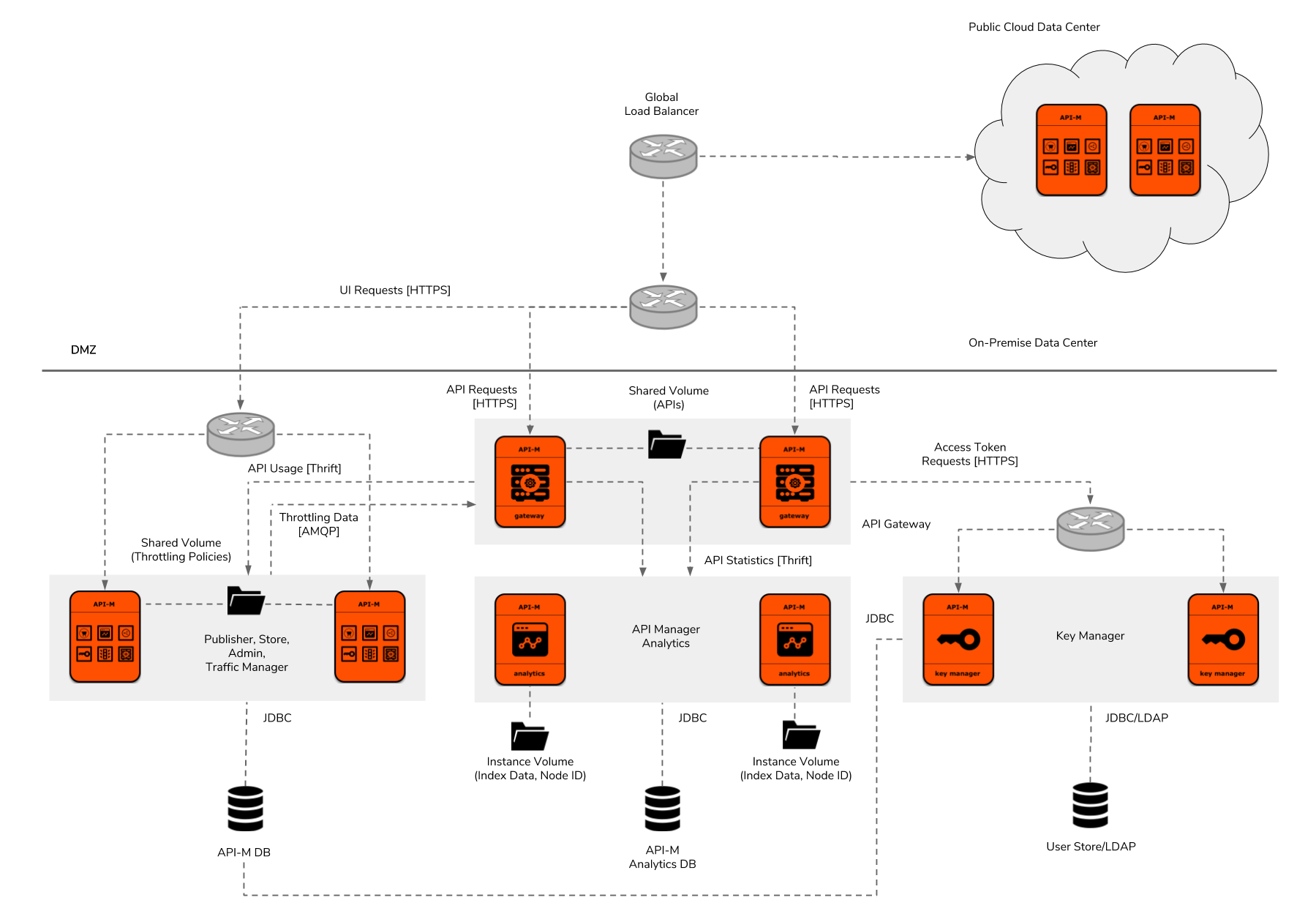

Figure 8: WSO2 API Manager Deployment Pattern 5

Deployment pattern 5 has been designed for solutions that require API management capabilities in both private and public cloud environments. As a reference, the on-premise deployment in this pattern is based on deployment pattern 2; however, it can use any of the above deployment patterns according to the requirements of the solution. The public cloud deployment can either use the shared WSO2 API Cloud or a dedicated WSO2 API Manager deployment on your organization's own public cloud infrastructure. This type of deployment can serve several purposes:

1. Utilizing Public Cloud as a Disaster Recovery (DR) Option

If the requirement is option 1, the two data centers can be used in Active/Passive mode by periodically replicating databases and filesystems from one data center to the other. A global DNS-based load balancer such as AWS Route 53 can route all traffic to the main data center and automatically switch it to the secondary data center if the main data center becomes unavailable.
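The failover decision a global DNS load balancer makes can be sketched as a counter of consecutive failed health checks. This is a simplified, hypothetical model: `FailoverResolver`, the data center names, and the threshold of three failed checks are assumptions for illustration, not AWS Route 53 settings or APIs.

```python
class FailoverResolver:
    """Active/passive failover decision, similar in spirit to DNS failover routing.

    The data center names and the threshold of three failed checks are
    assumptions for this sketch, not Route 53 settings.
    """

    def __init__(self, primary, secondary, failure_threshold=3):
        self.primary = primary
        self.secondary = secondary
        self.failure_threshold = failure_threshold
        self._consecutive_failures = 0

    def record_health_check(self, primary_ok):
        # A passing check resets the counter; a failing one increments it.
        self._consecutive_failures = 0 if primary_ok else self._consecutive_failures + 1

    def resolve(self):
        """Return the data center that DNS answers should point to."""
        if self._consecutive_failures >= self.failure_threshold:
            return self.secondary
        return self.primary


resolver = FailoverResolver("on-premise", "public-cloud")
for ok in (False, False):
    resolver.record_health_check(ok)
print(resolver.resolve())  # on-premise (two failures are below the threshold)
resolver.record_health_check(False)
print(resolver.resolve())  # public-cloud
```

The threshold keeps a single dropped health check from triggering a switch. This simplified version fails back as soon as one check passes; because data is only replicated periodically in Active/Passive mode, a real deployment would likely want a more deliberate failback.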

2. Managing the Separation of Internal and External APIs

If the requirement is to separate internal and external APIs, and the external APIs need to be exposed to the internet or to systems running on a public cloud, the two data centers can be used in Active/Active mode. In this approach, the global load balancer may need to be configured to route each request to the appropriate data center based on the context path of the API's URL.
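Context-path routing of this kind usually comes down to a longest-prefix match on the URL path. The sketch below assumes a hypothetical routing table (`/orders`, `/orders/admin`, `/payroll`) and a default of `on-premise`; a real global load balancer would express the same rules in its own configuration.

```python
# Hypothetical routing table: API context path -> data center.
ROUTES = {
    "/orders": "public-cloud",
    "/orders/admin": "on-premise",
    "/payroll": "on-premise",
}


def route(path, routes=ROUTES, default="on-premise"):
    """Pick a data center by longest matching context-path prefix."""
    matches = [
        prefix for prefix in routes
        # Match whole path segments so "/orders" does not match "/ordersx".
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return default
    return routes[max(matches, key=len)]


print(route("/orders/v1/items"))  # public-cloud
print(route("/orders/admin/v1"))  # on-premise
print(route("/unknown"))          # on-premise (default)
```

Matching the longest prefix lets a more specific context, such as an internal admin API nested under a public one, override the broader rule.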

3. Obtaining Additional Infrastructure Resources

This approach would be quite challenging to implement if the latency between the on-premise data center and the public cloud network is high and the connection between the data centers is not reliable. In this model, if API requests are routed to both data centers at the same time, the data might become inconsistent because replicating state between the data centers takes time. One way to solve this problem is to route each request to a single data center based on its origin, instead of routing the same traffic to both data centers at the same time.
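Origin-based routing can be sketched with Python's standard `ipaddress` module: requests from known corporate ranges stay on-premise, and everything else goes to the public cloud, so each request lands in exactly one data center. The address ranges below are hypothetical placeholders.

```python
import ipaddress

# Hypothetical corporate address ranges whose requests stay on-premise.
ON_PREMISE_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def data_center_for(client_ip):
    """Send each request to exactly one data center based on its origin."""
    address = ipaddress.ip_address(client_ip)
    if any(address in network for network in ON_PREMISE_NETWORKS):
        return "on-premise"
    return "public-cloud"


print(data_center_for("10.20.30.40"))  # on-premise
print(data_center_for("203.0.113.7"))  # public-cloud
```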

Infrastructure Selection

WSO2 API Manager can be deployed on any virtual-machine-based platform or on a container cluster management platform such as Kubernetes, OpenShift, or DC/OS, provided that the platform offers the following features:

- A production-grade RDBMS: MySQL v5.6/5.7, MS SQL v2012/2014/2016, Oracle v12.1.0, PostgreSQL v9.5.3/9.6.x.

- Shared persistent volumes with multi-write capability.

- Instance persistent volumes.

- Internal TCP and HTTP routing.

- Layer 7 load balancing for external routing.
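Before choosing a platform, the checklist above can be turned into a simple pre-flight check. This is a hypothetical sketch: the `Platform` fields and requirement labels are illustrative, and the version table only mirrors the RDBMS list above, treating "9.6" as PostgreSQL 9.6.x and accepting patch releases of the listed versions (an assumption).

```python
from dataclasses import dataclass

# Supported RDBMS versions as listed above; "9.6" stands for PostgreSQL 9.6.x.
SUPPORTED_RDBMS = {
    "mysql": ("5.6", "5.7"),
    "mssql": ("2012", "2014", "2016"),
    "oracle": ("12.1.0",),
    "postgresql": ("9.5.3", "9.6"),
}


@dataclass
class Platform:
    rdbms: str
    rdbms_version: str
    shared_multi_write_volumes: bool
    instance_volumes: bool
    internal_tcp_http_routing: bool
    layer7_external_load_balancer: bool


def rdbms_supported(engine, version):
    # Accept an exact match or a patch release of a listed version (e.g. 5.7.30).
    return any(
        version == listed or version.startswith(listed + ".")
        for listed in SUPPORTED_RDBMS.get(engine, ())
    )


def missing_features(platform):
    """Return the checklist requirements that the platform lacks."""
    checks = [
        (rdbms_supported(platform.rdbms, platform.rdbms_version),
         "supported production-grade RDBMS"),
        (platform.shared_multi_write_volumes, "shared multi-write persistent volumes"),
        (platform.instance_volumes, "instance persistent volumes"),
        (platform.internal_tcp_http_routing, "internal TCP and HTTP routing"),
        (platform.layer7_external_load_balancer, "layer 7 external load balancing"),
    ]
    return [name for ok, name in checks if not ok]


print(missing_features(Platform("mysql", "5.7.30", True, True, True, True)))  # []
print(missing_features(Platform("mysql", "8.0", False, True, True, True)))
# ['supported production-grade RDBMS', 'shared multi-write persistent volumes']
```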

Instructions for installing on a standard virtual-machine-based platform using deployment pattern 1 can be found in the WSO2 documentation. As far as I know, instructions for the other deployment patterns are not yet available. Resources for installing on Pivotal Cloud Foundry and AWS are currently being implemented in the WSO2 API-M PCF and WSO2 API-M CloudFormation GitHub repositories. Configuration management modules for Puppet can be found in the WSO2 API-M Puppet GitHub repository.

Resources and instructions for installing on Kubernetes and OpenShift based on all five deployment patterns can be found in the WSO2 API-M Kubernetes GitHub repository. Resources for installing on DC/OS are currently being implemented in the WSO2 API-M DC/OS GitHub repository.

Conclusion

Architecting an API management solution using WSO2 API Manager may involve several steps: identifying the business requirements and designing the high-level solution architecture, the detailed solution architecture, and the deployment architecture based on the infrastructure selection. WSO2 has identified five deployment patterns for running WSO2 API Manager in enterprise environments based on performance, dynamic scalability, internal/external API separation, and hybrid cloud deployment requirements. It can be deployed on any virtualized platform or container cluster manager that provides production-grade RDBMSs, shared and instance persistent volumes, internal HTTP and TCP routing, and layer 7 load balancing for external routing.
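The selection criteria summarized above can be condensed into a rough decision helper. This is a hypothetical heuristic distilled from the pattern descriptions in this article, not an official WSO2 rule; the `Requirements` fields are illustrative, and real decisions should still be checked against capacity planning and the benchmark results [1].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    hybrid_cloud: bool = False
    internal_external_separation: bool = False
    dynamic_gateway_scaling: bool = False
    dynamic_ui_scaling: bool = False
    peak_gateway_instances: int = 2


def suggest_pattern(req):
    """Suggest a starting deployment pattern (1-5) from high-level requirements.

    Rough heuristic based on the pattern descriptions; it does not replace
    capacity planning against the published benchmark results.
    """
    if req.hybrid_cloud:
        return 5  # the on-premise side can still follow any of patterns 1-4
    if req.internal_external_separation:
        return 4
    if req.dynamic_ui_scaling or req.peak_gateway_instances > 10:
        return 3  # past ten Gateways, pattern 3 is one of the two options given
    if req.dynamic_gateway_scaling or req.peak_gateway_instances > 2:
        return 2  # pattern 1 runs exactly two all-in-one instances
    return 1


print(suggest_pattern(Requirements()))                              # 1
print(suggest_pattern(Requirements(dynamic_gateway_scaling=True)))  # 2
print(suggest_pattern(Requirements(peak_gateway_instances=12)))     # 3
```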

References for the Series

[1] WSO2 API Manager Performance and Capacity Planning, WSO2: https://docs.wso2.com/display/AM210/WSO2+API-M+Performance+and+Capacity+Planning

[2] Securing Microservices (Part 1), Prabath Siriwardena: https://medium.facilelogin.com/securing-microservices-with-oauth-2-0-jwt-and-xacml-d03770a9a838

[3] WSO2 API Manager Deployment Patterns, WSO2: https://docs.wso2.com/display/AM2xx/Deployment+Patterns

[4] WSO2 API Manager Customer Stories, WSO2: https://wso2.com/api-management/customer-stories/

[5] Compatibility of WSO2 Products, WSO2: https://docs.wso2.com/display/compatibility/Compatibility+of+WSO2+Products

[6] Benefits of a Multi-regional API Management Solution for a Global Enterprise, Lakmal Warusawithana: https://wso2.com/library/article/2017/10/benefits-of-a-multi-regional-api-management-solution-for-a-global-enterprise/

[7] Router Icon, OpenClipArt: https://openclipart.org/detail/171415/router-symbol

[8] Folder and Database Icons, Font WSO2: https://github.com/wso2/ux-font-wso2

Published at DZone with permission of Imesh Gunaratne. See the original article here.

