Application Scaling: Pointers on Choosing Scaling Strategies

Should all apps be scalable from the start? And does it save money in the long run? Let's dive into the technical details.


I bet every single entrepreneur has scalability on the list as they plan their future app. No matter the business goals in mind, everyone would be happy to get a stable app that survives Black Friday without a hint of a glitch.

Hey, I’m Alex Shumski, Head of Presales at Symfa. Since building software architectures is what I have been doing for a living for years, I’m here to suggest a thing or two to those who aren’t certain whether they need app scaling at all and which strategy to follow, if any.

Yeah, I totally realize that requirements differ from one business case to another. Some need a high-load app with an eye to explosive growth in enterprise and user demand. Others don’t plan to extend their app any time soon but opt for robust scalability at the engineering stage, as they believe that improving it later would hit their wallets badly.

From a broader perspective, should every kind of app rise to the scalability challenge? If we take it on early, while just starting mobile or web app development, does this save funds? Now, let’s get technical right away.

Application Scalability: What Is It Even About?

For starters, terminology. Essentially, scalability is a system’s capacity to grow, mostly in terms of handling increasing user requests and service demand. It mainly concerns the backend, hosting servers, and databases. To increase your app’s capacity, you can choose between the following two approaches:

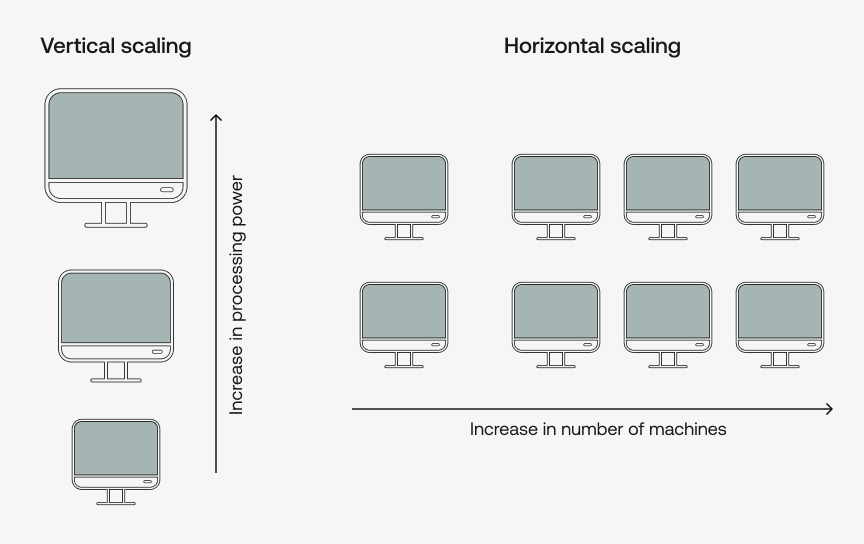

Scale-Up or Vertical Scaling

This is literally about increasing your system's resources. Say that before scaling, the app ran on an 8-core machine with 4GB of memory; after scaling, it moves to a stronger machine with eight cores and 8GB of memory. So, in the case of vertical scaling, we’re talking exclusively about enhancing the capacity of the server your app runs on.

Scale-Out or Horizontal Scaling

This one isn’t just about scaling the resources in place but about getting more machines. Say your service runs on a single machine. In time, as the load grows, you duplicate that machine, run the same service on each copy, and balance the load between them.

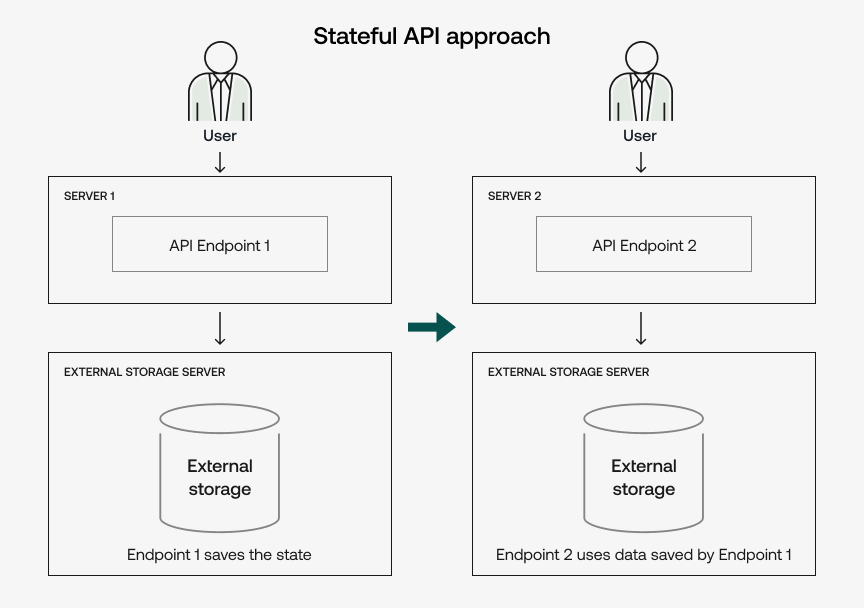

The good news is that most systems can be adapted to support scale-out. It’s just that load balancing may turn out to be a challenge, as it brings in status monitoring and puts demands on how endpoints and the backend are organized. But a well-organized backend can also help deal with the problem.
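To make the balancing part a bit more tangible, here is a minimal sketch of round-robin balancing with a basic health status. It is an illustration only, not how any particular balancer is implemented; the class name `RoundRobinBalancer` and the server names are made up for the example.

```python
class RoundRobinBalancer:
    """Hands out servers in turn, skipping those marked as down."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._index = 0

    def mark_down(self, server):
        # A real balancer would learn this from periodic health checks.
        self._healthy.discard(server)

    def mark_up(self, server):
        if server in self._servers:
            self._healthy.add(server)

    def pick(self):
        # Try each server at most once per call, starting from the cursor.
        for _ in range(len(self._servers)):
            server = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy servers available")


# Hypothetical server names, used only for illustration.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_round = [balancer.pick() for _ in range(3)]
balancer.mark_down("app-2")
second_round = [balancer.pick() for _ in range(3)]
```

In the second round, requests go only to the two servers that are still up, which is the status monitoring part in its simplest form.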

As applied to the backend, a nice way out is a stateless API: handling one request leaves nothing on the server that the following requests rely on. What I’m saying is that if a REST-enabled backend is stateless, each call is completely independent. That is, it contains all the data required to handle it on its own, without relying on a session or hidden data while processing requests.

This way, you keep any kind of session information (known as state) off the server, so that no request depends on what an earlier one left behind. In short, the stateless approach makes balancing easier. No matter how many dependencies your app holds, balancing won’t be a big deal for you.
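A stateless call can be sketched as a plain function whose result depends only on the request it receives. The request shape below (`user_id`, `items`, `price_cents`, `quantity`) is an assumption made up for the example, not a real API.

```python
def handle_order_total(request):
    """Computes an order total using only the data carried by the request."""
    total_cents = sum(
        item["price_cents"] * item["quantity"] for item in request["items"]
    )
    return {"user_id": request["user_id"], "total_cents": total_cents}


# Hypothetical request payload; every field needed travels with the call.
request = {
    "user_id": "u-42",
    "items": [
        {"price_cents": 1999, "quantity": 2},
        {"price_cents": 500, "quantity": 1},
    ],
}

# Any server running this handler returns the same answer,
# because nothing is read from or written to server-side session state.
response = handle_order_total(request)
```

Since the handler keeps nothing between calls, the balancer is free to send each request to whichever server is available.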

Why exactly is this important? The thing is, several requests made by one and the same user may go to different servers. This means that if the state has to be saved, the system needs to keep it in temporary storage that every server can reach.

Take a purchase request with user data inside. Before leading a user to a dedicated payment page that handles this particular request, the system has to save their details someplace. To hold the interim data, we can turn to databases and storage such as a Redis cache or Azure Blob Storage. If your app is stateful rather than stateless, you can configure the load balancer to use the user session ID so that it sends all requests from one user to the same server (often called sticky sessions).
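Both options can be sketched in a few lines. In the sketch below, `SharedStore` is an in-memory stand-in for an external store such as Redis (it does not use any real Redis client), and `sticky_server` shows one possible way to pin a session to a server by hashing the session ID. All names are hypothetical.

```python
import hashlib


class SharedStore:
    """In-memory stand-in for an external store that all servers can reach."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class AppServer:
    """A server that keeps interim data in the shared store, not in itself."""

    def __init__(self, name, store):
        self.name = name
        self._store = store

    def start_checkout(self, session_id, details):
        self._store.put(session_id, details)

    def open_payment_page(self, session_id):
        return self._store.get(session_id)


def sticky_server(session_id, servers):
    """Maps a session ID to the same server every time (session affinity)."""
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


store = SharedStore()
server_a = AppServer("app-1", store)
server_b = AppServer("app-2", store)

# The first request lands on one server, the follow-up on another.
server_a.start_checkout("session-1", {"user": "u-42", "amount_cents": 4498})
details = server_b.open_payment_page("session-1")
```

With the shared store, it doesn’t matter which server gets the follow-up request. With sticky routing, the state may stay on the server, but a simple modulo mapping like this one reshuffles sessions whenever the list of servers changes.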

Speaking of scaling methods and tools, we should first dig a bit into the two things they depend on most: the application type and the deployment model.

Application Type

When choosing a scaling approach, consider what sits well with your app type and its components: which one handles the highest load? In this regard, we differentiate:

- CPU-intensive apps that put a heavy load on the processor;

- Data-rich apps with a massive information turnover that calls for database scaling;

- Network-intensive apps that depend on network capacity.
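One rough way to tell which type you are dealing with is to compare utilization measurements per component. The sketch below assumes you already collect utilization samples as fractions between 0 and 1; the resource names and the numbers are invented for illustration.

```python
def dominant_bottleneck(samples):
    """Returns the resource with the highest average utilization."""
    averages = {
        resource: sum(values) / len(values)
        for resource, values in samples.items()
        if values
    }
    if not averages:
        raise ValueError("no utilization samples provided")
    return max(averages, key=averages.get)


# Hypothetical measurements, not real data.
samples = {
    "cpu": [0.90, 0.80, 0.85],
    "database": [0.30, 0.40, 0.35],
    "network": [0.50, 0.60, 0.55],
}
bottleneck = dominant_bottleneck(samples)
```

Here the app would count as CPU-intensive. Average utilization is a crude signal, so treat the result as a starting point for a closer look rather than a verdict.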

Deployment Model

Depending on what kind of instances your app runs on (virtual machines or Amazon EC2, for example), you can opt for tools such as the NGINX load balancer or Apache ZooKeeper, residing on a dedicated machine, to monitor the machine load.

Sure, the tasks behind any app differ, but there are reliable services to handle static content scaling, such as the Amazon CloudFront CDN. Then, you can use the balancer to further configure scaling routines within each Amazon infrastructure service.

Imagine you’re seeking vertical scalability. With EC2, you can specify that instances launch with a larger instance size (for example, t2.large or t2.2xlarge instead of t2.micro). Otherwise, you can make extra instances available whenever the load exceeds a specified limit. Say it turns out that your system is CPU-intensive and CPU utilization climbs above 80%: you deploy additional instances, and none of the functionality gets wrecked.
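The threshold rule from the last example can be sketched as a small function. It is a simplified illustration, not the way AWS implements its scaling policies; the function name, the step of one instance, and the cap of ten instances are assumptions, while the 80% limit comes from the example above.

```python
def desired_instances(current, cpu_percent, threshold_percent=80,
                      step=1, max_instances=10):
    """Returns how many instances to run after checking the CPU limit."""
    if current < 1:
        raise ValueError("at least one instance must be running")
    if cpu_percent > threshold_percent:
        # Load broke the limit: add instances, but never exceed the cap.
        return min(current + step, max_instances)
    return current


# CPU utilization at 85% breaks the 80% limit, so one instance is added.
after_spike = desired_instances(current=2, cpu_percent=85)
# At 50%, nothing changes.
after_calm = desired_instances(current=2, cpu_percent=50)
```

A real setup would also scale back in when the load drops and wait between adjustments so the instance count doesn’t flap.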

Ultimate Considerations While Selecting App Scaling Strategies

Again, in Symfa’s practice, many clients want a perfectly scalable app from the outset as they fear future overspending. I’d say that, in most cases, this fear is only partly justified. As I see it, it makes much more sense to first find out whether the demand for your app is THAT high and then detect the components that are going to struggle with the most intensive load.

So, it can all boil down to the following questions:

Do the Scalability Issues Show?

Rather than risking scaling investments without even a sign of future problems, you’d better make decisions about the scope of scaling measures based on live data.

Imagine you're building an app and expecting it to be network-intensive. Yet it goes live and, whoops, it’s CPU-intensive instead. Still, this sort of scalability challenge is not that big a deal. You go to the AWS infrastructure, change instance types, and you’re done with it. And it’s way cheaper than changing the entire app architecture.

Does Scaling Pay Its Money’s Worth?

Your app’s complexity always counts as you plan to scale. Say you’re a startup. You’re engineering an app with a rather small codebase, which takes one to two years. Here, scaling doesn’t tend to burn a hole in your funds: you can switch to the microservices architecture at a reasonable cost, thus enabling each of your services to scale in proportion to its own load.

Another case. You have about half a year of development behind you when there comes a call for scaling. And you know what? It even simplifies the challenge. Since your app still hosts little logic and few dependencies and has a small database, splitting it into microservices is pretty doable.

Otherwise, you can move the struggling components to effective provider solutions. If it’s the database that’s in trouble, you may opt for a Database-as-a-Service tool and delegate scaling to, say, the AWS infrastructure. Note: here, I’d again suggest a close review of your app’s business goals, as hosting services have their specifics. This is exactly the case when you might think you’ve found a cheap way towards app scalability, but reality proves otherwise.

A simple example. You have a network- and CPU-intensive app for video processing, online streaming, and news production. My advice here: to avoid excessive hosting expenses, you’d better build a dedicated data center to handle the load while leaving just the content distribution routines in the Cloud.

Sophisticated legacy applications hold a special place on the scaling list. The thing is, monolith systems can be a mixture of massive dependencies, logic, and databases, which makes them hard to scale. Still, it’s not impossible.

If the app runs in an internal data center, we can apply the “lift and shift” method and migrate the whole app infrastructure to the Cloud. There, vertical scaling wins us some time and resources to improve the parts that used to hold the application architecture back.

Some Closing Pointers

Again, always bear in mind that you can’t walk in someone else’s shoes when it comes to scaling. There's the legacy monolith, and there’s the plain simplicity of a newborn app with minimum dependencies, so no silver bullet applies. It's all about your app’s business objectives and, most specifically, its components and communications. So, first, take your time to make sure your app is really going to be high-load and that scalability issues are likely to emerge on your horizon.

Published at DZone with permission of Alexander Shumski. See the original article here.

