API Gateway vs. Load Balancer

This article will simplify these architectural concepts and help the reader choose the one that works best based on individual use cases.


Recently, on a project at work, we faced an architectural choice: use an API Gateway as the interface to a backend service, put the service behind a load balancer, or have the API Gateway route requests to a load balancer fronting the service. While debating these options with my peers, I realized that many software engineers face the same question when designing solutions in their own domains. This article aims to simplify these concepts and help you choose the option that works best for your use case.

Callout

Understand your requirements, or the problem you are solving, first: the right choice depends heavily on your use cases.

Application Programming Interface (API)

Let's first understand what an API is. An API is how two actors in a software system (software components or users) communicate with each other. This communication happens through a defined set of interfaces and protocols. For example, the weather bureau’s software system contains daily weather data. The weather app on your phone “talks” to this system via APIs and shows you daily weather updates.
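The weather example can be sketched in a few lines of Python. This is only an illustration: `WeatherBureauAPI`, `get_daily_weather`, `render_weather_widget`, and the sample data are hypothetical names invented here, and the call happens in-process rather than over a network.

```python
from dataclasses import dataclass


@dataclass
class WeatherReport:
    city: str
    temperature_c: float
    condition: str


class WeatherBureauAPI:
    """Hypothetical interface exposed by the weather bureau's system."""

    def __init__(self) -> None:
        # Made-up sample data standing in for the bureau's internal storage.
        self._data = {"london": WeatherReport("London", 14.0, "cloudy")}

    def get_daily_weather(self, city: str) -> WeatherReport:
        """The defined entry point; callers never see how data is stored."""
        key = city.strip().lower()
        if key not in self._data:
            raise KeyError(f"no weather data for {city!r}")
        return self._data[key]


def render_weather_widget(api: WeatherBureauAPI, city: str) -> str:
    """The phone app: it depends only on the API, not on the bureau's internals."""
    report = api.get_daily_weather(city)
    return f"{report.city}: {report.temperature_c:.0f} C, {report.condition}"
```

The app and the bureau agree only on the method name, its argument, and the shape of the result, which is the "defined set of interfaces" part of the definition.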

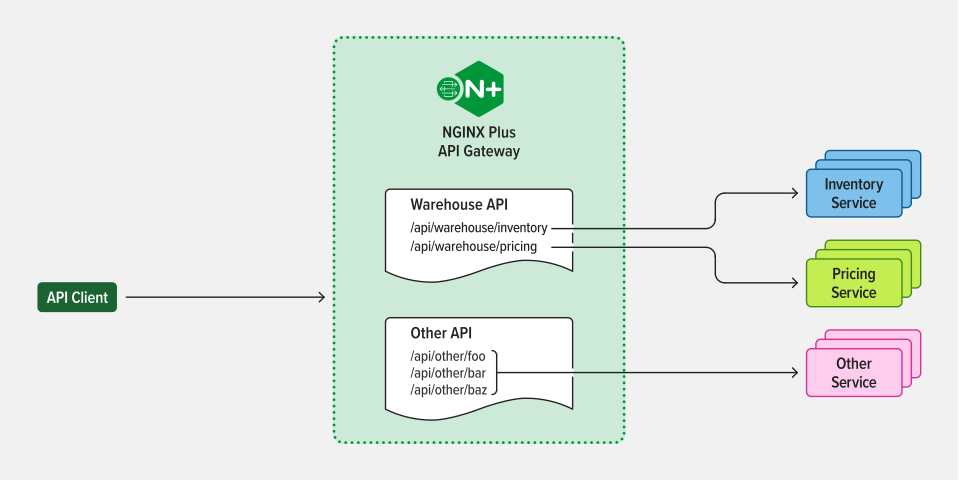

API Gateway

An API Gateway is a component of the app-delivery infrastructure that sits between clients and services and provides centralized handling of API communication between them. In simple terms, an API Gateway is the gateway to the APIs. It is the channel through which users communicate with the APIs, and it abstracts away details such as which services host the APIs, access control (authentication and authorization), and security (for example, mitigating DDoS attacks). Think of it as the switchboard operator of a manual telephone exchange, whom callers ask to be connected to a specific number (analogous to a software component here).
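The switchboard idea can be sketched as a small in-process router. This is a minimal sketch under stated assumptions, not how any particular gateway product works: the `ApiGateway` class, the two backend functions, the API-key check, and the status codes returned are all hypothetical choices made for illustration.

```python
from typing import Callable, Dict, Optional, Set, Tuple

Handler = Callable[[str], str]


# Hypothetical backend services hidden behind the gateway.
def orders_service(path: str) -> str:
    return f"orders service handled {path}"


def users_service(path: str) -> str:
    return f"users service handled {path}"


class ApiGateway:
    def __init__(self, routes: Dict[str, Handler], api_keys: Set[str]) -> None:
        self._routes = routes
        self._api_keys = api_keys

    def handle(self, path: str, api_key: Optional[str]) -> Tuple[int, str]:
        # Access control runs before any backend is touched.
        if api_key not in self._api_keys:
            return 401, "unauthorized"
        # Path-based routing: the longest matching prefix wins.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                return 200, self._routes[prefix](path)
        return 404, "no route"
```

A client only ever calls `handle`; which function (or, in a real deployment, which host) serves `/orders` stays an internal detail of the gateway's routing table.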

Let's discuss the pros and cons of API Gateway a little.

Pros

- Access control: Provides support for authenticating and authorizing clients before requests reach the backend systems.

- Security: Provides protection against, or mitigations for, DDoS (Distributed Denial of Service) attacks from the outset.

- Abstraction: Hides the internal hosting details of the backend APIs and provides clean routing to backend services using techniques such as path-based routing and query-string-parameter-based routing.

- Monitoring and analytics: An API Gateway can provide API-level monitoring and analytics to help scale infrastructure gracefully.
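One building block often associated with the security point is request rate limiting. The sketch below shows a token bucket, assuming a per-client limit is acceptable as a stand-in; it is not a claim about how any provider implements DDoS mitigation, and `TokenBucket`, `capacity`, `rate`, and the injectable `clock` are names chosen here for illustration.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock  # injectable so the behavior can be tested without sleeping
        self._tokens = capacity
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

A gateway could keep one such bucket per API key or client address and reject a request when `allow()` returns `False`, so the backend never sees the excess traffic.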

Cons

- Additional layer between users and services: An API Gateway adds another layer between users and services, which adds complexity to the orchestration of requests.

- Performance impact: Because requests must pass through one more layer before reaching the backend services, the extra hop can add latency.

Load Balancing and Load Balancer

Load balancing is the technique of distributing load across multiple backend servers based on their capacity and the actual request pattern. Today's applications can receive requests at a high rate (hundreds or thousands of requests per second), each asking backend services to perform some action (e.g., data processing or data fetching). Handling that rate requires the service to be hosted on multiple servers at once. We therefore need a layer in front of these backend servers, the load balancer, that routes incoming requests to them based on what each can handle "efficiently" while keeping customer experience and service performance intact. The load balancer also ensures that no single server is overworked, which could otherwise lead to failed requests or higher latencies.

At a very high level, the load balancer does the following:

- Routes incoming requests to backend servers to "efficiently" distribute the load on the servers.

- Maintains the performance of the service by ensuring that no single server is overworked.

- Lets the service scale up or down efficiently and independently, and routes requests only to the active hosts (the load balancer determines which hosts are active using a technique called heartbeat).
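The three responsibilities above can be sketched with a round-robin balancer that skips hosts whose heartbeat has gone stale. This is a simplified illustration, not a production design: `RoundRobinBalancer`, `record_heartbeat`, the 5-second timeout, and passing `now` explicitly are all assumptions made here to keep the example deterministic.

```python
from typing import Dict, List


class RoundRobinBalancer:
    def __init__(self, servers: List[str], heartbeat_timeout: float = 5.0) -> None:
        self._servers = list(servers)
        self._timeout = heartbeat_timeout
        self._last_heartbeat: Dict[str, float] = {}
        self._next = 0

    def record_heartbeat(self, server: str, now: float) -> None:
        """Called whenever a server reports that it is alive."""
        self._last_heartbeat[server] = now

    def active_servers(self, now: float) -> List[str]:
        """Servers whose most recent heartbeat is within the timeout."""
        return [
            s for s in self._servers
            if s in self._last_heartbeat
            and now - self._last_heartbeat[s] <= self._timeout
        ]

    def pick(self, now: float) -> str:
        """Rotate through the currently active servers."""
        active = self.active_servers(now)
        if not active:
            raise RuntimeError("no active servers")
        server = active[self._next % len(active)]
        self._next += 1
        return server
```

Because `pick` only considers servers with a recent heartbeat, hosts can be added or removed without clients noticing. The rotation position may shift when the active set changes, which is acceptable for a sketch.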

Pros

- Performance: Load balancers help maintain the service performance by ensuring that the request load is distributed across the servers.

- Availability: Load balancers help maintain service availability, because multiple servers can host the same service and requests can still be served if one of them fails.

- Supports scalability: Helps the service scale up or down cleanly (horizontally) by letting servers be added or removed whenever needed.

Cons

- Potential single point of failure: Since all requests have to flow through the load balancer, it can become a single point of failure for the whole service if it is not configured with enough redundancy.

- Additional overhead: Load balancers use algorithms such as round robin, least connections, and adaptive routing to decide which backend server should receive each request. Every request pays the cost of this decision, which can add some latency.
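To make the overhead concrete, here is a minimal sketch of the least-connections algorithm named above. The class and method names (`LeastConnectionsBalancer`, `acquire`, `release`) are hypothetical, and the bookkeeping is kept in memory for illustration only.

```python
from typing import Dict, Iterable


class LeastConnectionsBalancer:
    def __init__(self, servers: Iterable[str]) -> None:
        # Number of in-flight requests per server.
        self._active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        """Pick the server with the fewest in-flight requests.

        Ties go to the server that was registered first, because min()
        returns the first minimal key in dict insertion order.
        """
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request finishes so the count stays accurate."""
        if self._active[server] > 0:
            self._active[server] -= 1
```

Each `acquire` scans every server and updates a counter, and each finished request needs a matching `release`. That per-request work is the kind of overhead this bullet refers to; round robin is cheaper but ignores how busy each server currently is.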

When To Use What

Let's come to the crux of the article now. When do we use a load balancer for a service and when do we use an API Gateway for it?

When To Use API Gateway

Based on the pros listed above, an API Gateway is best suited to the following cases:

- When we need central access control (authentication and authorization) in front of the backend services/APIs.

- When we need central security mechanisms for issues like DDoS.

- When we are exposing APIs to external customers (i.e., the Internet) and don't want to reveal internal details of the services or infrastructure.

- When we need out-of-the-box monitoring and analytics on the APIs and need insights on how to scale the backend services.

When To Use Load Balancer

- When the service can receive more requests per second than one server can handle, so it must be hosted on more than one server.

- When the service has defined availability targets and SLAs that it must meet.

- When the service needs to be able to scale up or down as required.

Opinions expressed by DZone contributors are their own.
