Amazon S3 Parallel MultiPart File Upload

In this blog post, I will present a simple tutorial on uploading a large file to Amazon S3 as fast as the network supports.

Amazon S3 is Amazon's clustered storage service. It is designed to make web-scale computing easier.

Amazon S3 provides a simple web services interface that can be used to store and retrieve any amount of data, at any time, from anywhere on the web. It gives any developer access to the same highly scalable, reliable, secure, fast, inexpensive infrastructure that Amazon uses to run its own global network of web sites. The service aims to maximize benefits of scale and to pass those benefits on to developers.

For using Amazon services, you'll need your AWS access key identifiers, which AWS assigned you when you created your AWS account. The following are the AWS access key identifiers:

- Access Key ID (a 20-character, alphanumeric sequence). For example: 022QF06E7MXBSH9DHM02

- Secret Access Key (a 40-character sequence). For example: kWcrlUX5JEDGM/LtmEENI/aVmYvHNif5zB+d9+ct

Caution: Your Secret Access Key is a secret, which only you and AWS should know. It is important to keep it confidential to protect your account. Store it securely in a safe place. Never include it in your requests to AWS, and never e-mail it to anyone. Do not share it outside your organization, even if an inquiry appears to come from AWS or Amazon.com. No one who legitimately represents Amazon will ever ask you for your Secret Access Key.

The Access Key ID is associated with your AWS account. You include it in AWS service requests to identify yourself as the sender of the request.

The Access Key ID is not a secret, and anyone could use your Access Key ID in requests to AWS. To provide proof that you truly are the sender of the request, you also include a digital signature calculated using your Secret Access Key. The sample code handles this for you.
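To make the idea of a signature more concrete, here is a minimal sketch of a keyed hash (HMAC-SHA256) computed with the standard Java crypto classes. It only illustrates the principle that the secret key signs the request without being sent. The real AWS signing process has more steps and is handled by the SDK, and the class name, key and request string below are made up for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

// Hypothetical helper, not part of the AWS SDK.
class SignatureSketch {

    // Returns the Base64-encoded HMAC-SHA256 of the message, keyed with the secret.
    static String sign(String secretKey, String message) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(secretKey.getBytes(StandardCharsets.UTF_8), "HmacSHA256"));
            byte[] digest = mac.doFinal(message.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(digest);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 is not available", e);
        }
    }

    public static void main(String[] args) {
        // Placeholder values; a real request is canonicalized by the SDK before signing.
        String secret = "example-secret-key";
        String request = "PUT /example-bucket/example-file";
        System.out.println(sign(secret, request));
    }
}
```

Only the signature travels with the request. Anyone who holds the same secret can recompute it and compare, which is why the secret itself must never be shared.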

Your Access Key ID and Secret Access Key are displayed to you when you create your AWS account. They are not e-mailed to you. If you need to see them again, you can view them at any time from your AWS account.

To get your AWS access key identifiers

- Go to the Amazon Web Services web site at http://aws.amazon.com.

- Point to Your Account and click Security Credentials.

- Log in to your AWS account. The Security Credentials page is displayed.

- Your Access Key ID is displayed in the Access Identifiers section of the page.

- To display your Secret Access Key, click Show in the Secret Access Key column.

You can use your Amazon keys from a properties file in your application.

Here is a sample properties file containing the Amazon keys:

    # Fill in your AWS Access Key ID and Secret Access Key
    # http://aws.amazon.com/security-credentials
    accessKey = <your_amazon_access_key>
    secretKey = <your_amazon_secret_key>
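If you want to check how such a file is parsed before handing it to the SDK, the sketch below reads the same two keys with the standard `java.util.Properties` class. It takes the file content as a string so that it runs without any file on disk. The class name and the sample values are only assumptions for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

// Hypothetical helper that parses the content of a credentials properties file.
class CredentialsFileSketch {

    static Properties parse(String content) {
        Properties props = new Properties();
        try {
            // Lines starting with '#' are comments; "key = value" pairs are stored without
            // the whitespace around the '=' sign.
            props.load(new StringReader(content));
        } catch (IOException e) {
            throw new IllegalStateException("Unable to parse credentials", e);
        }
        return props;
    }

    public static void main(String[] args) {
        String content = "# sample\naccessKey = EXAMPLEACCESSKEY\nsecretKey = EXAMPLESECRETKEY\n";
        Properties props = parse(content);
        // Print only the non-secret identifier.
        System.out.println("accessKey=" + props.getProperty("accessKey"));
    }
}
```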

Here is a sample AmazonUtil class that reads the AWS credentials from the properties file:

    import java.io.File;
    import java.io.IOException;

    import com.amazonaws.auth.AWSCredentials;
    import com.amazonaws.auth.PropertiesCredentials;

    // LogUtil, ConfigUtil and IOUtil are project helper classes that are not shown here.
    public class AmazonUtil {

        private static final Logger logger = LogUtil.getLogger();

        private static final String AWS_CREDENTIALS_CONFIG_FILE_PATH =
                ConfigUtil.CONFIG_DIRECTORY_PATH + File.separator + "aws-credentials.properties";

        private static AWSCredentials awsCredentials;

        static {
            init();
        }

        private AmazonUtil() {
        }

        // Loads the access key and secret key from the properties file once, at class load time.
        private static void init() {
            try {
                awsCredentials =
                        new PropertiesCredentials(IOUtil.getResourceAsStream(AWS_CREDENTIALS_CONFIG_FILE_PATH));
            }
            catch (IOException e) {
                logger.error("Unable to initialize AWS Credentials from " + AWS_CREDENTIALS_CONFIG_FILE_PATH, e);
            }
        }

        public static AWSCredentials getAwsCredentials() {
            return awsCredentials;
        }

    }

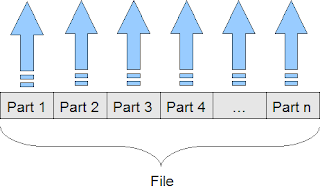

Amazon S3 has a Multipart Upload service that allows faster, more flexible uploads into Amazon S3. Multipart Upload allows you to upload a single object as a set of parts. After all parts of your object are uploaded, Amazon S3 then presents the data as a single object. With this feature you can create parallel uploads, pause and resume an object upload, and begin uploads before you know the total object size. For more information on Multipart Upload, review the Amazon S3 Developer Guide.
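When splitting an object into parts, the part size has to respect the service limits. The sketch below assumes the limits as I understand them from the S3 documentation (at most 10,000 parts per upload, and at least 5 MB for every part except the last one), so please check the current documentation before relying on them. The class and method names are hypothetical.

```java
// Hypothetical helper for choosing a part size; the limits are assumptions, see the text above.
class PartSizeSketch {

    static final long MIN_PART_SIZE = 5L * 1024 * 1024; // 5 MB
    static final int MAX_PARTS = 10_000;

    // Smallest part size that is at least MIN_PART_SIZE and keeps the part count within MAX_PARTS.
    static long choosePartSize(long contentLength) {
        long neededForLimit = (contentLength + MAX_PARTS - 1) / MAX_PARTS; // ceiling division
        return Math.max(MIN_PART_SIZE, neededForLimit);
    }

    // Number of parts needed; the last part may be smaller than partSize.
    static long partCount(long contentLength, long partSize) {
        return (contentLength + partSize - 1) / partSize;
    }

    public static void main(String[] args) {
        long contentLength = 100L * 1024 * 1024 * 1024; // a 100 GB file
        long partSize = choosePartSize(contentLength);
        System.out.println(partSize + " bytes per part, " + partCount(contentLength, partSize) + " parts");
    }
}
```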

In this tutorial, my sample application uploads each file part to Amazon S3 on a separate thread in order to use as much of the network throughput as possible. Each file part is assigned to a thread, and each thread uploads its part through the Amazon S3 API.

Figure 1. Amazon S3 Parallel Multi-Part File Upload Mechanism

The Amazon S3 API supports multipart file upload in this way:

1. Send an InitiateMultipartUploadRequest to Amazon.

2. Get a response containing a unique ID for this upload operation.

3. For each part i of ${partCount} parts:

3.1. Calculate the size and offset of part i within the whole file.

3.2. Build an UploadPartRequest with the file offset, the size of the current part, and the unique upload ID.

3.3. Hand this request to a thread and start the upload by starting the thread.

3.3.1. Send the associated UploadPartRequest to Amazon.

3.3.2. Get the response after a successful upload and save its ETag property.

4. Wait for all threads to terminate.

5. Collect the ETags of all terminated threads (an ETag identifies a successfully uploaded part).

6. Send a CompleteMultipartUploadRequest to Amazon with the unique upload ID and all ETags, so that Amazon joins all the file parts into the target object.
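The offset and size calculation in step 3.1 can be tried out without touching S3 at all. The following is a minimal sketch of that calculation only. The `Part` class and the `plan` method are names I made up for illustration and are not part of the AWS SDK.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that plans the parts of a multipart upload.
class PartPlanSketch {

    // One planned part: 1-based part number, byte offset in the file, and size in bytes.
    static final class Part {
        final int number;
        final long offset;
        final long size;

        Part(int number, long offset, long size) {
            this.number = number;
            this.offset = offset;
            this.size = size;
        }

        @Override
        public String toString() {
            return "part " + number + ": offset=" + offset + ", size=" + size;
        }
    }

    static List<Part> plan(long contentLength, long partSize) {
        if (partSize <= 0) {
            throw new IllegalArgumentException("partSize must be positive");
        }
        List<Part> parts = new ArrayList<>();
        long offset = 0;
        for (int number = 1; offset < contentLength; number++) {
            // The last part may be smaller than the requested part size.
            long size = Math.min(partSize, contentLength - offset);
            parts.add(new Part(number, offset, size));
            offset += size;
        }
        return parts;
    }

    public static void main(String[] args) {
        // A 12 MB file split into 5 MB parts.
        for (Part part : plan(12L * 1024 * 1024, 5L * 1024 * 1024)) {
            System.out.println(part);
        }
    }
}
```

Each planned part maps to one UploadPartRequest: the offset goes into the file offset, the size into the part size, and the number into the part number.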

Here is the implementation:

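    import java.io.File;
    import java.util.ArrayList;
    import java.util.List;

    import com.amazonaws.services.s3.AmazonS3;
    import com.amazonaws.services.s3.AmazonS3Client;
    import com.amazonaws.services.s3.model.*;
    import com.amazonaws.services.s3.transfer.TransferManager;

    public class AmazonS3Util {

        private static final Logger logger = LogUtil.getLogger();

        public static final long DEFAULT_FILE_PART_SIZE = 5 * 1024 * 1024; // 5MB

        public static long FILE_PART_SIZE = DEFAULT_FILE_PART_SIZE;

        private static AmazonS3 s3Client;

        private static TransferManager transferManager;

        static {
            init();
        }

        private AmazonS3Util() {
        }

        private static void init() {
            // ...
            s3Client = new AmazonS3Client(AmazonUtil.getAwsCredentials());
            transferManager = new TransferManager(AmazonUtil.getAwsCredentials());
        }

        // ...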

        public static void putObjectAsMultiPart(String bucketName, File file) {
            putObjectAsMultiPart(bucketName, file, FILE_PART_SIZE);
        }

        public static void putObjectAsMultiPart(String bucketName, File file, long partSize) {
            List<PartETag> partETags = new ArrayList<PartETag>();
            List<MultiPartFileUploader> uploaders = new ArrayList<MultiPartFileUploader>();

            // Step 1: Initialize.
            InitiateMultipartUploadRequest initRequest = new InitiateMultipartUploadRequest(bucketName, file.getName());
            InitiateMultipartUploadResult initResponse = s3Client.initiateMultipartUpload(initRequest);
            long contentLength = file.length();

            try {
                // Step 2: Upload parts.
                long filePosition = 0;
                for (int i = 1; filePosition < contentLength; i++) {
                    // Last part can be less than part size. Adjust part size.
                    partSize = Math.min(partSize, (contentLength - filePosition));

                    // Create request to upload a part.
                    UploadPartRequest uploadRequest =
                            new UploadPartRequest().
                                    withBucketName(bucketName).withKey(file.getName()).
                                    withUploadId(initResponse.getUploadId()).withPartNumber(i).
                                    withFileOffset(filePosition).
                                    withFile(file).
                                    withPartSize(partSize);

                    uploadRequest.setProgressListener(new UploadProgressListener(file, i, partSize));

                    // Start uploading this part on its own thread.
                    MultiPartFileUploader uploader = new MultiPartFileUploader(uploadRequest);
                    uploaders.add(uploader);
                    uploader.upload();

                    filePosition += partSize;
                }

                // Wait for every uploader thread and collect the ETags in part order.
                for (MultiPartFileUploader uploader : uploaders) {
                    uploader.join();
                    if (uploader.getPartETag() == null) {
                        // run() failed on its own thread, so no ETag was stored for this part.
                        throw new IllegalStateException("A part upload failed for file " + file.getName());
                    }
                    partETags.add(uploader.getPartETag());
                }

                // Step 3: complete.
                CompleteMultipartUploadRequest compRequest =
                        new CompleteMultipartUploadRequest(bucketName,
                                file.getName(),
                                initResponse.getUploadId(),
                                partETags);

                s3Client.completeMultipartUpload(compRequest);
            }
            catch (Throwable t) {
                logger.error("Unable to put object as multipart to Amazon S3 for file " + file.getName(), t);
                s3Client.abortMultipartUpload(
                        new AbortMultipartUploadRequest(
                                bucketName, file.getName(), initResponse.getUploadId()));
            }
        }

        // ...

        private static class UploadProgressListener implements ProgressListener {

            File file;
            int partNo;
            long partLength;

            UploadProgressListener(File file) {
                this.file = file;
            }

            @SuppressWarnings("unused")
            UploadProgressListener(File file, int partNo) {
                this(file, partNo, 0);
            }

            UploadProgressListener(File file, int partNo, long partLength) {
                this.file = file;
                this.partNo = partNo;
                this.partLength = partLength;
            }

            @Override
            public void progressChanged(ProgressEvent progressEvent) {
                switch (progressEvent.getEventCode()) {
                    case ProgressEvent.STARTED_EVENT_CODE:
                        logger.info("Upload started for file " + "\"" + file.getName() + "\"");
                        break;
                    case ProgressEvent.COMPLETED_EVENT_CODE:
                        logger.info("Upload completed for file " + "\"" + file.getName() + "\"" +
                                ", " + file.length() + " bytes data has been transferred");
                        break;
                    case ProgressEvent.FAILED_EVENT_CODE:
                        logger.info("Upload failed for file " + "\"" + file.getName() + "\"" +
                                ", " + progressEvent.getBytesTransfered() + " bytes data has been transferred");
                        break;
                    case ProgressEvent.CANCELED_EVENT_CODE:
                        logger.info("Upload cancelled for file " + "\"" + file.getName() + "\"" +
                                ", " + progressEvent.getBytesTransfered() + " bytes data has been transferred");
                        break;
                    case ProgressEvent.PART_STARTED_EVENT_CODE:
                        logger.info("Upload started at " + partNo + ". part for file " + "\"" + file.getName() + "\"");
                        break;
                    case ProgressEvent.PART_COMPLETED_EVENT_CODE:
                        logger.info("Upload completed at " + partNo + ". part for file " + "\"" + file.getName() + "\"" +
                                ", " + (partLength > 0 ? partLength : progressEvent.getBytesTransfered()) +
                                " bytes data has been transferred");
                        break;
                    case ProgressEvent.PART_FAILED_EVENT_CODE:
                        logger.info("Upload failed at " + partNo + ". part for file " + "\"" + file.getName() + "\"" +
                                ", " + progressEvent.getBytesTransfered() + " bytes data has been transferred");
                        break;
                }
            }

        }

        // Uploads a single part on its own thread, using the shared static s3Client of the outer class.
        private static class MultiPartFileUploader extends Thread {

            private UploadPartRequest uploadRequest;
            private PartETag partETag;

            MultiPartFileUploader(UploadPartRequest uploadRequest) {
                this.uploadRequest = uploadRequest;
            }

            @Override
            public void run() {
                partETag = s3Client.uploadPart(uploadRequest).getPartETag();
            }

            private PartETag getPartETag() {
                return partETag;
            }

            private void upload() {
                start();
            }

        }

    }
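One thing to keep in mind with one thread per part: an exception thrown inside run() is not rethrown by join(), and a very large file creates a very large number of threads. As an alternative, here is a minimal sketch that runs the part uploads on a fixed-size thread pool and collects the results through futures, so that a failed part surfaces in the calling thread. It does not call S3. The `uploadPart` function stands in for the real upload call, and all names in it are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.IntFunction;

// Hypothetical sketch; uploadPart stands in for the real S3 upload call and returns an ETag.
class PooledUploadSketch {

    static List<String> uploadAll(int partCount, int threads, IntFunction<String> uploadPart) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            // Submit one task per part; at most `threads` of them run at the same time.
            List<Future<String>> futures = new ArrayList<>();
            for (int i = 1; i <= partCount; i++) {
                final int partNumber = i;
                Callable<String> task = () -> uploadPart.apply(partNumber);
                futures.add(pool.submit(task));
            }
            // Collect the ETags in part order; get() rethrows a failure from the worker thread.
            List<String> etags = new ArrayList<>();
            for (Future<String> future : futures) {
                etags.add(future.get());
            }
            return etags;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for part uploads", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A part upload failed", e.getCause());
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        // Simulated upload that just builds a fake ETag from the part number.
        System.out.println(uploadAll(4, 2, partNumber -> "etag-" + partNumber));
    }
}
```

With a pool, the number of parallel connections is a tuning parameter that is independent of the number of parts, which may matter once the file is large enough to produce hundreds of parts.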
