AI and Automation: A Double-Edged Sword For Application Security

Learn how AI and automation technology provide great returns but must be approached with caution in order to understand and minimize associated risks.


This is an article from DZone's 2023 Enterprise Security Trend Report.


In recent years, developments in artificial intelligence (AI) and automation technology have drastically reshaped application security. On one hand, progress in AI and automation has strengthened security mechanisms, reduced reaction times, and reinforced system resilience. On the other hand, these same technologies have introduced exploitable biases, encouraged overreliance on automation, and expanded the attack surface available to emerging threats.

These technologies hold immense and growing value for redefining security, but they also bring numerous challenges. Every new technology opens new avenues for exploitation that, unless addressed, can compromise the very security it seeks to improve. Let's explore how AI and automation both help and hurt application security.

Enhanced Threat Detection

AI has evolved from basic anomaly detection to proactive threat response and continuous monitoring. Because cybersecurity teams are often required to do more with less, AI-driven threat detection has become crucial for keeping pace with the increasingly complex and sophisticated threats that organizations face.

AI-powered tools detect attacks in real time and monitor systems continuously, which is critical when threats can emerge suddenly and unexpectedly. Because they learn adaptively, these tools can recognize patterns sooner and act proactively to prevent or de-escalate potential attacks. Learning from past incidents and adapting to new threats also makes systems more resilient, and advanced analytics improve the detection of security breaches and vulnerabilities. The shift toward automated responses is driven by the same need for more efficient use of limited resources.
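To make "adaptive learning" concrete, here is a minimal sketch of a self-updating baseline, assuming the monitored signal is a single numeric metric such as requests per minute. It keeps an exponentially weighted mean and variance and flags values that fall far outside them. The class name and the `alpha`, `threshold`, and `warmup` values are illustrative assumptions rather than settings from any particular product; real AI-powered tools use far richer models.

```python
import math
from dataclasses import dataclass


@dataclass
class AdaptiveBaseline:
    """Exponentially weighted mean/variance that adapts as traffic patterns drift."""
    alpha: float = 0.1      # weight given to each new observation (assumed value)
    threshold: float = 3.0  # flag values more than this many std devs from the mean
    warmup: int = 5         # observations to see before flagging anything
    mean: float = 0.0
    var: float = 0.0
    count: int = 0

    def observe(self, value: float) -> bool:
        """Return True if value looks anomalous; otherwise fold it into the baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False
        std = math.sqrt(self.var)
        is_anomaly = (
            self.count > self.warmup
            and std > 0
            and abs(value - self.mean) > self.threshold * std
        )
        if not is_anomaly:
            # Incremental EWMA update of mean and variance.
            diff = value - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


baseline = AdaptiveBaseline()
for rpm in [90, 110, 90, 110, 90, 110, 90, 110, 500]:
    if baseline.observe(rpm):
        print(f"anomaly: {rpm} requests/min")
```

Skipping the update for flagged values keeps an attacker from slowly dragging the baseline toward malicious traffic, at the cost of repeatedly flagging a legitimate, permanent shift until someone reviews it.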

Table 1 summarizes key developments in AI threat detection and their outcomes:

| Year | Key Developments in AI Threat Detection | Key Challenges and Advancements |
|---|---|---|
| 1950s | Early conceptualization of AI | Threat detection applications were limited; AI primarily focused on symbolic reasoning and basic problem-solving |
| 1980s | Rule-based systems and basic expert systems were introduced for specific threat types | Limited by the complexity of rule creation and the inability to adapt to evolving threats |
| 1990s | Machine learning (ML) algorithms gained popularity and were applied to signature-based threat detection | SVMs, decision trees, and early neural networks were used for signature matching; limited effectiveness against new, unknown threats |
| 2000s | Introduction of behavior-based detection using anomaly detection algorithms | Improved detection of previously unknown threats based on deviations from normal behavior; challenges in distinguishing between legitimate anomalies and actual threats |
| 2010s | Rise of deep learning, particularly convolutional neural networks for image-based threat detection; improved use of ML for behavioral analysis | Enhanced accuracy in image-based threat detection; increased adoption of supervised learning for malware classification |
| 2020s | Continued advancements in deep learning, reinforcement learning, and natural language processing; integration of AI in next-gen antivirus solutions; increased use of threat intelligence and collaborative AI systems | Growing focus on explainable AI, adversarial ML to address security vulnerabilities, and the use of AI in orchestrating threat responses |

Table 1: Evolution of threat detection
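The shift in Table 1 from signature-based to behavior-based detection can be illustrated with a toy comparison. In this sketch the signature database is a hypothetical set of SHA-256 hashes and the behavioral rule is a hypothetical "files written per minute" threshold; both are assumptions chosen only to show why a never-before-seen variant slips past signatures but can still be caught by its behavior.

```python
import hashlib

# Hypothetical signature database: hashes of previously catalogued malware samples.
KNOWN_BAD_HASHES = {hashlib.sha256(b"old-malware-sample").hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Signature-based detection: only recognizes exact, previously seen samples."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


def behavior_anomaly(files_written_per_min: int, baseline: int = 20, factor: int = 10) -> bool:
    """Behavior-based detection: flags activity far above an assumed normal baseline."""
    return files_written_per_min > baseline * factor


def detect(payload: bytes, files_written_per_min: int) -> list[str]:
    """Return which detectors fired for this event."""
    reasons = []
    if signature_match(payload):
        reasons.append("signature")
    if behavior_anomaly(files_written_per_min):
        reasons.append("behavior")
    return reasons


# A new variant that encrypts files rapidly: no signature exists, but the behavior rule fires.
print(detect(b"new-variant", files_written_per_min=5000))
```

The challenge listed for the 2000s shows up here too: the same threshold would also fire on a legitimate bulk job, such as a backup that writes many files, which is exactly the problem of telling legitimate anomalies from actual threats.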

Improvements to Efficiency and Accuracy

Automation marks a critical change in how security teams manage cyber threats: a shift from traditional, passive anomaly detection to active, automated response. Automating incident response not only accelerates the response process but also ensures a consistent, thorough approach to threat management. A notable advancement in this area is the ability of AI systems to act on their own, for example by isolating compromised devices to stop a threat from spreading or by executing complex responses tailored to specific types of attacks.
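Automated, attack-specific responses like these are often expressed as a playbook that maps alert types to containment steps. The sketch below assumes a hypothetical alert taxonomy and hypothetical action names. It only plans the response rather than calling any real EDR or firewall API, which keeps the decision logic easy to test and audit.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ISOLATE_HOST = "isolate_host"
    BLOCK_IP = "block_ip"
    DISABLE_ACCOUNT = "disable_account"
    OPEN_TICKET = "open_ticket"


@dataclass(frozen=True)
class Alert:
    kind: str       # hypothetical taxonomy, e.g. "ransomware", "brute_force"
    host: str
    source_ip: str
    user: str


# Hypothetical playbook: containment steps to run for each alert type.
PLAYBOOK = {
    "ransomware": [Action.ISOLATE_HOST, Action.OPEN_TICKET],
    "brute_force": [Action.BLOCK_IP, Action.OPEN_TICKET],
    "credential_misuse": [Action.DISABLE_ACCOUNT, Action.OPEN_TICKET],
}


def plan_response(alert: Alert) -> list[tuple[Action, str]]:
    """Return (action, target) pairs; unknown alert types fall back to a ticket for a human."""
    targets = {
        Action.ISOLATE_HOST: alert.host,
        Action.BLOCK_IP: alert.source_ip,
        Action.DISABLE_ACCOUNT: alert.user,
        Action.OPEN_TICKET: alert.kind,
    }
    steps = PLAYBOOK.get(alert.kind, [Action.OPEN_TICKET])
    return [(action, targets[action]) for action in steps]


alert = Alert(kind="ransomware", host="laptop-42", source_ip="203.0.113.7", user="alice")
for action, target in plan_response(alert):
    print(action.value, target)
```

Routing unrecognized alert types to a ticket rather than guessing at an action is a deliberate choice: automation handles the well-understood cases, and novel ones still reach a person.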

Automation also lets security teams allocate their resources more strategically, focusing on higher-level tasks and strategy rather than routine threat monitoring and response. By moving from passive detection to active, automated action, AI helps security teams respond to threats faster and more effectively, making cybersecurity efforts more efficient and impactful.

Reducing Human Error

AI is a major step forward in reducing human error and improving overall security. Its capabilities, which include minimizing false positives, prioritizing alerts, strengthening access control, and mitigating insider threats, collectively create a more reliable and efficient security framework.
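Minimizing false positives and prioritizing alerts can start with something as simple as collapsing duplicates and ranking what remains. This is a minimal sketch, assuming each alert carries a model confidence in [0, 1] and a hypothetical 1 to 3 asset-criticality rating; the field names and the 0.5 cutoff are illustrative, and real triage systems weigh many more signals.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RawAlert:
    rule: str
    host: str
    confidence: float       # model score in [0, 1] (assumed)
    asset_criticality: int  # hypothetical scale: 1 (lab box) to 3 (production database)


def triage(alerts: list[RawAlert], min_confidence: float = 0.5) -> list[dict]:
    """Collapse duplicates per (rule, host), drop low-confidence noise, and rank by risk."""
    grouped = defaultdict(list)
    for a in alerts:
        grouped[(a.rule, a.host)].append(a)

    ranked = []
    for (rule, host), group in grouped.items():
        best = max(group, key=lambda a: a.confidence)
        if best.confidence < min_confidence:
            continue  # likely false positive: leave for batch review instead of paging someone
        score = best.confidence * best.asset_criticality
        ranked.append({"rule": rule, "host": host, "count": len(group), "score": round(score, 2)})
    return sorted(ranked, key=lambda r: r["score"], reverse=True)


alerts = [
    RawAlert("sql_injection", "db-1", 0.9, 3),
    RawAlert("sql_injection", "db-1", 0.8, 3),
    RawAlert("port_scan", "lab-7", 0.95, 1),
    RawAlert("odd_login", "web-2", 0.3, 2),
]
for item in triage(alerts):
    print(item)
```

Four raw alerts become two ranked items: the repeated SQL injection alert on a critical database comes first, and the low-confidence login alert never pages anyone.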

Figure 1: Human error resolutions with AI

Over-Reliance on Automation

Incorporating AI and automation into business processes eases the security workload but also broadens the potential attack surface, which is a critical concern. Organizations need robust security protocols tailored specifically to AI to keep it from becoming the weak link in their security framework.

As AI becomes more prevalent, cyber attackers are adapting and gaining a deeper understanding of AI systems, which lets them exploit weaknesses in AI algorithms and models. Cybersecurity strategies must therefore defend against both traditional threats and sophisticated attacks that target AI vulnerabilities. Every AI system, interface, and data point is a possible target, so the security approach must cover every aspect of AI and automation within the organization.
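One concrete example of a security protocol tailored to AI is validating every input at the model's interface, so that malformed or out-of-range data is rejected before inference. The feature schema and ranges below are hypothetical stand-ins for values observed in training data. A check like this stops crude probing and garbage inputs; it does not stop carefully crafted adversarial examples that stay within normal ranges.

```python
import math

# Hypothetical feature schema: name -> (min, max) observed in the training data.
FEATURE_RANGES = {
    "bytes_sent": (0.0, 1e9),
    "session_seconds": (0.0, 86_400.0),
    "failed_logins": (0.0, 1_000.0),
}


class RejectedInput(ValueError):
    """Raised when a request should never reach the model."""


def validate_features(features: dict) -> dict:
    """Reject schema mismatches, non-numeric values, NaN, and out-of-range values."""
    missing = FEATURE_RANGES.keys() - features.keys()
    extra = features.keys() - FEATURE_RANGES.keys()
    if missing or extra:
        raise RejectedInput(f"schema mismatch: missing={sorted(missing)} extra={sorted(extra)}")

    clean = {}
    for name, (low, high) in FEATURE_RANGES.items():
        value = features[name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise RejectedInput(f"{name} must be numeric")
        if math.isnan(value) or not low <= value <= high:
            raise RejectedInput(f"{name}={value} outside [{low}, {high}]")
        clean[name] = float(value)
    return clean


print(validate_features({"bytes_sent": 1200, "session_seconds": 30.5, "failed_logins": 0}))
```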

This landscape requires continuous identification and mitigation of emerging risks: security strategies must be regularly assessed and adapted as new vulnerabilities surface. Protecting against AI- and automation-related threats depends on ongoing vigilance and adaptability.

Exploitable AI Biases

Ensuring the integrity and effectiveness of AI systems means addressing biases in their training data and algorithms, which can skew results and potentially compromise security measures. Ongoing efforts to refine these algorithms focus on using diverse datasets and implementing checks that keep AI decisions fair and unbiased.
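A basic check for skewed decisions is to compare a detector's error rates across groups. The sketch below computes the false positive rate per group, assuming labeled records of the form (group, predicted malicious, actually malicious). Grouping by region and the 5-percentage-point tolerance are illustrative assumptions. The same comparison applied to false negative rates would reveal groups whose attacks the model tends to miss, which is the more directly exploitable kind of bias.

```python
from collections import defaultdict


def false_positive_rates(records) -> dict:
    """Per-group share of truly benign events that the detector flagged as malicious."""
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted_malicious, actually_malicious in records:
        if not actually_malicious:
            benign[group] += 1
            if predicted_malicious:
                flagged[group] += 1
    return {group: flagged[group] / count for group, count in benign.items()}


def groups_with_disparity(records, max_gap: float = 0.05) -> list:
    """Groups whose false positive rate exceeds the best-served group's by more than max_gap."""
    rates = false_positive_rates(records)
    if not rates:
        return []
    best = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - best > max_gap)


# Hypothetical audit data: 100 benign events per region, plus one true attack.
records = (
    [("eu", i < 2, False) for i in range(100)]
    + [("apac", i < 15, False) for i in range(100)]
    + [("eu", True, True)]
)
print(false_positive_rates(records), groups_with_disparity(records))
```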

As seen in Table 2, balancing AI security features with the need for ethical and privacy-conscious use is a significant and ongoing challenge. It demands a comprehensive approach that encompasses technical, legal, and ethical aspects of AI implementation.

| Common Biases in AI | Strategies to Mitigate Bias |
|---|---|
| Training data bias | |
| Algorithmic bias | |
| Privacy concerns | |
| Ethical considerations | |
| Overall mitigation approach | |

Table 2: AI biases and solutions

Potential for Malicious Use

AI and automation present not only advancements but also significant challenges, particularly in how they can be exploited by malicious actors. The automation and learning capabilities of AI can be used to develop more adaptive and resilient malware, presenting a challenge to traditional cybersecurity defenses.

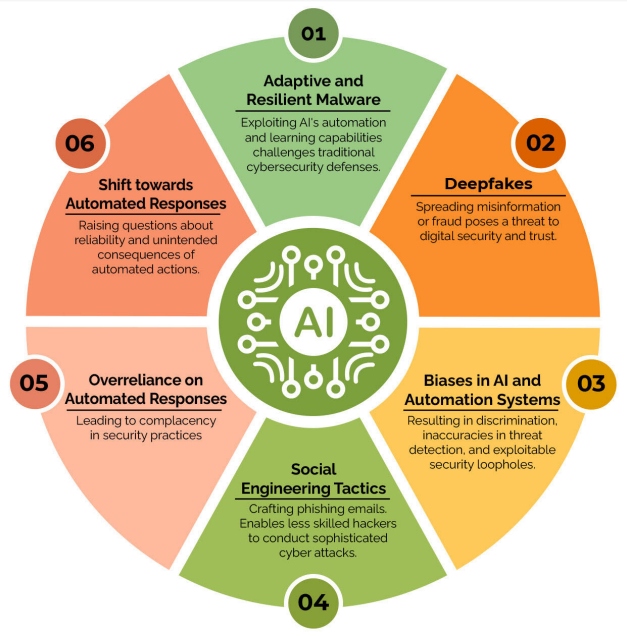

Figure 2: Malicious uses for AI and automation and various challenges

Although AI aims to enhance efficiency, the automated actions it takes raise questions about reliability and potential unintended consequences, which underscores the need to integrate AI into cybersecurity strategies carefully.
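One way to temper reliance on automated actions is a human-in-the-loop gate: the system acts on its own only when it is confident and the action's impact is narrow, and it asks a person otherwise. The thresholds and the `blast_radius` estimate below are hypothetical; each organization would calibrate its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    name: str           # e.g. "isolate_host"
    target: str
    confidence: float   # detector confidence in [0, 1]
    blast_radius: int   # hypothetical estimate of users/services affected


def decide(action: ProposedAction, min_confidence: float = 0.9, max_blast_radius: int = 10) -> str:
    """Auto-execute only narrow, high-confidence actions; route everything else to a human."""
    if not 0.0 <= action.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if action.confidence >= min_confidence and action.blast_radius <= max_blast_radius:
        return "auto_execute"
    return "needs_approval"


print(decide(ProposedAction("isolate_host", "laptop-42", confidence=0.97, blast_radius=1)))
print(decide(ProposedAction("isolate_host", "db-cluster", confidence=0.97, blast_radius=500)))
```

Isolating a single laptop runs automatically, while the same action against a shared database cluster waits for approval even at high confidence, because a false positive there would cause its own outage.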

Negligence and Security Oversight

The emergence of AI and automation has transformed not only security but also regulation. 2023 marked a turning point in the regulation of AI technologies, due largely to their growing sophistication and ubiquity. The overall sentiment leans toward more stringent regulatory measures to ensure the responsible, ethical, and secure use of AI, especially where cybersecurity is concerned.

Initiatives such as the NIST AI Risk Management Framework (AI RMF 1.0) and the proposed AI Accountability Act are central to this challenge. Both aim to set guidelines and standards for AI development, deployment, and management. The NIST framework, which is voluntary, provides a structured approach for assessing and mitigating AI-related risks, organized around four functions: Govern, Map, Measure, and Manage. The AI Accountability Act emphasizes transparency and accountability in AI operations.
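A risk register is one simple way to put the NIST AI RMF's structured approach into practice. The sketch below loosely mirrors the framework's four functions (Govern, Map, Measure, Manage): listing risks corresponds to Map, scoring them to Measure, and prioritizing against a tolerance to Manage, with the tolerance itself standing in for a Govern decision. The 1 to 5 scales, the likelihood-times-impact score, and the example risks are assumptions for illustration; the RMF does not prescribe this formula.

```python
from dataclasses import dataclass, field


@dataclass
class AIRisk:
    description: str
    likelihood: int   # 1 (rare) to 5 (almost certain); assumed scale
    impact: int       # 1 (minor) to 5 (severe); assumed scale
    mitigations: list[str] = field(default_factory=list)

    def score(self) -> int:
        # "Measure": a simple likelihood x impact product (one common convention).
        return self.likelihood * self.impact


def manage(register: list[AIRisk], risk_tolerance: int = 12) -> list[str]:
    """'Manage': risks at or above tolerance, highest first, noting any without a mitigation.

    risk_tolerance stands in for a 'Govern' decision made by policy owners.
    """
    findings = []
    for risk in sorted(register, key=lambda r: r.score(), reverse=True):
        if risk.score() < risk_tolerance:
            continue
        status = "mitigated" if risk.mitigations else "NO MITIGATION"
        findings.append(f"{risk.score():>2} {status}: {risk.description}")
    return findings


# "Map": hypothetical risks identified for an AI-based detector.
register = [
    AIRisk("Training data poisoned via public feedback loop", 3, 5, ["review labels before retraining"]),
    AIRisk("Model endpoint probed to extract decision boundary", 4, 4),
    AIRisk("Detector misses attacks from unfamiliar regions", 2, 4, ["per-region error-rate audit"]),
]
for line in manage(register):
    print(line)
```

Re-running the register on a schedule, as risks and mitigations change, is one lightweight way to make the continuous reassessment the framework calls for routine.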

However, adopting AI and automation also creates significant cybersecurity difficulties. The technical, social, and organizational challenges of implementing AI applications are compounded by the growing cost of integrating robust AI algorithms into existing cybersecurity architectures. Organizations operating in uncertain regulatory environments therefore face the daunting task of balancing the practical implementation of cutting-edge security safeguards with compliance.

Ultimately, this balance is crucial for ensuring that the benefits of AI and automation are used effectively while adhering to regulatory standards and maintaining ethical and secure AI practices.

Conclusion

The dual nature of AI and automation shows that these technologies provide great returns but must be approached with caution so that their associated risks can be understood and minimized. AI and automation strengthen application security through enhanced detection, improved efficiency, and adaptive learning, but they also introduce exploitable biases, potential overreliance on automated systems, and an expanded attack surface for adversaries.

As these technologies evolve, we will need a forward-looking framework that takes a proactive, balanced approach to security. That means not only leveraging the strengths of AI and automation to improve application security but also continuously identifying, assessing, and mitigating the emerging risks they pose. Because these technologies keep evolving, so does our obligation to stay vigilant and adapt to new risks.

Resources

- Rebecca Klar, "Thune, Klobuchar release bipartisan AI bill," The Hill

- National Conference of State Legislatures (NCSL), "Artificial Intelligence 2023 Legislation"

- National Institute of Standards and Technology (NIST), "Artificial Intelligence Risk Management Framework (AI RMF 1.0)"


