A Step-by-Step Guide to Tomcat Performance Monitoring

Monitoring your web apps is important. In this post, we take a step-by-step look at how to monitor your Tomcat-based applications.


Overview

Monitoring the metrics and runtime characteristics of an application server is essential to ensure the adequate functioning of the applications running on that server, as well as to prevent or resolve potential issues in a timely manner.

As far as Java applications go, one of the most commonly used servers is Apache Tomcat, which will be the focus of this article.

Tomcat performance monitoring can be done either by relying on JMX beans or by using a dedicated monitoring tool like MoSKito or JavaMelody.

Of course, it’s important to know what is relevant to monitor and what values are acceptable for the metrics being watched.

In the following sections, let’s take a look at how you can set up Tomcat monitoring and which metrics you can use to keep tabs on performance.

Tomcat Performance Metrics

When checking the performance of an application deployed on a server, there are several areas that can provide clues as to whether everything is working within ideal parameters.

Here are some of the key areas you’ll want to monitor:

- memory usage – this reading is critical, since running low on heap memory will make your application slower and can even lead to an OutOfMemoryError; on the other hand, using very little of the available memory could mean you can reduce the memory allocated to the server and therefore minimize costs.

- garbage collection – since this is a resource-intensive process itself, you have to determine the right frequency at which it should run, as well as whether a sufficient amount of memory is freed up each time.

- thread usage – if there are too many active threads at the same time, this can slow down the application, and even the whole server.

- request throughput – this metric refers to the number of requests the server can handle per unit of time and can help determine your hardware needs.

- number of sessions – a similar measure to the number of requests is the number of sessions that the server can support at a time.

- response time – if your system takes too long to respond to requests, users are likely to quit, so it’s crucial to monitor response time and investigate the potential causes of delays.

- database connection pool – monitoring this can help tune the number of connections in a pool that your application needs.

- error rates – this metric is helpful in identifying any issues in your codebase.

- uptime – this is a simple measure that shows how long your server has been running or down.

The Tomcat server comes to your aid in monitoring performance by providing JMX beans for most of these metrics, which you can inspect using a tool like Tomcat Manager or JavaMelody.
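Besides those tools, the same MBeans can be read programmatically through the standard javax.management API. The following is a minimal sketch, not part of the example application, that reads two JVM-level attributes from the platform MBean server; the class name MBeanReader is hypothetical. Tomcat-specific beans in the Catalina domain are only visible this way when the code runs inside Tomcat’s JVM (a remote JMX connection would also work, but is not shown here).

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.openmbean.CompositeData;

// Minimal sketch: reads MBean attributes from the platform MBean server of the JVM
// this code runs in (for example, code deployed inside Tomcat).
class MBeanReader {

    private static final MBeanServer SERVER = ManagementFactory.getPlatformMBeanServer();

    // Returns the raw value of one attribute of one MBean.
    static Object read(String objectName, String attribute) {
        try {
            return SERVER.getAttribute(new ObjectName(objectName), attribute);
        } catch (JMException e) {
            // Covers malformed names, missing MBeans and missing attributes.
            throw new IllegalStateException("Cannot read " + objectName + "/" + attribute, e);
        }
    }

    public static void main(String[] args) {
        // JVM uptime in milliseconds.
        long uptimeMs = (Long) read("java.lang:type=Runtime", "Uptime");

        // HeapMemoryUsage is CompositeData with the keys init, used, committed and max.
        CompositeData heap = (CompositeData) read("java.lang:type=Memory", "HeapMemoryUsage");
        long usedBytes = (Long) heap.get("used");

        System.out.printf("uptime=%d ms, heap used=%d bytes%n", uptimeMs, usedBytes);
    }
}
```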

In the rest of this article, we’re going to look at each area of performance, the MBean definitions that can help monitor it, and how you can view their values.

But first, let’s define a very simple application that we’re going to use as an example to monitor.

Example Application to Monitor

For this example, we’re going to use a small web service application built with Maven and Jersey that uses an H2 database.

The application will manipulate a simple User entity:

```java
public class User {

    private String email;
    private String name;

    // standard constructors, getters, setters
}
```

The REST web service defines two endpoints on the /users path: one that saves a new User to the database and one that outputs the list of Users in JSON format:

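```java
import java.util.List;

import javax.ws.rs.Consumes;
import javax.ws.rs.GET;
import javax.ws.rs.POST;
import javax.ws.rs.Path;
import javax.ws.rs.Produces;
import javax.ws.rs.core.MediaType;
import javax.ws.rs.core.Response;

@Path("/users")
public class UserService {

    // UserDAO is the application's own data access class (not shown)
    private UserDAO userDao = new UserDAO();

    public UserService() {
        userDao.createTable();
    }

    // Saves the User sent as JSON in the request body
    @POST
    @Consumes(MediaType.APPLICATION_JSON)
    public Response addUser(User user) {
        userDao.add(user);
        return Response.ok().build();
    }

    // Returns all stored Users as JSON
    @GET
    @Produces(MediaType.APPLICATION_JSON)
    public List<User> getUsers() {
        return userDao.findAll();
    }
}
```

Building a REST web service is outside the scope of this piece. For more information, check out our article on Java Web Services.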

Also, note that the examples in this article are tested with Tomcat version 9.0.0.M26. For other versions, the names of beans or attributes may differ slightly.

Tomcat Manager

One way of obtaining the values of the MBeans is through the Manager App that comes with Tomcat. This app is protected, so to access it, you first need to define a user and password by adding the following to the conf/tomcat-users.xml file:

```xml
<role rolename="manager-gui"/>
<role rolename="manager-jmx"/>
<user username="tomcat" password="s3cret" roles="manager-gui, manager-jmx"/>
```

The Manager App interface can be accessed at http://localhost:8080/manager/html. It contains some minimal information on the server status and the deployed applications, and it also lets you deploy a new application.

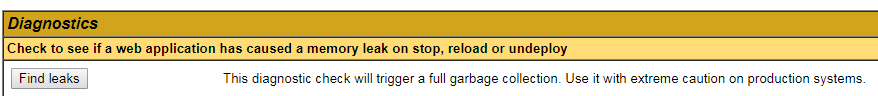

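One interesting feature for the purpose of performance monitoring is the possibility of checking for memory leaks, using the “Find leaks” button in the Diagnostics section of the page.

This checks whether any web application has caused a memory leak when it was stopped, reloaded, or undeployed. Note that the check triggers a full garbage collection, so it should be used with caution on production systems.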

Information on the JMX beans can be found at http://localhost:8080/manager/jmxproxy. This is displayed in a text format, as it is intended to be processed by tools.

To retrieve data about a specific bean, you can add parameters to the URL that represent the name of the bean and attribute you want:

http://localhost:8080/manager/jmxproxy/?get=java.lang:type=Memory&att=HeapMemoryUsage

Overall, this tool can be useful for a quick check, but it’s limited and unreliable; therefore, it’s not recommended for a production instance.
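If you still want to script such quick checks, for example against a development instance, the same URL can be requested with HTTP Basic authentication. The sketch below uses the java.net.http client (Java 11+) and assumes the tomcat/s3cret user defined above, which has the manager-jmx role, and a Tomcat instance on localhost:8080. The class and method names are hypothetical; the bean name is URL-encoded, which the servlet decodes when it reads the parameters.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Sketch of a small client for the Manager's jmxproxy "get" command.
// Assumes the tomcat/s3cret user with the manager-jmx role from tomcat-users.xml.
class JmxProxyClient {

    // Builds e.g. http://localhost:8080/manager/jmxproxy/?get=java.lang%3Atype%3DMemory&att=HeapMemoryUsage
    static URI getUri(String base, String bean, String attribute) {
        String query = "get=" + URLEncoder.encode(bean, StandardCharsets.UTF_8)
                + "&att=" + URLEncoder.encode(attribute, StandardCharsets.UTF_8);
        return URI.create(base + "/manager/jmxproxy/?" + query);
    }

    // HTTP Basic credentials expected by the Manager app.
    static String basicAuth(String user, String password) {
        String token = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(token.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(
                        getUri("http://localhost:8080", "java.lang:type=Memory", "HeapMemoryUsage"))
                .header("Authorization", basicAuth("tomcat", "s3cret"))
                .GET()
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The body is plain text meant for tools; print it as-is.
        System.out.println(response.statusCode() + "\n" + response.body());
    }
}
```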

Next, let’s move on to a tool that provides a friendlier user interface.


Enabling Tomcat Performance Monitoring With JavaMelody

If you’re using Maven, simply add the javamelody-core dependency to the pom.xml:

```xml
<dependency>
    <groupId>net.bull.javamelody</groupId>
    <artifactId>javamelody-core</artifactId>
    <version>1.69.0</version>
</dependency>
```

In this way, you can enable monitoring for your web application.

After deploying the application on Tomcat, you can access the monitoring screens at the /monitoring URL.

JavaMelody contains useful graphs for displaying information related to various performance measures, as well as a way to find the values of the Tomcat JMX beans.

Most of these beans are JVM-specific and not application-specific.

Let’s go through each of the most important metrics – and see what MBeans are available and other ways to monitor them.


Memory Usage

Monitoring the used and available memory is helpful both for ensuring proper functioning and for statistical purposes. When the system can no longer create new objects due to lack of memory, the JVM will throw an OutOfMemoryError.

Also, you should note that a constant increase in memory usage without a corresponding increase in activity is indicative of a memory leak.

Generally, it’s difficult to set a minimum absolute value for the available memory; instead, you should base it on the observed trends of your particular application. The maximum value should, of course, not exceed the size of the available physical RAM.
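One way to observe such a trend, rather than eyeballing a graph, is to fit a line through periodic samples of used heap, ideally taken right after garbage collection so that the usual sawtooth pattern does not hide the underlying growth. The sketch below uses an ordinary least-squares slope; HeapTrend, its methods, and the threshold are hypothetical and would need tuning for your own application.

```java
// Hypothetical helper: estimates the trend of heap usage samples (for example,
// used MB recorded right after each GC) with an ordinary least-squares slope.
class HeapTrend {

    // Slope in units per sample: positive means usage keeps growing between samples.
    static double slope(double[] samples) {
        int n = samples.length;
        if (n < 2) {
            throw new IllegalArgumentException("need at least two samples");
        }
        double meanX = (n - 1) / 2.0; // x values are 0, 1, ..., n-1
        double meanY = 0;
        for (double y : samples) {
            meanY += y;
        }
        meanY /= n;
        double num = 0;
        double den = 0;
        for (int i = 0; i < n; i++) {
            num += (i - meanX) * (samples[i] - meanY);
            den += (i - meanX) * (i - meanX);
        }
        return num / den;
    }

    // Flags a possible leak when fitted growth exceeds a threshold chosen for your
    // application; the threshold is an assumption, not a standard value.
    static boolean looksLikeLeak(double[] postGcUsedMb, double maxGrowthMbPerSample) {
        return slope(postGcUsedMb) > maxGrowthMbPerSample;
    }
}
```

A steadily positive slope over many samples, while traffic stays flat, is the pattern described above; a single spike barely moves the fitted line.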

The minimum and maximum heap size can be set in Tomcat by adding the following parameters to CATALINA_OPTS (the line below uses Windows batch syntax, as in bin/setenv.bat):

```bat
set CATALINA_OPTS=%CATALINA_OPTS% -Xms1024m -Xmx1024m
```

Oracle recommends setting the same value for the two arguments to minimize garbage collections.
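After restarting Tomcat, you may want to confirm that these flags actually reached the JVM. This is a sketch under the assumption that it runs inside the Tomcat JVM (for example, from a servlet or a startup listener); HeapFlags is a hypothetical name, and only plain numbers with k/m/g suffixes are parsed.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.Locale;

// Sketch: reports the -Xms/-Xmx values this JVM was started with, for example to check
// that CATALINA_OPTS was picked up. Only plain numbers and k/m/g suffixes are handled.
class HeapFlags {

    // Converts a size such as "1024m" into bytes.
    static long parseSize(String value) {
        String v = value.trim().toLowerCase(Locale.ROOT);
        long multiplier = 1;
        switch (v.charAt(v.length() - 1)) {
            case 'k': multiplier = 1024L; break;
            case 'm': multiplier = 1024L * 1024; break;
            case 'g': multiplier = 1024L * 1024 * 1024; break;
            default: break;
        }
        if (multiplier != 1) {
            v = v.substring(0, v.length() - 1);
        }
        return Long.parseLong(v) * multiplier;
    }

    // Value of the last occurrence of a flag such as "-Xmx" (the JVM uses the last one), or -1.
    static long flag(List<String> jvmArgs, String prefix) {
        long result = -1;
        for (String arg : jvmArgs) {
            if (arg.startsWith(prefix)) {
                result = parseSize(arg.substring(prefix.length()));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        long xms = flag(jvmArgs, "-Xms");
        long xmx = flag(jvmArgs, "-Xmx");
        System.out.println("-Xms=" + xms + " bytes, -Xmx=" + xmx + " bytes, equal=" + (xms > 0 && xms == xmx));
        // Maximum amount of memory the JVM will attempt to use; may be slightly below -Xmx.
        System.out.println("Runtime.maxMemory()=" + Runtime.getRuntime().maxMemory() + " bytes");
    }
}
```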

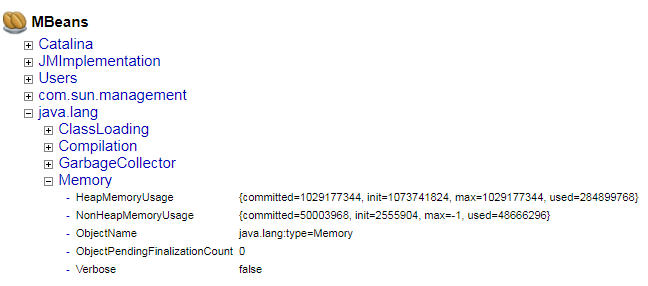

To view the available memory, you can inspect the MBean java.lang:type=Memory with the attribute HeapMemoryUsage:

The MBeans page can be accessed at the /monitoring?part=mbeans URL.

Also, the java.lang:type=MemoryPool MBeans (one per memory pool, identified by a name key) show the memory usage of each pool, covering both heap and non-heap memory.
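The same information is available through the typed java.lang.management API, which can be handy for logging it from inside the application. This is a minimal sketch that reads the current JVM only; MemoryReport and usedPercent are hypothetical names, and the pool names printed depend on the garbage collector in use.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

// Sketch: typed access to the data behind java.lang:type=Memory (HeapMemoryUsage)
// and the java.lang:type=MemoryPool beans, read inside the current JVM.
class MemoryReport {

    // Percentage of the maximum in use; -1 when no maximum is defined.
    static double usedPercent(MemoryUsage usage) {
        long max = usage.getMax();
        return max <= 0 ? -1 : 100.0 * usage.getUsed() / max;
    }

    public static void main(String[] args) {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        System.out.printf("heap: used=%d MB, committed=%d MB, max=%d MB (%.1f%% of max)%n",
                heap.getUsed() >> 20, heap.getCommitted() >> 20, heap.getMax() >> 20, usedPercent(heap));

        // One bean per pool; pool names depend on the garbage collector in use.
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (usage != null) {
                System.out.printf("%-35s %-16s used=%d KB%n",
                        pool.getName(), pool.getType(), usage.getUsed() >> 10);
            }
        }
    }
}
```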

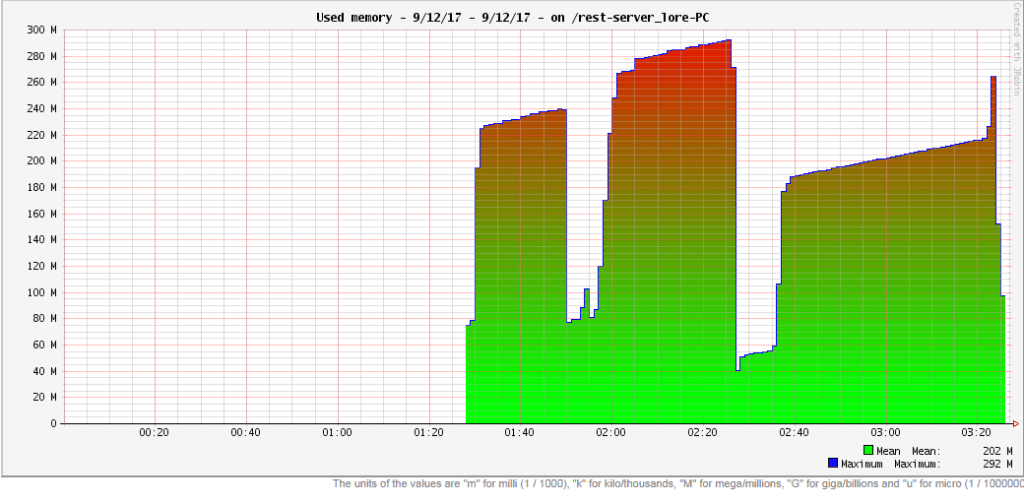

Since this bean only shows the current status of the memory, you can also check the “Used memory” graph of JavaMelody to see the evolution of memory usage over a period of time:

In the graph, you can see the highest memory-use reading was 292 MB, while the average is 202 MB of the allocated 1024 MB, which means the initial value is more than enough.

Of note here is that JavaMelody runs on the same Tomcat server, which does have a small impact on the readings.


Garbage Collection

This is the process through which unused objects are released so that memory can be freed up again. With HotSpot’s parallel collector, if more than 98% of the total time is spent doing garbage collection and less than 2% of the heap is recovered, the JVM will throw an OutOfMemoryError with the message “GC overhead limit exceeded.”

This is usually indicative of a memory leak, so it’s a good idea to watch for values approaching these limits and investigate the code.

To check these values, you can take a look at the java.lang:type=GarbageCollector MBeans (one per collector), particularly the LastGcInfo attribute. This shows information about the memory status, duration, and thread count of the last GC run.
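LastGcInfo only describes the most recent collection. To see how close you are to the limits described above, a rough overhead figure can be derived from the cumulative CollectionTime attribute sampled at two points in time. This is a sketch, not a precise measure: summing collectors and dividing by wall-clock time is an approximation, and GcOverhead is a hypothetical name.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.concurrent.TimeUnit;

// Sketch: approximate share of wall-clock time spent in GC over a sampling interval,
// based on the cumulative CollectionTime attribute of the GarbageCollector MBeans.
class GcOverhead {

    // Accumulated collection time (ms) over all collectors; undefined (-1) values are skipped.
    static long totalGcTimeMs() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime();
            if (t > 0) {
                total += t;
            }
        }
        return total;
    }

    // Percentage of the interval spent in GC, given GC times and wall-clock times in ms.
    static double overheadPercent(long gcMsBefore, long gcMsAfter, long wallMsBefore, long wallMsAfter) {
        long wallMs = wallMsAfter - wallMsBefore;
        if (wallMs <= 0) {
            throw new IllegalArgumentException("interval must be positive");
        }
        return 100.0 * (gcMsAfter - gcMsBefore) / wallMs;
    }

    public static void main(String[] args) throws InterruptedException {
        long gc0 = totalGcTimeMs();
        long t0 = TimeUnit.NANOSECONDS.toMillis(System.nanoTime());
        Thread.sleep(5_000); // sampling interval; a real monitor would sample on a schedule
        long gc1 = totalGcTimeMs();
        long t1 = TimeUnit.NANOSECONDS.toMillis(System.nanoTime());
        System.out.printf("GC overhead: %.2f%%%n", overheadPercent(gc0, gc1, t0, t1));

        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(gc.getName() + ": collections=" + gc.getCollectionCount()
                    + ", timeMs=" + gc.getCollectionTime());
        }
    }
}
```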

A full garbage collection cycle can be triggered from JavaMelody using the “Execute the garbage collection” link.

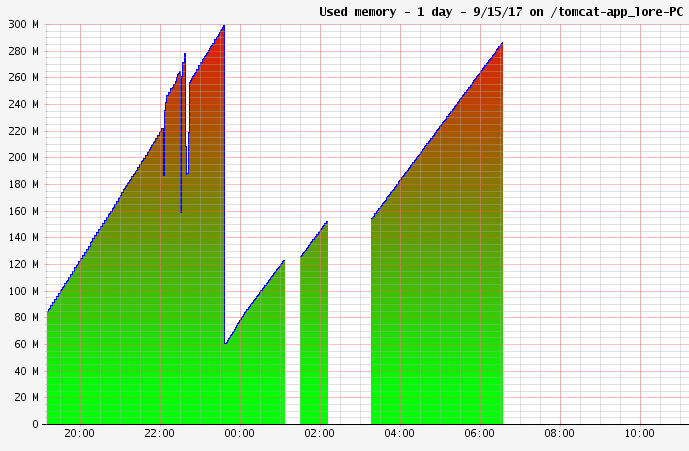

Let’s have a look at the evolution of the memory usage – before and after garbage collection:

In the case of the example app, the GC was run at 23:30, and the graph shows that a large percentage of memory was reclaimed.


Thread Usage

To find the status of the in-use threads, Tomcat provides the ThreadPool MBean (Catalina:type=ThreadPool, with one bean per connector). The attributes currentThreadsBusy, currentThreadCount, and maxThreads provide the number of currently busy threads, the number of threads currently in the thread pool, and the maximum number of threads that can be created.

By default, a Tomcat connector uses a maxThreads value of 200.

If you expect a large number of concurrent requests, this count can naturally be increased by modifying the conf/server.xml file:

```xml
<Connector port="8080" protocol="HTTP/1.1"
           connectionTimeout="20000"
           redirectPort="8443"
           maxThreads="400"/>
```

Alternatively, if the system performs poorly with a high thread count, you can tune and adjust the value. What’s important here is a good battery of performance tests to put load on the system and see how the application and the server handle that load.
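To keep an eye on thread usage while running those tests, the ThreadPool attributes can be turned into a utilization figure. This sketch assumes it runs inside Tomcat’s JVM, where the Catalina MBeans are registered with the platform MBean server; anywhere else the query simply finds no pools. ThreadPoolUtilization is a hypothetical name, and which utilization level should worry you is something your load tests have to tell you.

```java
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Sketch: busy-thread utilization for each Tomcat connector thread pool.
class ThreadPoolUtilization {

    // Share of maxThreads that is currently busy, in percent.
    static double utilizationPercent(int currentThreadsBusy, int maxThreads) {
        if (maxThreads <= 0) {
            throw new IllegalArgumentException("maxThreads must be positive");
        }
        return 100.0 * currentThreadsBusy / maxThreads;
    }

    // Keys are each pool's "name" property as registered by Tomcat.
    static Map<String, Double> sample() {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        Map<String, Double> result = new LinkedHashMap<>();
        try {
            for (ObjectName name : server.queryNames(new ObjectName("Catalina:type=ThreadPool,*"), null)) {
                if (name.getKeyProperty("subType") != null) {
                    continue; // skip helper beans registered under the same type
                }
                int busy = ((Number) server.getAttribute(name, "currentThreadsBusy")).intValue();
                int max = ((Number) server.getAttribute(name, "maxThreads")).intValue();
                if (max > 0) {
                    result.put(name.getKeyProperty("name"), utilizationPercent(busy, max));
                }
            }
        } catch (JMException e) {
            throw new IllegalStateException(e);
        }
        return result;
    }
}
```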


Request Throughput and Response Time

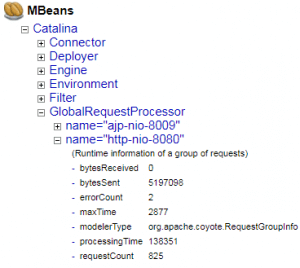

For determining the number of requests in a period of time, you can make use of the MBean Catalina:type=GlobalRequestProcessor, which has attributes like requestCount and errorCount, representing the total number of requests processed and errors encountered.

The maxTime attribute shows the longest time taken to process a single request, while processingTime represents the total time spent processing all requests (both in milliseconds):
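Because requestCount and processingTime are running totals, throughput and average response time for a period come from the difference between two readings. The following is a small sketch of that arithmetic; RequestStats and its Sample holder are hypothetical, and the readings themselves would come from the MBean via JMX.

```java
// Sketch: the GlobalRequestProcessor counters are cumulative, so rates for a period
// come from the difference between two readings taken at known times.
class RequestStats {

    // Hypothetical holder for one reading of requestCount and processingTime (ms).
    static final class Sample {
        final long timestampMs;
        final long requestCount;
        final long processingTimeMs;

        Sample(long timestampMs, long requestCount, long processingTimeMs) {
            this.timestampMs = timestampMs;
            this.requestCount = requestCount;
            this.processingTimeMs = processingTimeMs;
        }
    }

    // Requests handled per second between the two readings.
    static double requestsPerSecond(Sample before, Sample after) {
        long elapsedMs = after.timestampMs - before.timestampMs;
        if (elapsedMs <= 0) {
            throw new IllegalArgumentException("readings must be in chronological order");
        }
        return (after.requestCount - before.requestCount) * 1000.0 / elapsedMs;
    }

    // Average processing time per request in the interval; 0 if no requests arrived.
    static double averageResponseMs(Sample before, Sample after) {
        long requests = after.requestCount - before.requestCount;
        return requests <= 0 ? 0.0 : (double) (after.processingTimeMs - before.processingTimeMs) / requests;
    }
}
```

For example, 600 additional requests and 30,000 additional milliseconds of processing time over one minute give 10 requests per second and a 50 ms average.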

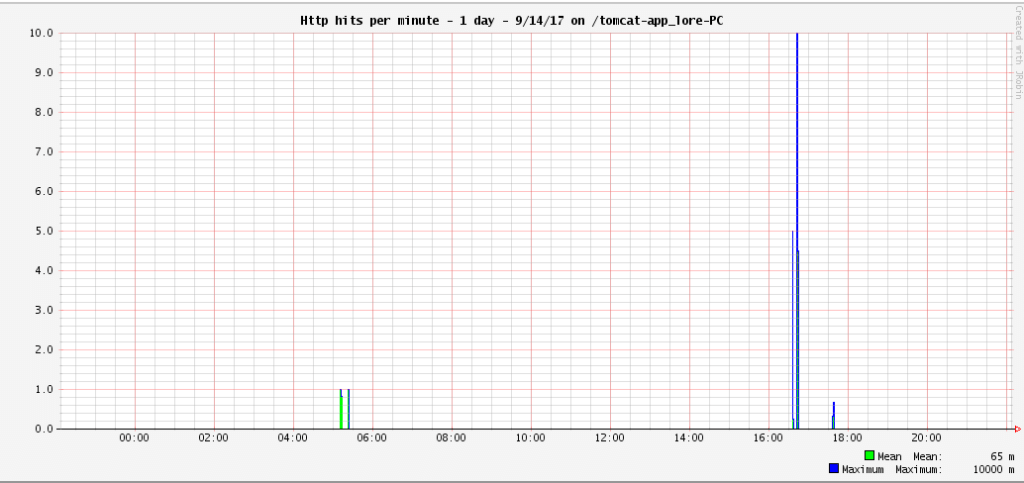

The disadvantage of viewing this MBean directly is that it includes all the requests made to the server. To isolate the HTTP requests, you can check out the “HTTP hits per minute” graph of the JavaMelody interface.

Let’s send a request that retrieves the list of users, then a set of requests to add a user and display the list again:

You can see the number of requests sent around 17:00 displayed in the chart, with an average execution time of 65 ms.
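If you want to reproduce this kind of traffic, a small client is enough. This sketch uses the java.net.http client (Java 11+); the BASE URL is hypothetical and has to be adjusted to your application’s context path and Jersey servlet mapping, and the JSON is built naively, which is only safe for simple test values without quotes or backslashes.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch of the test traffic: one GET, then several POSTs, then another GET.
// BASE is a hypothetical URL; adjust it to your context path and servlet mapping.
class UserTraffic {

    static final String BASE = "http://localhost:8080/users-app/rest/users";

    // Naive JSON for the User entity; fine for simple test values only.
    static String userJson(String email, String name) {
        return String.format("{\"email\":\"%s\",\"name\":\"%s\"}", email, name);
    }

    static HttpRequest listUsers(URI uri) {
        return HttpRequest.newBuilder(uri).header("Accept", "application/json").GET().build();
    }

    static HttpRequest addUser(URI uri, String email, String name) {
        return HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(userJson(email, name)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        URI uri = URI.create(BASE);
        System.out.println(client.send(listUsers(uri), HttpResponse.BodyHandlers.ofString()).body());
        for (int i = 0; i < 10; i++) {
            HttpResponse<String> r = client.send(
                    addUser(uri, "user" + i + "@example.com", "User " + i),
                    HttpResponse.BodyHandlers.ofString());
            System.out.println("POST -> " + r.statusCode());
        }
        System.out.println(client.send(listUsers(uri), HttpResponse.BodyHandlers.ofString()).body());
    }
}
```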

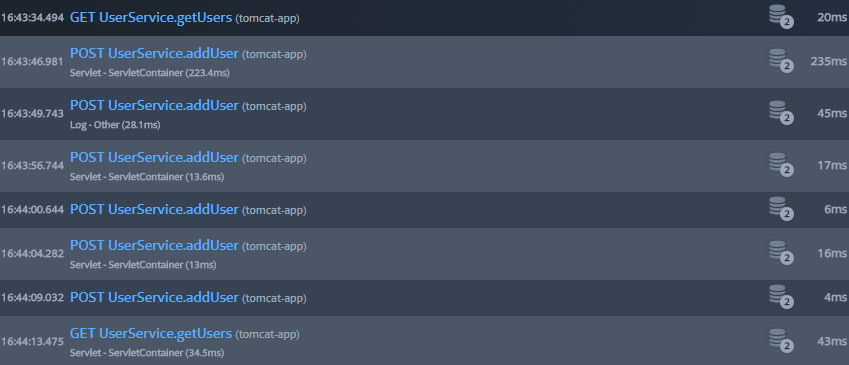

JavaMelody provides high-level information on all the requests and the average response time. However, if you want more detailed knowledge of each request, you can add another tool like Stackify’s Prefix, which monitors the performance of the application per individual web request.

Another advantage of Prefix is that you can see which application each request belongs to, in case you have multiple applications deployed on the same Tomcat server.

In order to use both JavaMelody and Prefix, you have to disable the gzip compression of the JavaMelody monitoring reports to avoid encoding everything twice. To do that, simply add the gzip-compression-disabled init parameter to the MonitoringFilter in the web.xml of the application:

```xml
<filter>
    <filter-name>javamelody</filter-name>
    <filter-class>net.bull.javamelody.MonitoringFilter</filter-class>
    <init-param>
        <param-name>gzip-compression-disabled</param-name>
        <param-value>true</param-value>
    </init-param>
</filter>
```

Next, download Prefix, then create a setenv.bat (setenv.sh for Unix systems) file in the bin directory of the Tomcat installation. In this file, add the -javaagent parameter to CATALINA_OPTS to enable Prefix profiling for the Tomcat server:

```bat
set CATALINA_OPTS=%CATALINA_OPTS% -javaagent:"C:\Program Files (x86)\StackifyPrefix\java\lib\stackify-java-apm.jar"
```

Now you can access the Prefix reports at http://localhost:2012/ and view the time at which each request was executed and how long it took.

This is naturally very useful for tracking down the cause of any lag in your application.

Database Connections

Connecting to a database is an expensive operation, which is why it’s important to use a connection pool.

Tomcat provides a way to configure a JNDI data source that uses connection pooling, by adding a Resource element to the conf/context.xml file. Note that Tomcat 9 uses Apache Commons DBCP 2 by default, where the pool size and wait limits are called maxTotal and maxWaitMillis; the older maxActive and maxWait attribute names are ignored:

```xml
<Resource
    name="jdbc/MyDataSource"
    auth="Container"
    type="javax.sql.DataSource"
    maxTotal="100"
    maxIdle="30"
    maxWaitMillis="10000"
    driverClassName="org.h2.Driver"
    url="jdbc:h2:mem:myDb;DB_CLOSE_DELAY=-1"
    username="sa"
    password="sa"
/>
```

Then, the MBean Catalina:type=DataSource can display information regarding the JNDI data source, such as numActive and numIdle, representing the number of active and idle connections.
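To relate these counts to the pool size, numActive can be compared with the pool’s configured maximum (100 in the Resource above). This sketch assumes it runs inside Tomcat’s JVM; the maximum is passed in by the caller rather than read over JMX, beans that lack the two attributes are skipped, and PoolUsage is a hypothetical name. A pool that stays close to 100% active means new requests have to wait for a free connection.

```java
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Sketch: read numActive/numIdle from the DataSource MBeans and relate numActive
// to the pool's configured maximum, which the caller supplies.
class PoolUsage {

    // Share of the configured maximum that is currently checked out, in percent.
    static double activePercent(int numActive, int maxTotal) {
        if (maxTotal <= 0) {
            throw new IllegalArgumentException("maxTotal must be positive");
        }
        return 100.0 * numActive / maxTotal;
    }

    // Maps each DataSource MBean name to {numActive, numIdle}; empty outside Tomcat.
    static Map<String, int[]> sample() {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        Map<String, int[]> result = new LinkedHashMap<>();
        try {
            for (ObjectName name : server.queryNames(new ObjectName("Catalina:type=DataSource,*"), null)) {
                try {
                    int active = ((Number) server.getAttribute(name, "numActive")).intValue();
                    int idle = ((Number) server.getAttribute(name, "numIdle")).intValue();
                    result.put(name.toString(), new int[] {active, idle});
                } catch (JMException e) {
                    // Skip beans that do not expose these attributes.
                }
            }
        } catch (JMException e) { // malformed query name
            throw new IllegalStateException(e);
        }
        return result;
    }
}
```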

For the database connections to be displayed in the JavaMelody interface, the JNDI data source has to be named MyDataSource. Afterwards, you can consult graphs such as “SQL hits per minute”, “SQL mean times” and “% of SQL errors.”

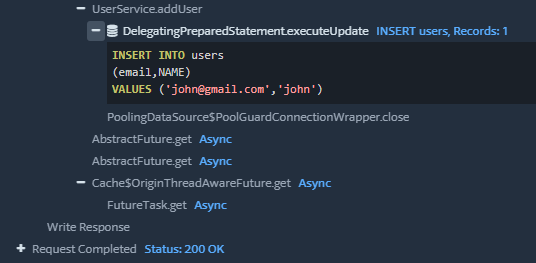

For more detail on each SQL command sent to the database, you can consult Prefix for each HTTP request. The requests that involve a database connection are marked with a database icon.

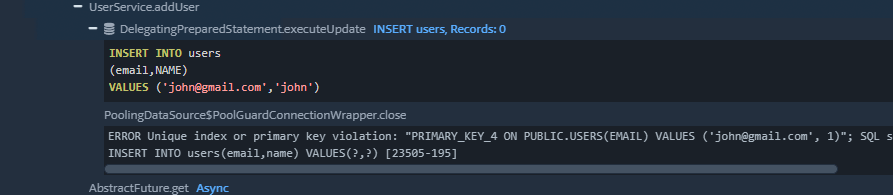

Prefix will display the SQL query that was generated by the application. Let’s see the data recorded by Prefix for a call to the addUser() endpoint method:

The screenshot above shows the SQL code, as well as the result of the execution.

If there is an SQL error, Prefix will show it as well. For example, if someone attempts to add a user with an existing email address, this causes a primary key constraint violation:

The tool shows the SQL error message, as well as the script that caused it.

Error Rates

Errors are a sign that your application is not performing as expected, so it’s important to monitor the rate at which they occur. Tomcat does not provide a dedicated MBean for the error rate (the errorCount attribute of GlobalRequestProcessor only gives a running total), but you can use other tools to find this information.
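If you prefer to compute a rate yourself, the cumulative errorCount and requestCount attributes of Catalina:type=GlobalRequestProcessor, covered earlier, can be sampled at two points in time. This is a minimal sketch of that arithmetic; ErrorRate and its alert rule are hypothetical, and the thresholds are assumptions you would choose for your own service.

```java
// Sketch: turns cumulative errorCount/requestCount readings from
// Catalina:type=GlobalRequestProcessor into an error rate for an interval.
class ErrorRate {

    // Percentage of requests in the interval that ended in an error.
    static double percent(long requestsBefore, long errorsBefore, long requestsAfter, long errorsAfter) {
        long requests = requestsAfter - requestsBefore;
        long errors = errorsAfter - errorsBefore;
        return requests <= 0 ? 0.0 : 100.0 * errors / requests;
    }

    // Hypothetical alert rule: ignore intervals with too little traffic to be meaningful.
    static boolean shouldAlert(long requests, double errorPercent, long minRequests, double maxErrorPercent) {
        return requests >= minRequests && errorPercent > maxErrorPercent;
    }
}
```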

Let’s introduce an error in the example application by writing an incorrect name for the JNDI data source, and see how the performance tools behave.

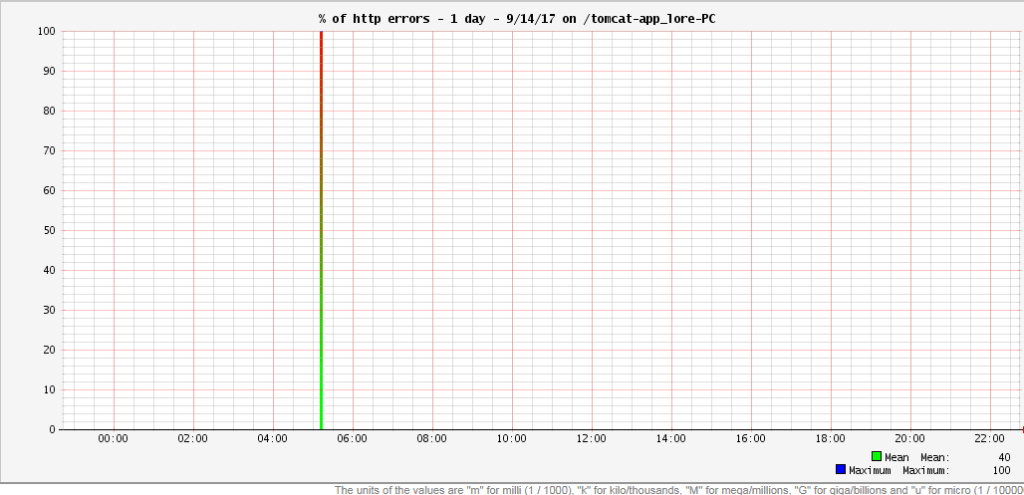

JavaMelody provides a “% of HTTP errors” chart which shows what percentage of requests at a given time resulted in an error:

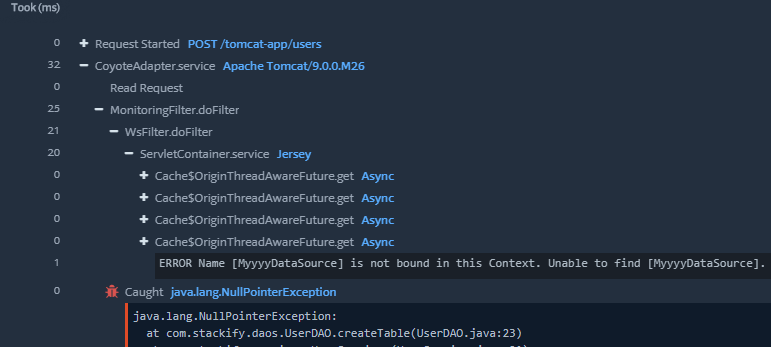

This shows you that an error occurred, but it’s not very helpful in identifying the error. For this latter purpose, you can turn to Prefix again – which highlights HTTP requests that ended with an error code:


If you select this request, Prefix will display details regarding the endpoint that was accessed and the error encountered:

According to this, the error happened when accessing the /users endpoint, and the cause is “MyyyDataSource is not bound in this context,” meaning the JNDI data source with the incorrect name was not found.

Conclusion

Monitoring the performance of a Tomcat server is crucial to successfully running your Java applications in production. This means ensuring that the application responds to requests without significant delays, as well as identifying any potential errors or memory leaks in your code.

Tomcat anticipates this need by providing a series of performance-related JMX beans that you can monitor. And of course, a production-grade APM tool such as Stackify’s Prefix can make the task a lot easier and more scalable.

Overall, this is data you always need to keep track of in production and understand well, to stay ahead of the issues that will inevitably come up.

Published at DZone with permission of Eugen Paraschiv, DZone MVB. See the original article here.

