Lessons Learned When Migrating Service Virtualization to OpenSource

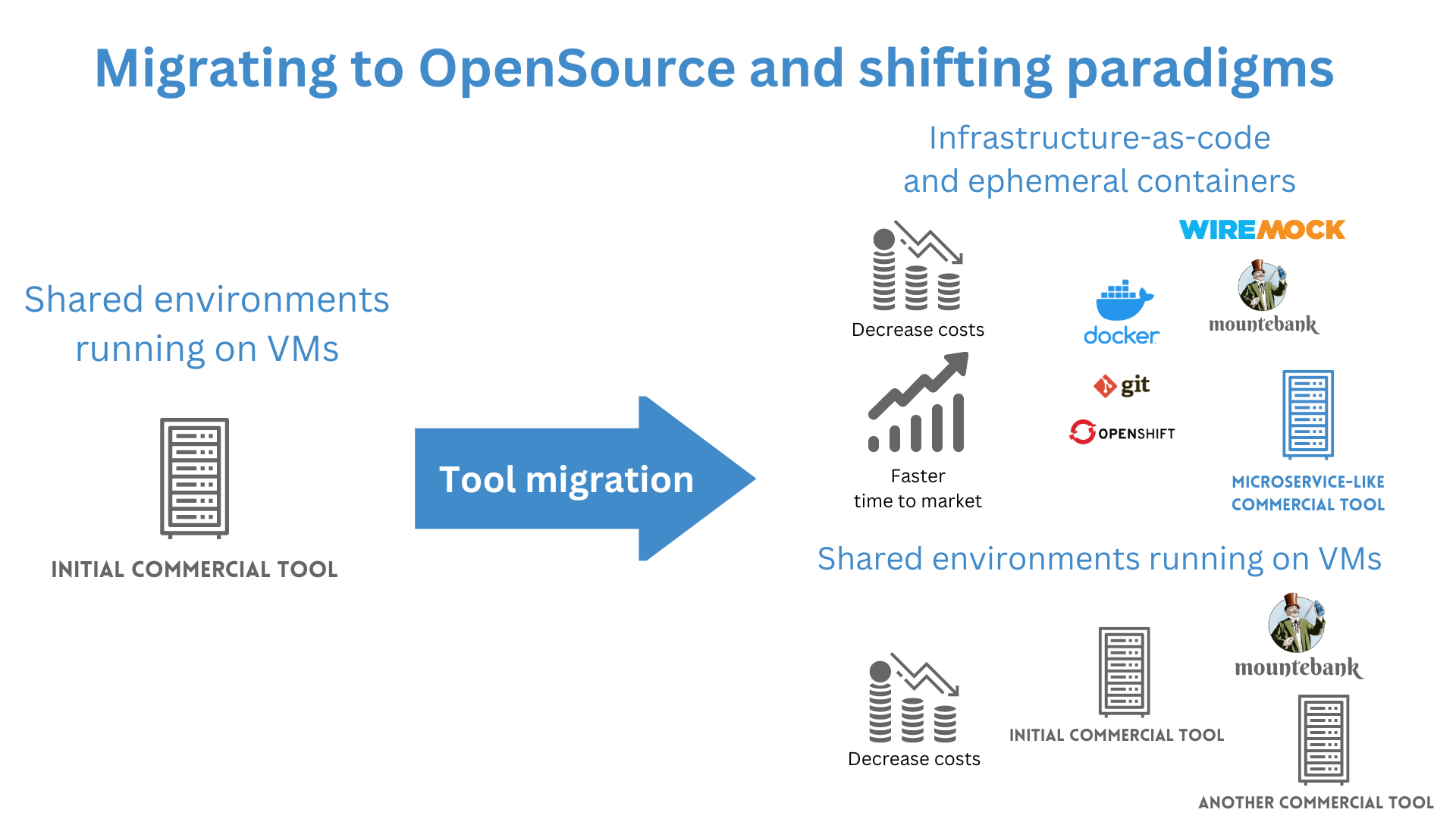

A high-level view of migrating from a service virtualization tool to OpenSource API simulators, and how three teams migrated their virtual services to new tools.

Key Takeaways

- You can migrate your service virtualization to OpenSource tools to cut tooling costs, but you might need to supplement them with other commercial offerings to cover missing protocol support, e.g., IBM MQ.

- Decoupling the teams' schedules and allowing them to migrate at their chosen pace has proven to be the most effective approach.

- Use an internal mailing list and brown bag lunches to share knowledge about migration, best practices, common mistakes, and other learnings.

- Manage the lagging cohort: allow teams to stay with an existing tool, or to postpone their migration, if the budget allows.

- Look for tools compatible with an infrastructure-as-code approach with ephemeral containers if that is what you already use.

- Sharing the configuration files and simulators between teams can save you time.

Introduction

I have worked closely with three teams at a large retail bank as a vendor representative, helping them migrate from a commercial service virtualization tool to OpenSource WireMock and another less costly commercial tool. I have also been briefed on how other teams at the bank have migrated to tools like Mountebank.

Most virtual services were migrated to the OpenSource tools, but the teams had to supplement them with commercial offerings. Selected teams also moved away from a centralized, monolithic-like service virtualization approach to an ephemeral, distributed, microservice-like API simulation approach.

This article gives a high-level view of what happened at the bank and describes how those three teams migrated their virtual services to new tools.

Three Teams Migrating Virtual Services

I worked closely with three teams at the bank: two performance testing teams and one QA team running system tests.

The three teams decided to move off a commercial service virtualization tool to OpenSource WireMock for HTTP and to cover the missing IBM MQ protocol support with another commercial offering that fits the new world of microservices and ephemeral containers.

They also decided to move away from running on VMs and to use OpenShift instead. The two main reasons for the migration were cost-cutting and a move to a new infrastructure paradigm based on ephemeral containers in a distributed OpenShift environment. The estimated tooling cost saving was 51% per year. Moving to ephemeral containers in OpenShift was meant to cut release cycle times and shift testing even earlier in the life cycle than was possible in traditional shared environments. WireMock and the selected commercial tool are lightweight in disk, memory, and CPU usage, have only one deployable component, and fit this paradigm well.

The migration to WireMock took six months. The teams had to build a dashboard on top of many WireMock instances so they could manage multiple virtual services (or API mocks and simulators, as some called them) in one place; this took two months. Migrating the services themselves took one month, because it was done manually. Testing the new virtual services was interrupted by other projects in flight, so it was rescheduled several times and eventually took 2.5 months. The teams also needed a couple of weeks to get up to speed with the new OpenSource tool.
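A dashboard of this kind can start as little more than a registry that maps each virtual service to the WireMock instance serving it and queries that instance's admin API. The sketch below is a hypothetical illustration, not the bank's dashboard: the `SimulatorRegistry` class, the service names, and the URLs are assumptions. It relies only on WireMock's standard `GET /__admin/mappings` endpoint, whose JSON response includes a `meta.total` stub count.

```python
import json
import urllib.request
from dataclasses import dataclass, field


@dataclass
class SimulatorRegistry:
    """Hypothetical registry: virtual service name -> WireMock base URL."""

    instances: dict[str, str] = field(default_factory=dict)

    def register(self, service: str, base_url: str) -> None:
        # Strip a trailing slash so URL joins stay predictable.
        self.instances[service] = base_url.rstrip("/")

    def mappings_url(self, service: str) -> str:
        # WireMock lists its loaded stub mappings under /__admin/mappings.
        return f"{self.instances[service]}/__admin/mappings"

    def stub_count(self, service: str, timeout: float = 5.0) -> int:
        # Ask one WireMock instance how many stubs it currently holds.
        with urllib.request.urlopen(self.mappings_url(service), timeout=timeout) as resp:
            payload = json.load(resp)
        return payload["meta"]["total"]

    def summary(self) -> dict[str, object]:
        # One status entry per service; unreachable instances are reported, not raised.
        status: dict[str, object] = {}
        for service in sorted(self.instances):
            try:
                status[service] = self.stub_count(service)
            except OSError as exc:  # URLError and timeouts are OSError subclasses
                status[service] = f"unreachable: {exc}"
        return status
```

Reporting unreachable instances instead of failing keeps the overview usable when individual simulators are being restarted or re-created.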

The teams deployed the new API simulators in a new OpenShift cluster that the bank had provisioned. They wanted to evaluate the pros and cons of OpenShift and of microservices running in ephemeral containers on a real project. Migrating the virtual services, which are test infrastructure, was a large enough project to surface potential issues with the new OpenShift cluster setup. It did not, however, carry many critical risks, as no real users, such as production services, were running on the cluster.

The teams used a commercial tool to simulate the IBM MQ messaging protocol, which WireMock does not support.

The teams shared their OpenShift, WireMock, and commercial tool configuration files with each other, which saved the teams that migrated later a lot of time.
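Sharing configuration works well with WireMock because stub mappings are plain JSON files: on startup, WireMock loads every file in the `mappings` directory under its root directory. The following is a minimal sketch, assuming stubs are generated in Python and committed to a shared Git repository; the helper names, the file name, and the `/accounts/123` endpoint are hypothetical.

```python
import json
from pathlib import Path


def wiremock_stub(method: str, url_path: str, status: int, body: dict) -> dict:
    """Build one stub mapping in WireMock's JSON file format."""
    return {
        "request": {"method": method, "urlPath": url_path},
        "response": {
            "status": status,
            "headers": {"Content-Type": "application/json"},
            "jsonBody": body,  # WireMock serializes this object as the response body
        },
    }


def write_stub(root: Path, file_name: str, stub: dict) -> Path:
    """Write a stub into <root>/mappings, where WireMock looks for stub files."""
    mappings_dir = root / "mappings"
    mappings_dir.mkdir(parents=True, exist_ok=True)
    target = mappings_dir / file_name
    # Sorted keys and fixed indentation keep Git diffs small when files are shared.
    target.write_text(json.dumps(stub, indent=2, sort_keys=True) + "\n", encoding="utf-8")
    return target
```

For example, `write_stub(Path("simulators/accounts"), "get-account.json", wiremock_stub("GET", "/accounts/123", 200, {"id": "123"}))` produces a file another team can copy or mount as is.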

Migrating To Different Commercial Tools

I worked with the teams that migrated to WireMock and the microservice-like commercial API simulation tool. I did not work directly with the other groups at the bank, but I was briefed on their migrations to WireMock, Mountebank, and other commercial tools.

Different teams migrated to different commercial offerings because the other teams were already using other products from one vendor, so it made sense to buy a different tool from that same vendor. Those teams also did not need to shift architecture paradigms to ephemeral containers and infrastructure-as-code just yet; they continued working with a monolithic-like architecture. They migrated several hundred HTTP services to Mountebank and a few dozen non-HTTP ones, such as mainframe protocols, to the other commercial tool.

A few teams decided to keep using the original commercial tool and not migrate any virtual services, because it met their requirements and they could afford the licenses.

The takeaway is that letting teams choose the tools that match their requirements can be an effective way to implement service virtualization at a large enterprise. Fast-moving product teams want decoupled schedules and the freedom to choose the best tool for the job, as self-service, autonomous teams.

Migrate to a New Way of Service Virtualization

Several teams at the bank took this migration as an opportunity to move yet another piece of their infrastructure from a centrally governed model to a self-service one. Those teams care less about cutting costs to the absolute minimum and more about the fastest possible time to market in a competitive area.

Fast-moving product teams would like more control over the infrastructure of the tools they run. They would also like to run service virtualization the same way they run their microservices and other tools: in ephemeral test containers that they spin up on demand.

One of the three teams in the case study now runs its performance and load tests daily, and the whole ephemeral infrastructure is re-created for every test run. The other teams chose not to switch to ephemeral containers and deployed the virtual services in long-running containers, as that was how the rest of their development was done.
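Teams that keep WireMock in long-running containers can still approximate a clean slate per test run through the admin API. The sketch below assumes a reachable WireMock instance: it builds a `POST /__admin/reset` request, which resets stub mappings to their default state and clears the request journal, followed by one `POST /__admin/mappings` per stub. The function names and the base URL are hypothetical.

```python
import json
import urllib.request
from typing import Optional


def admin_request(base_url: str, path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a POST request to the WireMock admin API."""
    body = json.dumps(payload).encode("utf-8") if payload is not None else b""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/__admin/{path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def prepare_test_run(base_url: str, stubs: list) -> list:
    """Requests that reset one WireMock instance and load this run's stubs."""
    # Reset first so stubs and journal entries from earlier runs do not leak in.
    batch = [admin_request(base_url, "reset")]
    # Then register each stub mapping for this run, one request per stub.
    batch.extend(admin_request(base_url, "mappings", stub) for stub in stubs)
    return batch


def send_all(batch: list, timeout: float = 5.0) -> None:
    """Send the requests in order; urlopen raises HTTPError on 4xx/5xx replies."""
    for request in batch:
        with urllib.request.urlopen(request, timeout=timeout):
            pass
```

A pipeline step would call `send_all(prepare_test_run(...))` before each load test. Separating request construction from sending keeps the setup logic testable without a running WireMock.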

| | Centrally managed service virtualization (i.e., a Center of Excellence, COE) | Self-service ephemeral service virtualization test containers |
|---|---|---|
| Management of the SV tool servers | Typically a central team of administrators (COE) | The product team that needs the API simulators |
| Purchasing of SV tool licenses | Typically a central team of administrators (COE) | The product team that needs the API simulators |
| Creation of virtual services | The product team that needs the virtual services | The product team that needs the API simulators |
| Lifecycle | Typically long-lived instances running 24/7 | Typically ephemeral instances spun up on demand |
| Development technology | Typically VMs | Typically ephemeral test containers |
| Deployment architecture | Typically a few shared service virtualization environments | Many ephemeral test containers running on local laptops, inside CI/CD pipelines, in OpenShift, etc. |
| Securing virtual services | Virtual services in shared environments need to be secured via HTTP authorization or client certificate authentication. | Access to the API simulator configuration is managed via Git. There is less need to secure endpoints, because they are ephemeral and typically available only locally and in CI/CD pipelines, not in shared environments. |

Lessons Learned

The bank learned several lessons during the migration that will hopefully help you on your own migration journey.

Commercial Off-the-Shelf Tools Are Feature-Rich

Commercial off-the-shelf offerings have been on the market for decades and have many features that open-source tools like WireMock or Mountebank lack. For most use cases, though, your team can implement a workaround in an acceptable time frame, for example, a custom code extension in WireMock. Missing features in OpenSource tools should not stop you from exploring whether a migration project is likely to succeed, especially if you can fill the gaps with lower-cost commercial offerings.

WireMock and Mountebank Are Excellent Open Source Tools

WireMock and Mountebank are excellent OpenSource tools: they have many contributors, are well maintained, and are still growing in popularity. The downside of WireMock is that it supports HTTP(S) but not IBM MQ, RabbitMQ, gRPC, or other protocols and technologies. Mountebank supports a few more protocols, but the ones listed above, which this particular bank needed, are not yet available.
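Mountebank, by contrast, is configured through imposters: each imposter listens on a port for one protocol, declared in its `protocol` field, and holds stubs made of predicates and responses. The sketch below builds an HTTP imposter and posts it to Mountebank's admin API, which listens on port 2525 by default. The port 4545, the endpoint, and the helper names are hypothetical, and non-HTTP protocols would need protocol-specific predicates and responses that are not shown here.

```python
import json
import urllib.request


def http_imposter(port: int, method: str, path: str, status: int, body: dict) -> dict:
    """Build a Mountebank imposter definition with a single HTTP stub."""
    return {
        "port": port,
        "protocol": "http",
        "stubs": [
            {
                # The stub matches only when every predicate holds.
                "predicates": [{"equals": {"method": method, "path": path}}],
                "responses": [
                    {
                        "is": {
                            "statusCode": status,
                            "headers": {"Content-Type": "application/json"},
                            "body": json.dumps(body),
                        }
                    }
                ],
            }
        ],
    }


def create_imposter(imposter: dict, admin_url: str = "http://localhost:2525") -> int:
    """POST the imposter to Mountebank's /imposters endpoint; return the HTTP status."""
    request = urllib.request.Request(
        f"{admin_url.rstrip('/')}/imposters",
        data=json.dumps(imposter).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5.0) as response:
        return response.status
```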

For a complete migration, your teams might need to supplement these tools with commercial offerings to support protocols or advanced features not available in OpenSource tools.

Choose a Commercial Vendor That Will Help With the Migration

If you run on VMs (virtual machines), another commercial service virtualization tool can be an adequate replacement for your current tooling. If there is no need to change the deployment paradigm from VMs to containers, a tool from the same family of tools will suffice. Just be mindful of the cost, as these tools share a similar pricing model.

If you want to deploy your services in OpenShift or other flavors of Kubernetes running containers, look for tools compatible with an infrastructure-as-code approach that self-service teams can manage, such as OpenSource WireMock, Mountebank, or selected commercial offerings.

If you choose to work with a commercial vendor, ask the vendor's consultants whether they can help you avoid typical migration mistakes. You can outsource part of the work to them. Choose the vendor carefully: look at how responsive they are to fixing issues in the tool and whether they are open to joint roadmap prioritization.

A commercial vendor can also help you by providing service virtualization migration tools.

Allow Teams To Choose Their Schedule and Tools

An effective way to migrate a large organization from commercial tooling to OpenSource is to allow individual departments or teams to decide on the best tool for the job. Each group can choose a tool within its budget that meets its unique criteria during the migration.

Different teams will need to move on different schedules. The amount of work to migrate will differ; some groups can have only a few services, and some will have hundreds. On top of that, product teams on the critical path that work with high-visibility deliverables will need help scheduling the migration work. Decoupling the teams' schedules and allowing them to migrate at their chosen pace has proven to be most effective in this case.

The overall migration can take 6-24 months, depending on the scale of your organization, and requires effective communication and planning between the product teams and the infrastructure team managing the existing service virtualization infrastructure.

To reduce risk during the migration and to handle the scale of the project, the teams at this bank took a multiple J-curve approach: they did several small migrations instead of one big switchover.

Share Knowledge, Configuration, and Code

Using an internal mailing list to share knowledge about the migration and the new tools has proven to be an effective way to spread know-how, best practices, common mistakes, and other learnings. The teams also used Confluence pages to capture and share expertise. Brown-bag lunch sessions (workshops) also helped build relationships between the change agents and the teams.

The teams should share OpenShift configuration files and actual simulators where possible.

Decide on Architecture — Old or New

Teams must decide whether they want to run service virtualization in ephemeral test containers across many environments, such as local machines, CI/CD pipelines, and OpenShift. The alternative is to stay with the traditional approach of long-running 24/7 instances shared by many teams and builds (see the table above for a comparison).
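Running the same simulator locally and in a CI/CD pipeline can be as simple as starting a throwaway container. This sketch assumes the Docker CLI and the official `wiremock/wiremock` image, which serves on port 8080 and reads `mappings/` and `__files/` from `/home/wiremock`. The helper names are hypothetical, the `latest` tag is a placeholder you would pin in practice, and an OpenShift deployment would use a manifest instead, which is not shown.

```python
import subprocess
from pathlib import Path


def wiremock_container_cmd(stub_root: Path, host_port: int = 8080,
                           image: str = "wiremock/wiremock:latest") -> list[str]:
    """docker CLI arguments for a throwaway WireMock container."""
    return [
        "docker", "run",
        "--rm",  # delete the container as soon as it stops
        "-d",    # run detached; docker prints the new container ID
        "-p", f"{host_port}:8080",  # WireMock listens on 8080 inside the container
        # The image reads mappings/ and __files/ from /home/wiremock.
        "-v", f"{stub_root.resolve()}:/home/wiremock",
        image,   # placeholder tag: pin a specific version in practice
    ]


def start_wiremock(stub_root: Path, host_port: int = 8080) -> str:
    """Start the container and return its ID (requires a local Docker daemon)."""
    result = subprocess.run(wiremock_container_cmd(stub_root, host_port),
                            check=True, capture_output=True, text=True)
    return result.stdout.strip()


def stop_container(container_id: str) -> None:
    # Because the container was started with --rm, stopping it also removes it.
    subprocess.run(["docker", "stop", container_id], check=True, capture_output=True)
```

Mounting the same Git-managed stub directory in every environment keeps local runs and pipeline runs on identical simulator behavior.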

The teams in this case study looked at how they deploy software today. For those already using other ephemeral test containers, deploying virtual services the same way was a logical next step. The teams working mostly with monolithic architectures kept that approach after the migration.

Manage the Lagging Cohort

Some teams will be happy with the existing tooling and want to keep it for various reasons, such as lacking the capability or capacity to do the migration work now.

They can stay with the existing tooling as long as it is within their budget and does not interfere with other departments' KPIs. An alternative is to postpone their migration. Some teams may also be required to migrate if risks in the vendor relationship justify it.

Use-Case-Specific Tools Comparison

For this bank, the tool choice was simplified because the scope of the migration was narrowed to its teams' specific use cases, as described above.

The tools discussed in this case study have many other applications, depending on the use case. If you want to discuss specific capabilities or features, it is best to contact the OpenSource tools' mailing lists or the chosen commercial vendor directly.

Next Steps

- Review the Wikipedia comparison of API simulation tools to see what OpenSource and commercial vendors offer

- Reach out to the article author Wojciech Bulaty if you need help with the migration by leaving a comment below, via LinkedIn, or email him at “Wojtek [at] trafficparrot.com”

Opinions expressed by DZone contributors are their own.
