11 Patterns to Secure Microservice Architectures

Here are eleven patterns and best practices for securing your microservice architectures.

Microservices are a trendy topic; it seems like everyone is learning about or using them. And this makes sense since they are a wonderful tool that helps deliver code more quickly.

My friend, Chris Richardson, is an expert on this topic. He runs microservices.io, a website which lists a great number of microservice patterns. In a recent blog post he offered some helpful guidelines:

Why Microservices?

    IF you are developing a large/complex application
    AND you need to deliver it rapidly, frequently and reliably over a long period of time
    THEN the Microservice Architecture is often a good choice

When looking at Chris’ website, I noticed that “Access token” is the only item under security. In that same vein, not everyone will have a need for the patterns listed in this post. If I say to use PASETO tokens instead of JWT (when possible), that will be a challenge for developers that use Okta or other IdPs that don’t offer PASETO.

Watch this blog post as a presentation on YouTube.

1. Be Secure by Design

Secure code is the best code. Secure by design means that you bake security into your software design from the beginning. If you have user input, sanitize the data and remove malicious characters.

I asked my friend Rob Winch what he thought about removing malicious characters. Rob is the lead of the Spring Security project, and widely considered a security expert.

I think that it makes sense to design your code to be secure from the beginning. However, removing malicious characters is tricky at best. What is a malicious character really depends on the context that it is used in. You could ensure there are no malicious characters in an HTML context only to find out that there are other injection attacks (i.e. JavaScript, SQL, etc). It is important to note that even encoding for HTML documents is contextually based.

What’s more is it isn’t always practical to limit the characters. In many contexts a ' is a malicious character, but this is a perfectly valid character in someone’s name. What should be done then?

I find it better to ensure that the characters are properly encoded in the context that they are being used rather than trying to limit the characters. — Rob Winch
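To make Rob's point concrete, here is a minimal sketch of encoding for one specific context: HTML element content and quoted attribute values. The `HtmlText` class and its `escape` method are hypothetical names invented for this illustration, and the sketch does not cover JavaScript, URL, CSS, or SQL contexts. In a real project you would more likely rely on a maintained encoder such as the OWASP Java Encoder.

```java
// Hypothetical helper for illustration: encodes text for HTML element content
// and quoted attribute values only. Other contexts need their own encoders.
final class HtmlText {

    private HtmlText() {
    }

    static String escape(String input) {
        StringBuilder out = new StringBuilder(input.length() + 16);
        for (int i = 0; i < input.length(); i++) {
            char c = input.charAt(i);
            switch (c) {
                case '&':
                    out.append("&amp;");
                    break;
                case '<':
                    out.append("&lt;");
                    break;
                case '>':
                    out.append("&gt;");
                    break;
                case '"':
                    out.append("&quot;");
                    break;
                case '\'':
                    out.append("&#39;");
                    break;
                default:
                    out.append(c);
            }
        }
        return out.toString();
    }
}
```

With this approach a name like `O'Brien` is stored unchanged and only encoded at the moment it is written into an HTML page, so the apostrophe stays valid data rather than being rejected as a "malicious character".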

As engineers, we’re taught early on about the importance of creating well-designed software and architectures. You study it and take pride in it. Design is a natural part of building software.

Well-known security threats should drive design decisions in security architectures. Reusable techniques and patterns provide solutions for enforcing the necessary authentication, authorization, confidentiality, data integrity, privacy, accountability, and availability, even when the system is under attack.

From the InfoQ Podcast and its Johnny Xmas on Web Security & the Anatomy of a Hack episode:

The OWASP Top 10 really hasn’t changed all that much in the last ten years. For example, despite being the number-one approach used to educate defensive engineers on how to protect their apps, SQL injection is still the most common attack. We continue to repeat the same mistakes that have exposed systems for a decade now. — Johnny Xmas

This is why security precautions need to be baked into your architecture.

OWASP

The Open Web Application Security Project (OWASP) is a nonprofit foundation that works to improve the security of software. They are one of the most widely used sources for developers and technologists to secure the web. They provide and encourage:

- Tools and Resources

- Community and Networking

- Education & Training

I like the example from the Secure by Design book, by Dan Bergh Johnsson, Daniel Deogun, and Daniel Sawano. They show how you can develop a basic User object that displays a username on a page.

    public class User {
        private final Long id;
        private final String username;

        public User(final Long id, final String username) {
            this.id = id;
            this.username = username;
        }

        // ...
    }

If you accept any string value for the username, someone could use the username to perform XSS attacks. You can fix this with input validation, like the following.

    import static com.example.xss.ValidationUtils.validateForXSS;
    import static org.apache.commons.lang3.Validate.notNull;

    public class User {
        private final Long id;
        private final String username;

        public User(final Long id, final String username) {
            notNull(id);
            notNull(username);

            this.id = notNull(id);
            this.username = validateForXSS(username);
        }
    }

However, this code is still problematic.

- Developers need to be thinking about security vulnerabilities

- Developers have to be security experts and know to use validateForXSS()

- It assumes that the person writing the code can think of every potential weakness that might occur now or in the future

A better design is to create a Username class that encapsulates all of the security concerns.

    import static org.apache.commons.lang3.Validate.*;

    public class Username {
        private static final int MINIMUM_LENGTH = 4;
        private static final int MAXIMUM_LENGTH = 40;
        private static final String VALID_CHARACTERS = "[A-Za-z0-9_-]+";

        private final String value;

        public Username(final String value) {
            notBlank(value);

            final String trimmed = value.trim();
            inclusiveBetween(MINIMUM_LENGTH,
                             MAXIMUM_LENGTH,
                             trimmed.length());
            matchesPattern(trimmed,
                           VALID_CHARACTERS,
                           "Allowed characters are: %s", VALID_CHARACTERS);

            this.value = trimmed;
        }

        public String value() {
            return value;
        }
    }

    public class User {
        private final Long id;
        private final Username username;

        public User(final Long id, final Username username) {
            this.id = notNull(id);
            this.username = notNull(username);
        }
    }

This way, your design makes it easier for developers to write secure code.

Writing and shipping secure code is going to become more and more important as we put more software in robots and embedded devices.

2. Scan Dependencies

Third-party dependencies make up 80% of the code you deploy to production. Many of the libraries we use to develop software depend on other libraries. Transitive dependencies lead to a (sometimes) large chain of dependencies, some of which might have security vulnerabilities.

You can use a scanning program on your source code repository to identify vulnerable dependencies. You should scan for vulnerabilities in your deployment pipeline, in your primary line of code, in released versions of code, and in new code contributions.

I recommend watching “The (Application) Patching Manifesto” by Jeremy Long. It’s an excellent presentation. A few takeaways from the talk:

Snyk Survey: 25% projects don’t report security issue; Majority only add release note; Only 10% report CVE;

In short, use tools to prioritize but ALWAYS update dependencies! — Rob Winch

If you’re a GitHub user, you can use Dependabot to provide automated updates via pull requests. GitHub also provides security alerts you can enable on your repository.

You can also use more full-featured solutions, such as Snyk and JFrog Xray.

3. Use HTTPS Everywhere

You should use HTTPS everywhere, even for static sites. If you have an HTTP connection, change it to an HTTPS one. Make sure all aspects of your workflow—from Maven repositories to XSDs—refer to HTTPS URIs.

HTTPS is HTTP sent over Transport Layer Security (TLS), a protocol designed to ensure privacy and data integrity between computer applications. How HTTPS Works is an excellent site for learning more about HTTPS.

To use HTTPS, you need a certificate. It’s a driver’s license of sorts and serves two functions. It grants permissions to use encrypted communication via Public Key Infrastructure (PKI), and also authenticates the identity of the certificate’s holder.

Let’s Encrypt offers free certificates, and you can use its API to automate renewing them. From a recent InfoQ article by Sergio De Simone:

Let’s Encrypt launched on April 12, 2016 and somehow transformed the Internet by making a costly and lengthy process, such as using HTTPS through an X.509 certificate, into a straightforward, free, widely available service. Recently, the organization announced it has issued one billion certificates overall since its foundation and it is estimated that Let’s Encrypt doubled the Internet’s percentage of secure websites.

Let’s Encrypt recommends you use Certbot to obtain and renew your certificates. Certbot is a free, open-source software tool for automatically using Let’s Encrypt certificates on manually-administrated websites to enable HTTPS. The Electronic Frontier Foundation (EFF) created and maintains Certbot.

The Certbot website lets you choose your web server and system, then provides the instructions for automating certificate generation and renewal. For example, it has instructions for Ubuntu with Nginx.

To use a certificate with Spring Boot, you just need some configuration.

src/main/resources/application.yml

    server:
      ssl:
        key-store: classpath:keystore.p12
        key-store-password: password
        key-store-type: pkcs12
        key-alias: tomcat
        key-password: password
      port: 8443

Storing passwords and secrets in configuration files is a bad idea. I’ll show you how to encrypt keys like this below.

You also might want to force HTTPS. You can see how to do that in my previous blog post 10 Excellent Ways to Secure Your Spring Boot Application. Often, forcing HTTPS uses an HTTP Strict-Transport-Security response header (abbreviated as HSTS) to tell browsers they should only access a website using HTTPS.
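As a rough illustration of what that header looks like, the sketch below builds an HSTS header value in plain Java. The `HstsHeader` class is a hypothetical helper made up for this example and is not how Spring Security exposes the feature; only the header name and the `max-age` and `includeSubDomains` directives come from the HSTS specification.

```java
// Hypothetical helper for illustration: builds the value of the
// Strict-Transport-Security response header.
final class HstsHeader {

    static final String NAME = "Strict-Transport-Security";

    private HstsHeader() {
    }

    // maxAgeSeconds tells the browser how long to remember to use HTTPS only.
    static String value(long maxAgeSeconds, boolean includeSubDomains) {
        if (maxAgeSeconds < 0) {
            throw new IllegalArgumentException("max-age must not be negative");
        }
        String value = "max-age=" + maxAgeSeconds;
        return includeSubDomains ? value + "; includeSubDomains" : value;
    }
}
```

A server would add this header to responses it sends over HTTPS, for example with a one-year `max-age` of 31536000 seconds. Browsers ignore the header when it arrives over plain HTTP.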

To see how to set up your Spring-based microservice architecture to use HTTPS locally, see Secure Service-to-Service Spring Microservices with HTTPS and OAuth 2.0.

You might ask “Why do we need HTTPS inside our network?”

That is an excellent question! It’s good to protect data you transmit because there may be threats from inside your network.

Johnny Xmas describes how a web attack typically happens in a recent InfoQ Podcast. Phishing and guessing people’s credentials are incredibly effective techniques. In both cases, the attacker can gain access to an in-network machine (with administrative rights) and wreak havoc.

Secure GraphQL APIs

GraphQL uses HTTP, so you don’t have to do any extra logic from a security perspective. The biggest thing you’ll need to do is keep your GraphQL implementation up-to-date. GraphQL relies on making POST requests for everything. The server you use will be responsible for input sanitization.

If you’d like to connect to a GraphQL server with OAuth 2.0 and React, you just need to pass an Authorization header.

Apollo is a platform for building a data graph, and Apollo Client has implementations for React and Angular, among others.

    const clientParam = { uri: '/graphql' };
    const myAuth = this.props && this.props.auth;

    if (myAuth) {
      clientParam.request = async (operation) => {
        const token = await myAuth.getAccessToken();
        operation.setContext({ headers: { authorization: token ? `Bearer ${token}` : '' } });
      };
    }

    const client = new ApolloClient(clientParam);

Configuring a secure Apollo Client looks similar for Angular.

    export function createApollo(httpLink: HttpLink, oktaAuth: OktaAuthService) {
      const http = httpLink.create({ uri });

      const auth = setContext((_, { headers }) => {
        return oktaAuth.getAccessToken().then(token => {
          return token ? { headers: { Authorization: `Bearer ${token}` } } : {};
        });
      });

      return {
        link: auth.concat(http),
        cache: new InMemoryCache()
      };
    }

On the server, you can use whatever you use to secure your REST API endpoints to secure GraphQL.

Secure RSocket Endpoints

RSocket is a next-generation, reactive, layer 5 application communication protocol for building today’s modern cloud-native and microservice applications.

What does all that mean? It means RSocket has reactive semantics built in, so it can communicate backpressure to clients and provide more reliable communications. The RSocket website says implementations are available for Java, JavaScript, Go, .NET, C++, and Kotlin.

Spring Security 5.3.0 has full support for securing RSocket applications.

To learn more about RSocket, I recommend reading Getting Started With RSocket: Spring Boot Server.

4. Use Access and Identity Tokens

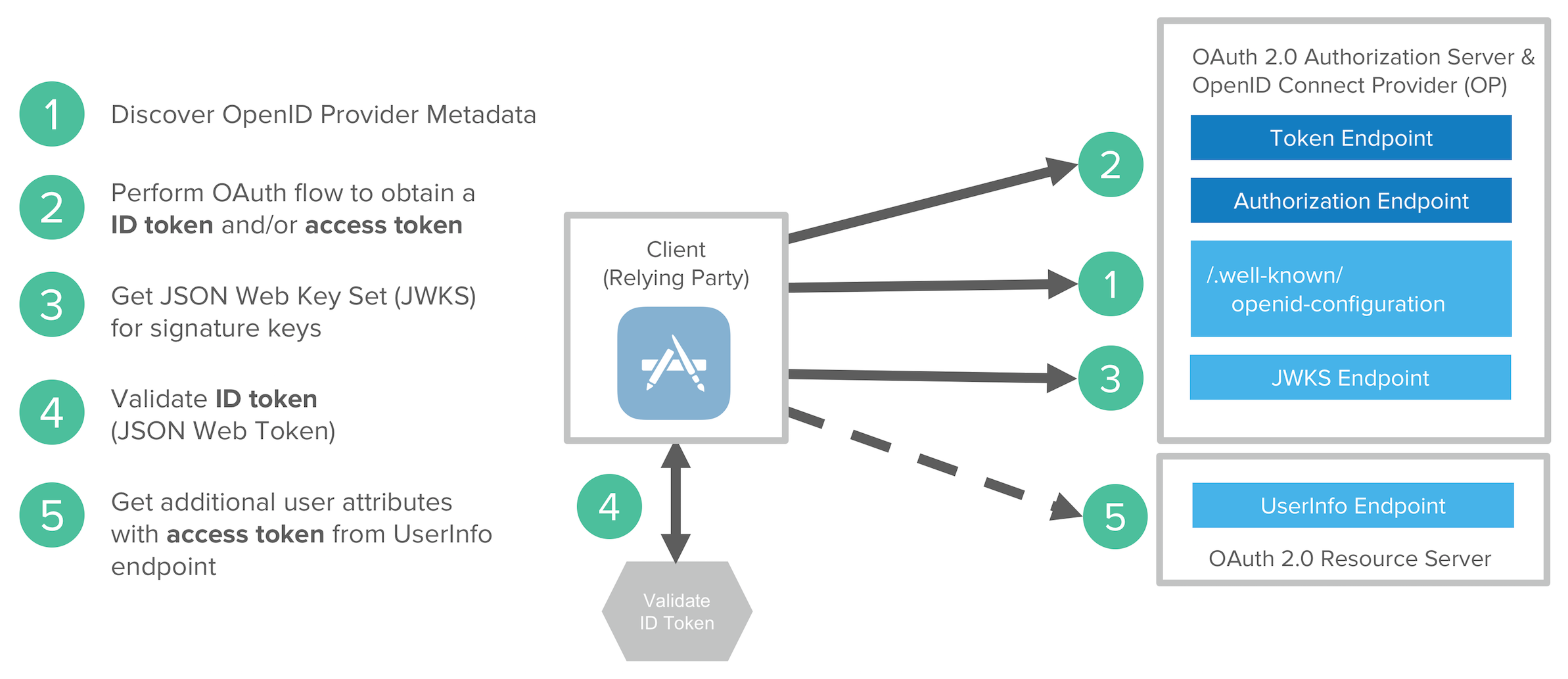

OAuth 2.0 has provided delegated authorization since 2012. OpenID Connect added federated identity on top of OAuth 2.0 in 2014. Together, they offer a standard spec you can write code against and have confidence that it will work across IdPs (Identity Providers).

The spec also allows you to look up the identity of the user by sending an access token to the /userinfo endpoint. You can look up the URI for this endpoint using OIDC discovery, which provides a standard way to obtain a user’s identity.

If you’re communicating between microservices, you can use OAuth 2.0’s client credentials flow to implement secure server-to-server communication. In the diagram below, the API Client is one server, and the API Server is another.

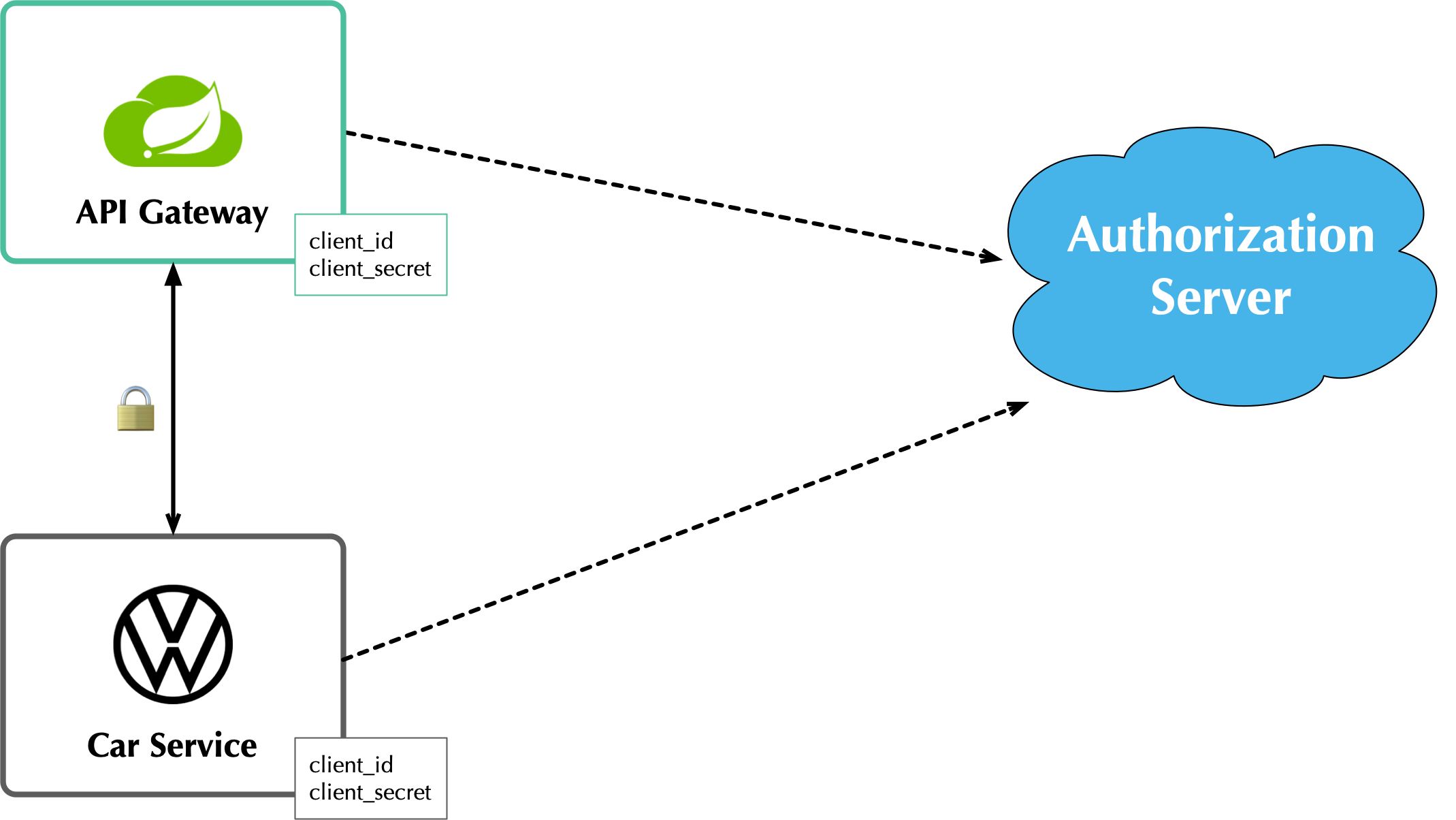
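The token request in the client credentials flow can be sketched with the JDK's built-in HTTP client types (Java 11 or later). This is a minimal sketch under assumptions: the `ClientCredentialsRequest` class, the client ID and secret, and the token endpoint URL in the usage note are all placeholders, and your authorization server's documentation decides which scopes and which client authentication method it accepts. The example only builds the request; sending it and parsing the JSON response are left out.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper for illustration: builds an OAuth 2.0 client credentials
// token request that authenticates the client with HTTP Basic.
final class ClientCredentialsRequest {

    private ClientCredentialsRequest() {
    }

    // Simplified: assumes the ID and secret contain no characters that would
    // need form-encoding before being Base64-encoded.
    static String basicAuth(String clientId, String clientSecret) {
        String pair = clientId + ":" + clientSecret;
        return "Basic " + Base64.getEncoder().encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    static String formBody(String scope) {
        return "grant_type=client_credentials&scope=" + URLEncoder.encode(scope, StandardCharsets.UTF_8);
    }

    static HttpRequest build(URI tokenEndpoint, String clientId, String clientSecret, String scope) {
        return HttpRequest.newBuilder(tokenEndpoint)
                .header("Authorization", basicAuth(clientId, clientSecret))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .header("Accept", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(scope)))
                .build();
    }
}
```

The API Client would send this request to the token endpoint (for example a placeholder like `https://auth.example.com/oauth2/token`), read the `access_token` field from the JSON response, and pass it to the API Server in an `Authorization: Bearer` header.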

Authorization Servers: Many-to-One or One-to-One?

If you are using OAuth 2.0 to secure your service, you’re using an authorization server. The typical setup is a many-to-one relationship, where you have many microservices talking to one authorization server.

The pros of this approach:

- Services can use access tokens to talk to any other internal services (since they were all minted by the same authorization server)

- A single place to look for all scope and permission definitions

- Easier to manage for developers and security people

- Faster (less chatty)

The cons:

- Opens you up to the possibility of rogue services causing problems with their tokens

- If one service’s token is compromised, all services are at risk

- Vague security boundaries

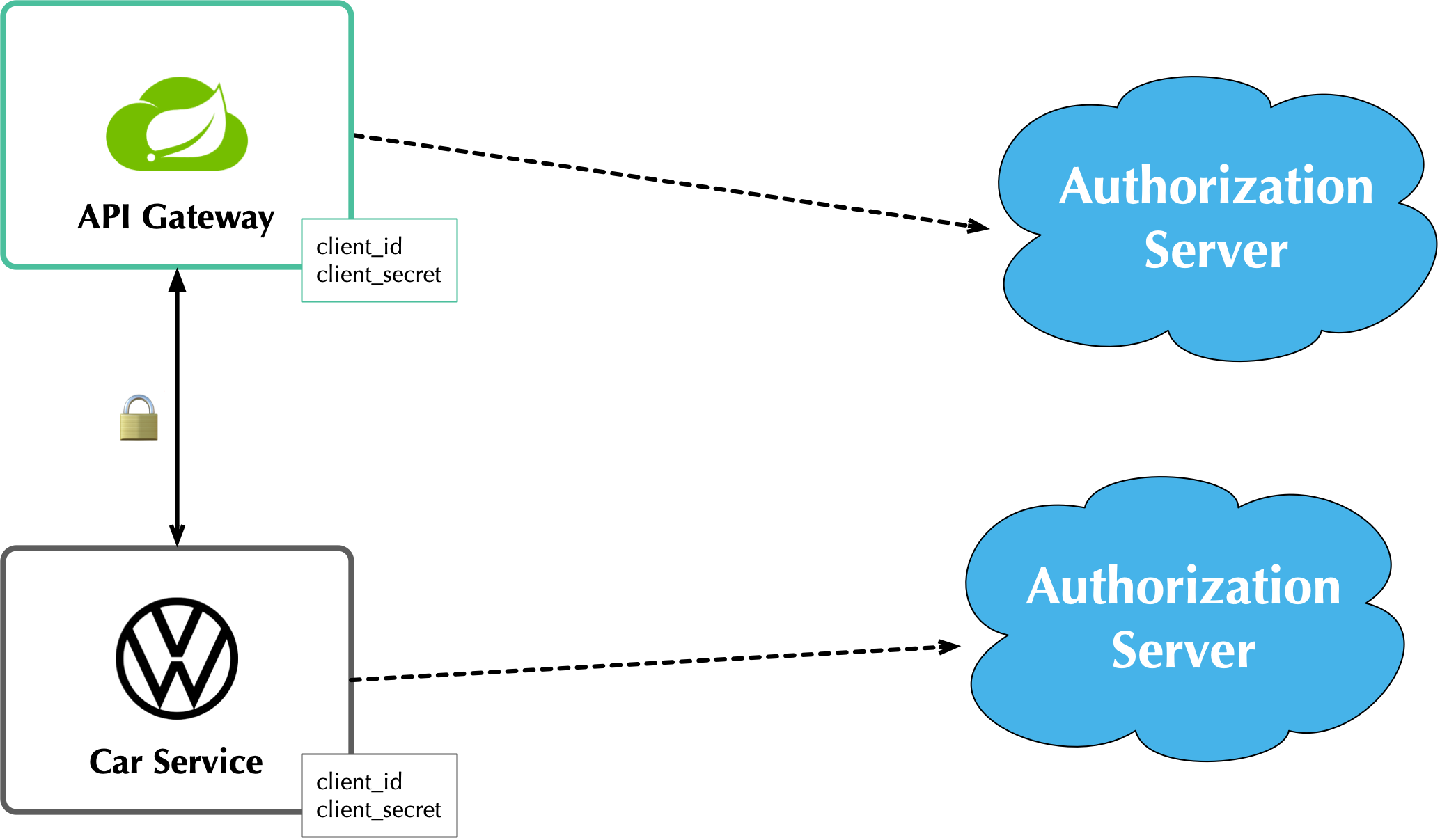

The other, more secure, alternative is a one-to-one approach where every microservice is bound to its own authorization server. If they need to talk to each other, they need to register before trusting.

This architecture allows you to have clearly defined security boundaries. However, it’s slower because it’s more chatty, and it’s harder to manage.

My recommendation: use a many-to-one relationship until you have a plan and documentation to support a one-to-one relationship.

Use PASETO Tokens Over JWT

JSON Web Tokens (JWT) have become very popular in the past several years, but they’ve also come under fire. Mostly because a lot of developers try to use JWT to avoid server-side storage for sessions. See Why JWTs Suck as Session Tokens to learn why this is not recommended.

PASETO stands for platform-agnostic security tokens. Paseto is everything you love about JOSE (JWT, JWE, JWS) without any of the many design deficits that plague the JOSE standards.

My colleagues Randall Degges and Brian Demers wrote up some informative posts on PASETO.

Long story short: using PASETO tokens isn’t as easy as it sounds. If you want to write your own security, it is possible. But if you’re going to use a well-known cloud provider, chances are it doesn’t support the PASETO standard (yet).

5. Encrypt and Protect Secrets

When you develop microservices that talk to authorization servers and other services, the microservices likely have secrets that they use for communication. These secrets might be an API key, or a client secret, or credentials for basic authentication.

The #1 rule for secrets is don’t check them into source control. Even if you develop code in a private repository, it’s a nasty habit, and if you’re working on production code, it’s likely to cause trouble.

The first step to being more secure with secrets is to store them in environment variables. But this is only the beginning. You should do your best to encrypt your secrets.
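As a small sketch of that first step, the helper below reads a secret from an environment map and fails fast when it is missing, so a misconfigured service never starts with an empty credential. The `Secrets` class and the `OKTA_CLIENT_SECRET` variable name are hypothetical and chosen only for illustration.

```java
import java.util.Map;

// Hypothetical helper for illustration: looks up a required secret and
// refuses to continue when it is absent or blank.
final class Secrets {

    private Secrets() {
    }

    static String require(Map<String, String> environment, String name) {
        String value = environment.get(name);
        if (value == null || value.trim().isEmpty()) {
            // Report only the variable name, never the secret itself.
            throw new IllegalStateException("Missing required secret: " + name);
        }
        return value;
    }
}
```

At startup you might call `Secrets.require(System.getenv(), "OKTA_CLIENT_SECRET")`. Passing the map in as a parameter keeps the lookup easy to test without touching the real process environment.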

In the Java world, I’m most familiar with HashiCorp Vault and Spring Vault.

My co-worker Randall is a fan of Amazon KMS.

In short, the way it works is:

- You generate a master key using KMS

- Each time you want to encrypt data, you ask AWS to generate a new data key for you. A data key is a unique encryption key AWS generates for each piece of data you need to encrypt.

- You then encrypt your data using the data key

- Amazon will then encrypt your data key using the master key

- You will then merge the encrypted data key with the encrypted data to create an encrypted message. The encrypted message is your final output, which is what you would store as a file or in a database.

The reason this is so convenient is that you never need to worry about safeguarding keys—the keys someone would need to decrypt any data are always unique and safe.
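The steps above describe envelope encryption, which can be sketched locally with the JDK's AES-GCM support. This is an illustration under a big assumption: the master key here is an ordinary in-memory AES key standing in for KMS, whereas with the real service the master key never leaves AWS and the wrapping of the data key happens on Amazon's side. The `EnvelopeEncryptionSketch` class and the message layout (length prefix, encrypted data key, encrypted data) are invented for this example and are not the AWS SDK's API or wire format.

```java
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

// Local illustration of envelope encryption. The master key is a stand-in for KMS.
final class EnvelopeEncryptionSketch {

    private static final int IV_BYTES = 12;
    private static final int TAG_BITS = 128;
    private static final SecureRandom RANDOM = new SecureRandom();

    private EnvelopeEncryptionSketch() {
    }

    static SecretKey newAesKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("AES key generation failed", e);
        }
    }

    // AES-GCM encrypt; the output is the random IV followed by ciphertext and tag.
    private static byte[] seal(SecretKey key, byte[] plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RANDOM.nextBytes(iv);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ciphertext = cipher.doFinal(plaintext);
            return ByteBuffer.allocate(iv.length + ciphertext.length).put(iv).put(ciphertext).array();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Encryption failed", e);
        }
    }

    private static byte[] open(SecretKey key, byte[] sealed) {
        try {
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, sealed, 0, IV_BYTES));
            return cipher.doFinal(sealed, IV_BYTES, sealed.length - IV_BYTES);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Decryption failed", e);
        }
    }

    static byte[] encrypt(SecretKey masterKey, byte[] data) {
        SecretKey dataKey = newAesKey();                              // new data key per message
        byte[] encryptedData = seal(dataKey, data);                   // encrypt data with the data key
        byte[] encryptedDataKey = seal(masterKey, dataKey.getEncoded()); // wrap data key with master key
        return ByteBuffer.allocate(4 + encryptedDataKey.length + encryptedData.length)
                .putInt(encryptedDataKey.length)
                .put(encryptedDataKey)
                .put(encryptedData)
                .array();                                             // merged encrypted message
    }

    static byte[] decrypt(SecretKey masterKey, byte[] message) {
        ByteBuffer buffer = ByteBuffer.wrap(message);
        byte[] encryptedDataKey = new byte[buffer.getInt()];
        buffer.get(encryptedDataKey);
        byte[] encryptedData = new byte[buffer.remaining()];
        buffer.get(encryptedData);
        SecretKey dataKey = new SecretKeySpec(open(masterKey, encryptedDataKey), "AES");
        return open(dataKey, encryptedData);
    }
}
```

Because every message carries its own wrapped data key, only the master key has to be protected, and a leaked data key exposes a single message.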

You could also use Azure Key Vault to store your secrets.

6. Verify Security with Delivery Pipelines

Dependency and container scanning should be part of your source control monitoring system, but you should also perform tests when executing your CI (continuous integration) and CD (continuous delivery) pipelines.

Atlassian has an informative blog post titled DevSecOps: Injecting Security into CD Pipelines.

DevSecOps is the term many recommend instead of DevOps to emphasize the need to build security into DevOps initiatives. I just wish it rolled off the tongue a little easier.

Atlassian’s post recommends using security unit tests, static analysis security testing (SAST), and dynamic analysis security testing (DAST).

Your code delivery pipeline can automate these security checks, but it’ll likely take some time to set up.

To learn about a more "Continuous Hacking" approach to software delivery, check out this article from Zach Arnold and Austin Adams. They recommend the following:

- Create a whitelist of Docker base images to check against at build time

- Ensure you’re pulling cryptographically signed base images

- Sign the metadata of a pushed image cryptographically so you can check it later

- In your containers, only use Linux distributions that verify the integrity of the package using the package manager’s security features

- When pulling third-party dependencies manually, only allow HTTPS and ensure you validate checksums

- Don’t allow the program to build images whose Dockerfile specifies a sensitive host path as a volume mount
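The checksum recommendation in the list above can be sketched with the JDK's `MessageDigest`. The `ChecksumVerifier` class is a hypothetical helper for illustration; it assumes the publisher lists a SHA-256 checksum in hexadecimal and that you obtained that expected value over a trusted channel.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Locale;

// Hypothetical helper for illustration: verifies downloaded bytes against
// a published SHA-256 checksum before they are used in a build.
final class ChecksumVerifier {

    private ChecksumVerifier() {
    }

    static String sha256Hex(byte[] content) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(content);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    static boolean matches(byte[] content, String expectedSha256Hex) {
        byte[] actual = sha256Hex(content).getBytes(StandardCharsets.US_ASCII);
        byte[] expected = expectedSha256Hex.trim().toLowerCase(Locale.ROOT)
                .getBytes(StandardCharsets.US_ASCII);
        // MessageDigest.isEqual compares digests in a time-constant manner.
        return MessageDigest.isEqual(actual, expected);
    }
}
```

A build step would fail when `matches` returns false instead of continuing with an artifact that may have been tampered with.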

But what about the code? Zach and Austin use automation to analyze it, too:

- Run static code analysis on the codebase for known code-level security vulnerabilities

- Run automated dependency checkers to make sure you’re using the latest, most secure version of your dependencies

- Spin up your service, point automated penetration bots at the running containers, and see what happens

For a list of code scanners, see OWASP’s Source Code Analysis Tools.

7. Slow Down Attackers

If someone tries to attack your APIs with hundreds of gigs of username/password combinations, it could take a while for them to authenticate successfully. If you can detect this attack and slow down your service, it’s likely the attacker will go away. It’s simply not worth their time.

You can implement rate-limiting in your code (often with an open-source library) or your API Gateway. I’m sure there are other options, but these will likely be the most straightforward to implement.
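If you go the in-code route, one of the simplest approaches is a fixed-window counter per client. The sketch below is an assumption-laden illustration, not a production limiter: `LoginRateLimiter` is a hypothetical class, the client key (an IP address or username) is up to you, the caller passes the current time so the logic stays testable, and state lives in memory on a single instance. It also never evicts old entries, which a real implementation would need to do.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical fixed-window rate limiter for illustration.
// Allows at most maxAttempts per client key within each window.
final class LoginRateLimiter {

    private final int maxAttempts;
    private final long windowMillis;
    // client key -> { window start in millis, attempts seen in that window }
    private final Map<String, long[]> windows = new HashMap<>();

    LoginRateLimiter(int maxAttempts, long windowMillis) {
        if (maxAttempts < 1 || windowMillis < 1) {
            throw new IllegalArgumentException("maxAttempts and windowMillis must be positive");
        }
        this.maxAttempts = maxAttempts;
        this.windowMillis = windowMillis;
    }

    synchronized boolean tryAcquire(String clientKey, long nowMillis) {
        long[] window = windows.get(clientKey);
        if (window == null || nowMillis - window[0] >= windowMillis) {
            // First request from this client, or the previous window expired.
            window = new long[] {nowMillis, 0};
            windows.put(clientKey, window);
        }
        if (window[1] >= maxAttempts) {
            return false; // over the limit: reject or delay the request
        }
        window[1]++;
        return true;
    }
}
```

An authentication endpoint could call `tryAcquire(clientIp, System.currentTimeMillis())` before checking credentials and respond with HTTP 429 when it returns false.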

Most SaaS APIs use rate-limiting to prevent customer abuse. We at Okta have API rate limits as well as email rate limits to help protect against denial-of-service attacks.

8. Use Docker Rootless Mode

Docker 19.03 introduced a rootless mode. The developers designed this feature to reduce the security footprint of the Docker daemon and expose Docker capabilities to systems where users cannot gain root privileges.

If you’re running Docker daemons in production, this is definitely something you should look into. However, if you’re letting Kubernetes run your Docker containers, you’ll need to configure the runAsUser in your PodSecurityPolicy.

9. Use Time-Based Security

Another tip I got from Johnny Xmas on the InfoQ podcast was to use time-based security. Winn Schwartau wrote a well-known Time Based Security book that is a great resource for anyone who wants to take a deeper dive.

The idea behind time-based security is that your system is never fully secure—someone will break in. Preventing intruders is only one part of securing a system; detection and reaction are essential, too.
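Time-based security is often summarized as a simple inequality: the time your protections can hold out (P) should exceed the time it takes you to detect an intrusion (D) plus the time it takes to react (R). The sketch below encodes that rule of thumb. The `TimeBasedSecurity` class is a hypothetical name for this illustration, and the durations in the usage note are made-up numbers, not measurements.

```java
import java.time.Duration;

// Hypothetical helper for illustration: checks the P > D + R rule of thumb.
final class TimeBasedSecurity {

    private TimeBasedSecurity() {
    }

    // How long an intruder can operate before you respond.
    static Duration exposure(Duration detection, Duration reaction) {
        return detection.plus(reaction);
    }

    // True when protection outlasts detection plus reaction.
    static boolean isAdequate(Duration protection, Duration detection, Duration reaction) {
        return protection.compareTo(exposure(detection, reaction)) > 0;
    }
}
```

For example, if a control is estimated to resist an attacker for 30 minutes, detection takes 10 minutes, and the team needs 15 minutes to react, the check passes. If the control only lasts 20 minutes, it fails, which suggests investing in faster detection or reaction rather than only in stronger prevention.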

Use multi-factor authentication to slow down intruders, but also to help detect when someone with elevated privilege authenticates into a critical server (which shouldn’t happen that often). If you have something like a domain controller that controls network traffic, send an alert to your network administrator team whenever there’s a successful login.

This is just one example of trying to detect anomalies and react to them quickly.

10. Scan Docker and Kubernetes Configuration for Vulnerabilities

Docker containers are very popular in microservice architectures. Our friends at Snyk published 10 Docker Image Security Best Practices. It repeats some of the things I already mentioned, but I’ll summarize them here anyway.

- Prefer minimal base images

- Use the USER directive to make sure the least privileged user is used

- Sign and verify images to mitigate MITM attacks

- Find, fix, and monitor for open-source vulnerabilities (Snyk offers a way to scan and monitor your Docker images too)

- Don’t leak sensitive information to Docker images

- Use fixed tags for immutability

- Use COPY instead of ADD

- Use metadata labels like maintainer and securitytxt

- Use multi-stage builds for small and secure images

- Use a linter like hadolint

You might also find Top 5 Docker Vulnerabilities You Should Know from WhiteSource useful.

You should also scan your Kubernetes configuration for vulnerabilities, but there’s much more than that, so I’ll cover K8s security in the next section.

11. Know Your Cloud and Cluster Security

If you’re managing your production clusters and clouds, you’re probably aware of the 4C’s of Cloud Native Security.

Each one of the 4C’s (cloud, clusters, containers, and code) depends on the security of the layers that enclose it. It is nearly impossible to safeguard against poor security standards in cloud, containers, and code by only addressing security at the code level. However, when you deal with these areas appropriately, then adding security to your code augments an already strong base.

The Kubernetes blog has a detailed post from Andrew Martin titled 11 Ways (Not) to Get Hacked. Andrew offers these tips to harden your clusters and increase their resilience if a hacker compromises them.

- Use TLS Everywhere

- Enable RBAC with Least Privilege, Disable ABAC, and use Audit Logging

- Use a Third-Party Auth provider (like Google, GitHub - or Okta!)

- Separate and Firewall your etcd Cluster

- Rotate Encryption Keys

- Use Linux Security Features and a restricted PodSecurityPolicy

- Statically Analyse YAML

- Run Containers as a Non-Root User

- Use Network Policies (to limit traffic between pods)

- Scan Images and Run IDS (Intrusion Detection System)

- Run a Service Mesh

This blog post is from July 2018, but not a whole lot has changed. I do think there’s been a fair amount of hype around service meshes since 2018, but that hasn’t made a huge difference.

Running a service mesh like Istio might allow you to offload your security to a "shared, battle-tested set of libraries." Still, I don’t think it’s "simplified the deployment of the next generation of network security" like the blog post says it could.

Learn More About Microservices and Web Security

I hope these security patterns have helped you become a more security-conscious developer. It’s interesting to me that only half of my list pertains to developers that write code on a day-to-day basis.

- Be Secure by Design

- Scan Dependencies

- Use HTTPS Everywhere

- Use Access and Identity Tokens

- Encrypt and Protect Secrets

The rest of them seem to apply to DevOps people, or rather DevSecOps.

- Verify Security with Delivery Pipelines

- Slow Down Attackers

- Use Docker Rootless Mode

- Use Time-Based Security

- Scan Docker and Kubernetes Configuration for Vulnerabilities

- Know Your Cloud and Cluster Security

Since all of these patterns are important considerations, you should make sure to keep a close relationship between your developer and DevSecOps teams. In fact, if you’re doing microservices right, these people aren’t on separate teams! They’re on the same product team that owns the microservice from concept to production.

Looking for more? We have a few microservice and security-focused blogs I think you’ll like:

- Java Microservices with Spring Boot and Spring Cloud

- Build Secure Microservices with AWS Lambda and ASP.NET Core

- Node Microservices: From Zero to Hero

- The Hardest Thing About Data Encryption

- The Dangers of Self-Signed Certificates

We also wrote a book! API Security is a guide to building and securing APIs from the developer team at Okta.

If you liked this post and want notifications when we post others, please follow @oktadev on Twitter. We also have a YouTube channel you might enjoy. As always, please leave a comment below if you have any questions.

A huge thanks to Chris Richardson and Rob Winch for their thorough reviews and detailed feedback.

Published at DZone with permission of Matt Raible, DZone MVB. See the original article here.
